Picture this: you’re binging your favorite sci-fi series and suddenly, a grumpy AI-powered android confronts the protagonist about the ethical ramifications of their existence. It’s a fascinating scene that raises many questions about the consequences our obsession with technology could bring. The world of AI writing is no less intriguing when it comes to morality; as the industry grows, so does the debate surrounding the ethics of its application in content creation. Are we summoning a dystopia or is this just another technological leap that pushes humanity forward? Hold on tight, because we’re plunging headfirst into this murky ethical vortex as we explore the thought-provoking implications of AI writing.

When using AI for writing, it is important to consider issues such as bias and errors that stem from the quality of the data used to train the AI tool, as well as concerns about copyright infringement. It is also important to ensure that AI is used only to assist in writing, rather than to copy pre-existing text. Researchers and writers should also consider the environmental impact of power-intensive large language models, whose training and use produce carbon emissions. Finally, fully disclosing when articles or other written works have been created with AI assistance is an important step toward ethical use.

AI Writing: A Brief Overview

Artificial intelligence (AI) is transforming many industries, including writing. It has changed the way content is created, managed, and distributed on the internet, and AI algorithms are increasingly used to generate content with little or no human intervention. In short, AI writing means using software to help create written material faster and, ideally, with high accuracy.

These tools rely on natural language processing (NLP), machine learning, and deep learning techniques to analyze vast collections of text and other information. Because their underlying models have been trained on such large amounts of data, they can learn to generate fluent, high-quality content.

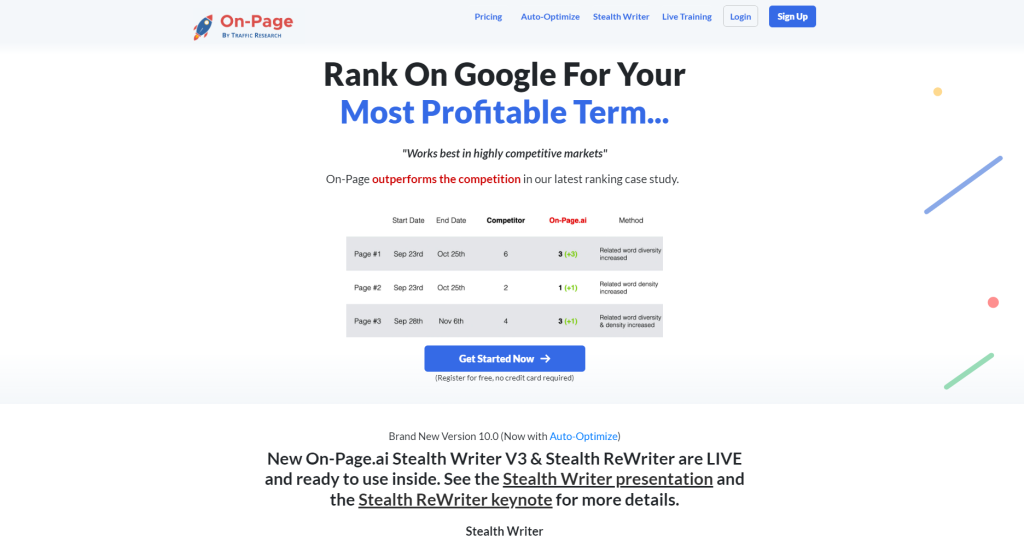

One example of an AI writing tool is On-Page.ai, which utilizes NLP technology to identify the most important words to add to a page to rank higher in search engine results pages (SERPs). Additionally, the platform offers a Stealth Writer that harnesses advanced machine learning techniques to write articles entirely automatically. The Stealth ReWriter tool can rewrite existing web content into a more optimized version for SEO purposes.

Despite their numerous benefits, AI writing tools raise ethical concerns, which this article explores in detail.

To better understand these concerns, we first need to examine the role that algorithms play in AI writing.

Role of Algorithms in AI Writing

Algorithms are sets of rules or instructions that a computer program follows to perform specific tasks. In AI writing, algorithms analyze large collections of text and other information and use this data to generate new content. The quality of the output therefore depends heavily on both the design of the algorithm and the data used to train it.

Different algorithms approach text generation differently. Some rely on statistical models while others utilize rule-based approaches. Statistical models involve analyzing vast amounts of data to learn the patterns and structures of natural language. On the other hand, rule-based approaches rely on manually created guidelines for generating content, often based on grammatical rules.
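To make the contrast concrete, here is a toy sketch, not how any production AI writing tool works. The statistical side is a bigram model: it counts which word follows which in a tiny, hypothetical training corpus and always picks the most frequent continuation. The rule-based side fills a hand-written subject-verb-object template. The function names and the corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Statistical approach: count which word follows which in the training text."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            model[current][following] += 1
    return model


def generate_statistical(model: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Extend the text with the most frequent next word until no pattern is known."""
    words = [start]
    # .get() avoids creating empty entries in the defaultdict for unseen words
    while len(words) < max_words and model.get(words[-1]):
        words.append(model[words[-1]].most_common(1)[0][0])
    return " ".join(words)


def generate_rule_based(subject: str, verb: str, obj: str) -> str:
    """Rule-based approach: fill a hand-written subject-verb-object template."""
    return f"{subject[0].upper()}{subject[1:]} {verb} {obj}."


# Hypothetical training corpus for illustration only
corpus = [
    "ai tools draft articles quickly",
    "ai tools draft emails",
    "human writers edit articles",
]
model = train_bigrams(corpus)
print(generate_statistical(model, "ai"))  # ai tools draft articles quickly
print(generate_rule_based("the editor", "reviews", "the draft"))  # The editor reviews the draft.
```

Modern statistical systems typically use neural networks trained on far larger corpora rather than word-pair counts, but the principle carries over: the output can only reflect patterns that were present in the training data.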

The models behind AI writing tools can be trained on various data sources, from open-access repositories such as Wikipedia to specialized databases built to provide domain-specific information. The quality, accuracy, and objectivity of this data are crucial, since any biases in it will be reflected in the final output.

Proponents argue that algorithms enable more accurate and relevant text generation by distilling vast amounts of information into output that no human could produce alone. In their view, this allows AI writing tools to help writers create content faster and more efficiently, and with higher-quality results than traditional methods.

On the other hand, AI writing tools may exhibit algorithmic bias. For instance, if a machine learning system has been trained on data that lacks diversity or contains hidden biases, it may generate text that propagates those biases, even though no one intended it to.

An example of this occurred in 2016, when Microsoft launched Tay, an AI-powered chatbot designed to respond like a millennial girl. Within 24 hours of its launch on Twitter, Tay was posting offensive statements it had learned from users who deliberately fed it hostile and bigoted messages, and Microsoft took it offline.

Ethical Considerations in AI Writing

As AI technology advances and demand for automated writing solutions grows, we must consider the ethical implications of using technology to write content. AI writing tools such as the On-Page Stealth Writer offer benefits like increased efficiency and productivity, but they also carry risks and concerns that need to be taken into account.

One of the primary ethical considerations in AI writing is plagiarism. Because these systems are trained on vast amounts of existing text, there is a risk that they reproduce existing content, verbatim or nearly so, without proper attribution, whether by accident or because a user deliberately prompts them to. This could create legal problems for both the writer and the organization they represent, so AI writing tools should include built-in plagiarism detection.
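As a rough illustration of what a built-in plagiarism check might do, the sketch below splits text into overlapping three-word sequences (n-grams) and measures what fraction of a draft’s n-grams also appear in a known source. The function names, the n-gram length of 3, and the 0.5 flagging threshold are hypothetical choices for this example; commercial detectors search far larger indexes and use more robust fingerprinting.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Lower-case the text, keep word characters, and collect overlapping n-word sequences."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(candidate: str, source: str, n: int = 3) -> float:
    """Fraction of the candidate's n-grams that also occur in the source (0.0 to 1.0)."""
    cand = word_ngrams(candidate, n)
    if not cand:
        return 0.0
    return len(cand & word_ngrams(source, n)) / len(cand)


def flag_possible_copies(candidate: str, sources: list[str], n: int = 3,
                         threshold: float = 0.5) -> list[int]:
    """Return indices of sources whose overlap with the candidate meets the threshold."""
    return [i for i, src in enumerate(sources)
            if overlap_ratio(candidate, src, n) >= threshold]


# Hypothetical reference texts and AI-generated draft
sources = [
    "Algorithms are sets of rules or instructions executed by a computer program.",
    "Writers should disclose AI assistance to their readers.",
]
draft = "Algorithms are sets of rules or instructions that a computer follows."
print(round(overlap_ratio(draft, sources[0]), 2))  # 0.56
print(flag_possible_copies(draft, sources))        # [0]
```

Here, five of the draft’s nine three-word sequences appear in the first source, so the draft is flagged even though its ending was reworded.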

Another concern is the impact of AI-written content on employment opportunities for human writers. Many argue that AI frees up human writers to focus on more creative work, but some businesses may choose to rely entirely on automation instead of hiring writers, which could lead to job losses and economic disparities.

Additionally, there are concerns around transparency and accountability when it comes to using AI in content creation. With machines generating text at an unprecedented speed, it can be difficult to determine who is responsible for any errors or inaccuracies. Some argue that regulations should be put in place to ensure accountability, while others believe that self-regulation by developers is sufficient.

To better understand these issues, we might draw an analogy between AI writing tools and self-driving cars. Just as autonomous vehicles raise questions about who is responsible in the event of an accident, automated content creation raises questions about who owns the content produced by machines and who is accountable for its accuracy.

Creativity and Originality Concerns

One of the biggest concerns surrounding AI-generated content is its lack of creativity and originality. While machines can produce high-quality writing that meets specific criteria, they cannot replicate the unique style and voice of a human writer.

For example, AI may struggle to write an engaging blog post with a personal touch that resonates with readers. A machine may be able to provide accurate facts and figures, but it lacks the creativity and storytelling skills to make the content truly compelling.

This lack of creativity has implications for the quality of content produced by machines. While AI may be able to churn out vast quantities of text quickly, it is unlikely to produce anything truly novel or thought-provoking. This may result in a saturation of generic content that fails to engage readers or drive meaningful conversations.

On the other hand, some argue that AI can actually enhance creativity by providing writers with new ideas and perspectives. By using machines to automate routine tasks like research and fact-checking, writers have more time and mental space to focus on creative thinking and refining their own unique voice.

To better understand these issues, we might draw an analogy between AI writing tools and automated musical instruments. A machine can play notes perfectly according to a set of rules, yet it lacks the emotional depth and spontaneity of human interpretation. However, just as skilled musicians use technology to compose music in new ways, writers can benefit from the tools AI provides.

Data Privacy and Bias Issues

As AI writing becomes more prevalent, there are valid concerns about data privacy and bias. Text that users submit to AI tools may be stored or reused, and models can sometimes reproduce fragments of their training data, so sensitive information may reach unintended parties. In addition, data collected from users can inadvertently reinforce existing biases.

For example, if an AI tool is trained on a dataset with inherent gender, racial, or other biases, its output is likely to perpetuate them, leading to discrimination and unfairness in the content it creates. In 2018, Amazon scrapped an AI recruiting tool after discovering it was biased against women: trained on a decade of résumés submitted mostly by men, it had learned to downgrade résumés that included the word “women’s”.
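The mechanism behind such failures can be shown with a deliberately tiny, hypothetical example. A model that always chooses the most frequent pronoun seen alongside the word “engineer” in its training data does not merely mirror a 3:1 skew in that data; in this toy setup it turns the skew into 100% of its completions. Real language models often sample from a probability distribution rather than always taking the most likely word, so this sketch exaggerates the effect, but it shows how biased data flows into biased output. The corpus and function names are assumptions for illustration.

```python
from collections import Counter

PRONOUNS = {"he", "she", "they"}


def pronoun_counts(corpus: list[str], role: str) -> Counter:
    """Count pronouns in training sentences that mention the given role."""
    counts: Counter = Counter()
    for sentence in corpus:
        words = sentence.lower().replace(".", "").split()
        if role in words:
            counts.update(w for w in words if w in PRONOUNS)
    return counts


def greedy_pronoun(counts: Counter) -> str:
    """Always choose the single most frequent pronoun, like greedy decoding."""
    return counts.most_common(1)[0][0]


# Hypothetical training data, deliberately skewed 3:1
corpus = [
    "The engineer said he would fix it.",
    "The engineer said he was busy.",
    "The engineer said he had a plan.",
    "The engineer said she would review it.",
]
counts = pronoun_counts(corpus, "engineer")
share_in_data = counts["he"] / sum(counts.values())
completions = [greedy_pronoun(counts) for _ in range(10)]
share_in_output = completions.count("he") / len(completions)
print(f"'he' in training data: {share_in_data:.0%}")  # 'he' in training data: 75%
print(f"'he' in completions: {share_in_output:.0%}")  # 'he' in completions: 100%
```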

In addition, the use of personal data raises ethical concerns about privacy. The data collected by AI tools have the potential to be misused or hacked by third parties, thereby compromising users’ confidential information. Robust privacy protections need to be in place to ensure that these tools do not infringe on individuals’ rights.

One counterargument is that many modern AI tools follow strict data privacy and security protocols: they anonymize user data and apply rigorous security measures to prevent hacking or misuse. However, as with any new technology, it is important to stay vigilant about potential misuse.

Mitigating Risks and Ensuring Transparency

To mitigate risks and ensure transparency in the use of AI writing services, certain steps should be taken:

First and foremost, developers must be transparent both about how they use personal information and about any algorithmic logic that may affect AI-generated text. Users must know who controls their data and how it is used, and developers must disclose how they collect and process user-generated content.

Imagine walking into a coffee shop whose owner claims the breakfast sandwiches are gluten-free but will not explain how. As a customer, you might hesitate to eat there. The same applies to AI writing tools: if developers do not disclose how they achieve their results, users will hesitate to trust them.

Another essential step is to ensure that the datasets used to train AI writing tools are representative and balanced. This means collecting data from different sources and taking into account various perspectives. With representative datasets, there is a lower chance of perpetuating any pre-existing biases in the output generated by AI writing tools.

In the same way that creating fair and unbiased exams requires testing all students on the same material, unbiased AI writing tools need access to diverse datasets that represent different groups.
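One practical way to act on this is to audit a training set before use. The sketch below, in which the source-type labels, the expected groups, and the 20% minimum share are all hypothetical, reports each expected group’s share of the dataset and flags groups that fall below the minimum, including groups that are missing entirely.

```python
from collections import Counter


def representation_report(source_labels: list[str], expected_groups: list[str],
                          min_share: float = 0.2) -> tuple[dict[str, float], list[str]]:
    """Compute each expected group's share of the dataset and list those below min_share.

    Groups that never appear get a share of 0.0, so missing perspectives are caught too.
    """
    counts = Counter(source_labels)
    total = len(source_labels)
    shares = {g: (counts[g] / total if total else 0.0) for g in expected_groups}
    underrepresented = [g for g, share in shares.items() if share < min_share]
    return shares, underrepresented


# Hypothetical labels: the source type of each document in a training set
labels = ["news"] * 6 + ["forums"] * 3 + ["academic"] * 1
shares, flagged = representation_report(labels, ["news", "forums", "academic", "fiction"])
print(shares)   # {'news': 0.6, 'forums': 0.3, 'academic': 0.1, 'fiction': 0.0}
print(flagged)  # ['academic', 'fiction']
```

A check like this only measures the categories someone thought to label; it cannot detect bias within a category, so it complements rather than replaces human review.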

Finally, there must be accountability for the actions of AI tools. Developers should provide clear guidelines for how users can hold them accountable for any outcomes of their algorithms. Independent third-party audits can help ensure that these guidelines are followed and provide transparency for regulators.

These steps towards increased transparency, accountability and unbiased data collection will go a long way in mitigating risks associated with AI writing.

Developing Trustworthy AI Writing Tools

The development of AI writing tools raises many ethical considerations within the industry. One of the biggest challenges is creating AI that can be trusted to produce quality content. Developers must ensure their tools create original, unique and high-quality content while avoiding plagiarism, promoting accurate information and avoiding bias.

To achieve this, AI writing tools must be built on strong algorithms and trained on a robust dataset containing diverse, high-quality information. Gathering enough such data takes considerable time and money. Skipping that work may speed up development, but developers should prioritize data quality over quick development cycles when building these systems.

Another factor is trustworthiness with regard to accuracy and reliability. Algorithmic language models can struggle to account for every nuance of language, context, and cultural sensitivity, so they should undergo rigorous testing of their accuracy and precision before being released to the market.

For instance, On-Page.ai’s Stealth Writer uses an artificial neural network (ANN) in its back end. The ANN has been trained on large datasets of publicly available text, which is intended to improve the accuracy of the articles it produces.

Developers can also increase transparency by releasing their source code as open-source software, so that developers around the globe can see exactly which versions they are working with. Doing so, however, can conflict with protecting trade secrets.

As artificial intelligence advances, explaining how an algorithm reached its output becomes even more critical for validating its work.

At a minimum, developers should disclose whether content was human-written or AI-assisted (through machine learning or other methods). Detailed explanations of the underlying data sources, the training method, and the algorithm used to structure the content are also highly relevant.

Many AI writing technology experts oppose such transparency measures, arguing that keeping code and data private is the industry standard for safeguarding companies’ intellectual property, since publishing source code or training datasets could leak trade secrets.

However, it could be argued that intellectual property can be adequately protected at various stages of the development cycle while still giving potential customers enough transparency to evaluate this type of technology.

Buying AI writing technology without that transparency is like buying a car without asking how it works or whether it harms the environment. That information is essential because it gives consumers assurance about the quality they are paying for.
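The minimum disclosure discussed in this section (whether content is human-written or AI-assisted, plus the data sources, training method, and algorithm) could be published as a simple structured label. The sketch below is a hypothetical format, not an existing standard; the class names, field names, and example values are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Authorship(Enum):
    HUMAN_WRITTEN = "human-written"
    AI_ASSISTED = "AI-assisted"


@dataclass(frozen=True)
class ContentDisclosure:
    """Hypothetical minimum disclosure attached to a published piece of content."""
    authorship: Authorship
    data_sources: tuple[str, ...]
    training_method: str
    algorithm: str

    def render(self) -> str:
        """Produce a one-line, reader-facing disclosure statement."""
        sources = ", ".join(self.data_sources) or "not disclosed"
        return (f"This article is {self.authorship.value}. "
                f"Algorithm: {self.algorithm}; training method: {self.training_method}; "
                f"data sources: {sources}.")


# Hypothetical example values
label = ContentDisclosure(
    authorship=Authorship.AI_ASSISTED,
    data_sources=("public web text", "licensed news archive"),
    training_method="supervised fine-tuning",
    algorithm="transformer language model",
)
print(label.render())
```

Because the label is structured data rather than free text, a publisher could render it for readers and also expose it to auditors or regulators in the same form.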

The Future of AI Writing and Its Impact on Society

As AI writing continues to evolve, its impact on society will grow. On one hand, freelancers and people who write articles for ad revenue face intense competition from a growing volume of cheap, automated content. Writers will need to adapt by using AI as a complementary tool while finding new ways to add value in this emerging field.

On the other hand, good AI writing tools could make content more accessible in lesser-known languages around the world. Context-aware translation has typically required a costly investment in human translators and interpreters, and AI language models could make it available far more widely.

The implications of AI writing also extend beyond content creation, and copyright holders may face new challenges. The law may soon have to decide who owns an article, advertising campaign, or slogan generated by algorithms trained on vast amounts of existing data, and how such output differs from an original work crafted with creative skill and hard work.

AI-generated text in political campaigns and on social media also remains under scrutiny, because it can be used to misrepresent facts or spread misinformation quickly.

On-Page.ai’s Stealth Writer is one tool that can speed up content creation. A writer can enter a niche category along with details about where they want their articles to rank, and the tool automatically generates SEO-relevant articles for that category.

Critics say AI-generated content raises ethical concerns, particularly about accountability. Without tight regulation, AI-created work may be published without any disclosure of how it was produced.

Lawyers and journalists answer for their reputations, doctors to their patients, and authors to their readers. For AI-generated writing, transparency, clear disclosure of usage rights, and safeguards against plagiarism remain the principal factors in sustaining “quality” content over time.

Overall, trustworthy AI writing tools will continue to lead the way in producing quality content at scale while guarding against privacy breaches and ensuring the accuracy of the information they present. As the tools develop, regulations will likely evolve to maintain adequate checks and balances on the ethics of AI-driven content creation. We encourage you to try a premium AI writer such as the On-Page Stealth Writer and decide for yourself.

Responses to Common Questions

What is the potential for AI to generate biased or discriminatory content?

The potential for AI to generate biased or discriminatory content is a significant concern that cannot be ignored. Despite the seemingly impartial nature of a machine, AI algorithms are only as fair and unbiased as the data they are trained on. If the training data contains bias or discrimination, AI systems are likely to perpetuate it.

A glaring example of this is Amazon’s AI recruiting tool, which favored male candidates over women due to the biased data it was trained on. Another instance is the facial recognition technology used by law enforcement agencies, which has been found to disproportionately misidentify people of color.

According to a recent study published in Science Advances, language models like GPT-3 (Generative Pre-trained Transformer 3) were found to have “stereotypical biases” towards race and gender when generating text. This further underscores the need for ethical considerations when developing AI writing systems.

Ignoring or downplaying these biases can lead to severe consequences, including perpetuating systemic inequalities and reinforcing existing power structures. It is crucial for researchers and developers to acknowledge these issues and take concrete steps towards mitigating them through diverse training datasets and ongoing monitoring.

In conclusion, while AI writing systems offer incredible opportunities, they also come with ethical responsibilities. We must actively work toward fairer, more transparent algorithms that avoid perpetuating harmful biases, so that we can realize their full potential while upholding social justice.

Are there any notable examples of ethical concerns arising from the use of AI in writing?

Yes, there are several notable examples of ethical concerns arising from the use of AI in writing. The first, and perhaps most obvious, is ownership and copyright. As AI becomes more advanced, it is increasingly capable of generating content that closely resembles human writing and may be indistinguishable from it. This raises questions about who owns the rights to this content and who should be credited for its creation.

In addition to these legal concerns, there are significant ethical concerns about the potential for AI-generated content to spread misinformation or misrepresent facts. A study by OpenAI found that its language model GPT-2 could generate text that convincingly passed as human-written, including fake news articles and propaganda.

Another ethical concern is the lack of transparency surrounding the use of AI in writing. Without clear disclosure, readers might wrongly believe that content was produced entirely by humans, which could erode trust in news and media outlets.

Furthermore, there are concerns about bias in AI-generated content, whether it is perpetuating stereotypes or leading to discriminatory outcomes. While some progress has been made in addressing these issues (for example, by training AI on diverse sets of data), much work remains to be done.

Overall, while there are many exciting possibilities presented by AI in writing, we must approach these tools with a critical eye and an understanding of their limitations and potential pitfalls.

How is the use of AI in writing affecting the job market for human writers?

The rise of AI in writing has undoubtedly affected the job market for human writers. Gartner predicted that by 2018, 20% of all business content would be authored by machines rather than humans (Perna, 2019), a sign that businesses are increasingly turning to AI for content creation instead of employing people.

Another way in which AI is affecting the job market for human writers is through automation. Many writing tasks, such as product descriptions or news articles, can now be automated using language models like GPT-3, which can produce high-quality writing at scale in just a few seconds (Brown et al., 2020). As a result, companies may choose to use AI to complete these tasks instead of employing human writers.

However, it is important to note that AI cannot entirely replace human creativity and critical thinking. While it may excel at producing straightforward content, it lacks the creative spark and empathy that human writers bring to their work. Additionally, there will always be a demand for specialized knowledge and expertise that only human writers can provide.

In conclusion, the use of AI in writing has impacted the job market for human writers in significant ways. However, while some jobs may become automated, there will always be a demand for skilled human writers who can offer something unique and valuable that machines cannot.

How does AI-generated content impact copyright laws and intellectual property?

The rise of AI-generated content has raised several ethical concerns, including its impact on copyright law and intellectual property. Copyright law generally protects works created by humans, and it is unclear whether AI-generated content can qualify for that protection.

By 2023, AI language models had advanced significantly: models such as GPT-4 can produce high-quality content that mimics human writing styles. Some argue that because AI-generated content is produced by algorithms rather than through human authorship, it should not be eligible for copyright protection.

Others believe that because developers trained AI models on pre-existing texts, they may have infringed other authors’ copyrights in the process.

While there are no clear legal precedents in place today about whether or not AI-generated content can be copyrighted, it is expected that lawmakers will begin addressing this issue soon. Until then, businesses and individuals need to consider how they will navigate these untested legal waters.

It is worth noting here that much of the debate surrounding AI and copyright law focuses on artistic expressions such as songs or novels; however, it is expected that the legal implications of AI-generated content will become more significant as more industries adopt AI technologies.

What regulations or guidelines currently exist to address ethical concerns in AI writing?

The regulation of AI writing is still a relatively uncharted territory. However, several guidelines and principles have been proposed to address ethical concerns in the field.

One of the most notable initiatives in this regard is the Ethics Guidelines for Trustworthy AI, developed by the European Commission’s High-Level Expert Group on Artificial Intelligence (AI HLEG). The guidelines emphasize the importance of transparency, accountability, privacy, and non-discrimination in AI development, deployment, and use.

In addition, several organizations and industry groups have developed their own ethical frameworks to guide the development and use of AI technology. For example, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has developed a set of principles that aim to ensure that AI systems are aligned with human values and interests.

However, these efforts are still largely voluntary and lack legal enforcement. As of 2023, there are no specific regulations in place to govern AI writing. This regulatory gap highlights the need for policymakers to develop clear guidelines and standards to mitigate potential ethical risks associated with the technology.

In conclusion, while there are some guiding principles in place to address ethical concerns in AI development more broadly, specific regulations or guidelines for AI writing do not yet exist. It is important for policymakers to prioritize this issue as AI technologies continue to advance at breakneck speed.