Imagine a future where your voice is the key to endless possibilities, where AI and speech recognition blend seamlessly to reshape our digital experience. That future is already here, and it is changing the world around us in remarkable ways. Join us as we explore how cutting-edge, AI-powered speech recognition tools are becoming a powerful asset for SEO and transforming the way information reaches our fingertips!

Advanced speech recognition solutions use AI and machine learning to improve accuracy over time by analyzing patterns and learning from each interaction. Through advancements in natural language processing, deep learning, and neural networks, AI is able to understand more nuanced aspects of human speech, making speech recognition technology more accurate and customizable than ever before.

AI-driven Speech Recognition Advances

One of the most exciting AI developments of recent years is the spread of speech recognition tools. In the past, voice recognition was slow and often inaccurate, which made dictating or transcribing text frustrating and time-consuming. With advances in deep learning and natural language processing (NLP), however, sophisticated algorithms can now recognize individual words and phrases with accuracy approaching that of human listeners, at least for some languages.

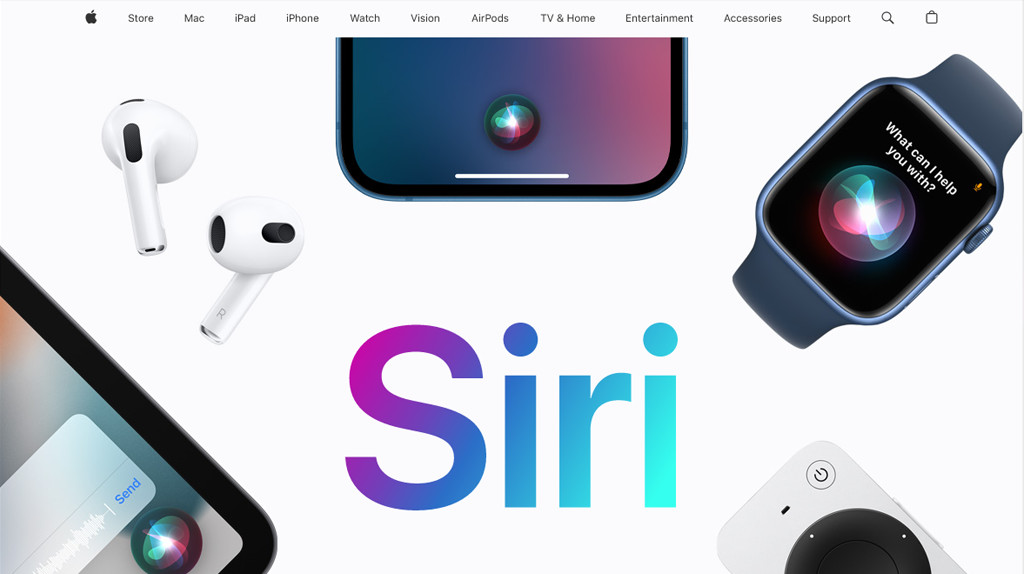

Smartphones are a striking example of these advances: they now ship with built-in virtual assistants that understand and act on spoken input. The most popular voice assistants today include Apple's Siri, Google Assistant, and Amazon's Alexa. These assistants recognize a voice query within a few seconds and then answer it or carry out the request with little delay or user intervention.

Many other applications are also using these advances to improve human-machine interaction across industries, including healthcare, finance, call centers, customer support, and smart home devices designed to make domestic life easier.

Just as hearing aids amplify weak sounds to help people with hearing loss understand speech, speech recognition technology amplifies what a person can do with their voice, letting them create written documents simply by speaking.

These advances are made possible by machine learning techniques, which we explore next.

- Speech recognition has advanced significantly thanks to machine learning, enabling virtual assistants and other applications to understand and respond to voice queries accurately. This is transforming how we interact with technology and improving efficiency in industries such as healthcare, finance, customer support, and smart home devices. Just as hearing aids amplify sound, speech recognition amplifies the voice, letting people dictate documents with ease. Further advances in machine learning promise even more possibilities in the future.

Machine Learning Techniques

Machine learning sits at the core of most modern AI technologies. It gives machines the ability to analyze complex data sets and draw the insights needed for future decisions. In speech recognition, systems are trained on large amounts of labeled audio collected from many sources, learning to recognize the acoustic patterns of speech across many different speakers.

Speech recognition is also used in medicine to create electronic health records. Doctors can dictate clinical notes that speech recognition software transcribes into written text, which greatly speeds up documentation. Machine learning also helps the software distinguish medical terminology from everyday language.

Moreover, machine learning lets speech recognition systems improve over time by analyzing speech from many speakers across diverse fields, which leads to greater accuracy on complex speech patterns and on nuances such as accents and context-specific vocabulary.

One challenge for machine learning is extracting appropriate features from raw audio, which accurate models need for demanding tasks such as speaker identification and language identification. Nonetheless, models are continually being refined as more powerful learning algorithms and larger, more diverse training data sets become available.
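To make "feature extraction" concrete, here is a minimal sketch of the first step most speech front ends share: slicing audio into short, overlapping frames and computing a few numbers per frame. It assumes a 16 kHz mono signal given as a list of floats, and uses 25 ms frames with a 10 ms hop (a common but assumed choice). The function name and the two features (log energy and zero-crossing rate) are illustrative only; real systems typically compute richer features such as MFCCs or log-mel filterbank energies.

```python
import math

def frame_features(samples, sample_rate=16000, frame_ms=25, hop_ms=10):
    """Return (log_energy, zero_crossing_rate) for each overlapping frame of a mono signal."""
    frame_len = int(sample_rate * frame_ms / 1000)  # 400 samples at 16 kHz
    hop_len = int(sample_rate * hop_ms / 1000)      # 160 samples at 16 kHz
    # Hamming window tapers frame edges to reduce spectral leakage
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    features = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frame = samples[start:start + frame_len]
        energy = sum((x * w) ** 2 for x, w in zip(frame, window))
        log_energy = math.log(energy + 1e-10)  # small floor avoids log(0) on silence
        # Fraction of adjacent sample pairs whose sign differs (high for noisy, hiss-like sounds)
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
        zcr = crossings / (frame_len - 1)
        features.append((log_energy, zcr))
    return features
```

With these defaults, one second of 16 kHz audio yields 98 frames; a trained model would then consume these per-frame vectors rather than the raw waveform.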

Accurate Transcription through AI

Transcription is the process of converting spoken words into written text. Until recently, human transcriptionists were necessary to accurately transcribe audio recordings, but with the advancements in AI technology, machines are now able to perform this task with high accuracy rates. Automatic speech recognition (ASR) technology powered by AI is revolutionizing the world of transcription, making it faster, more efficient and cheaper than ever before.

The legal industry is one example of where AI-powered transcription is useful: lawyers regularly need transcripts of depositions, meetings, and court proceedings, traditionally produced by hand. This work is not only time-consuming and tedious but also carries a high risk of error. By using ASR technology, lawyers can significantly reduce their workload while still obtaining accurate transcripts for future reference.

The accuracy of speech recognition software has improved greatly in recent years thanks to machine learning techniques such as deep neural networks. These techniques let AI models learn from extensive data sets, which produces more accurate transcripts. Human review is still needed for quality control, but overall accuracy can reach around 95%.

Despite these impressive gains in transcription accuracy, ASR still struggles with accents, dialects, and the English of non-native speakers. Many speech recognition models have been trained predominantly on American English, which often leads to errors when transcribing other varieties of English or other languages. This highlights the need for continued development in this area.

Combining speech and language technologies through machine learning resembles the way people learn languages: by listening and repetition. When we learn a new language, we first recognize individual sounds and only later understand words and full sentences. Similarly, machine learning algorithms are exposed to large volumes of data and learn patterns in speech before they can transcribe spoken language with high accuracy.

- The global market for speech recognition technology is predicted to reach USD 24.9 billion by 2025, as companies increasingly adopt AI-powered solutions.

- By 2023, it’s estimated that customers will prefer using speech interfaces to initiate 70% of self-service customer interactions, up from 40% in 2019.

- Research shows that advanced AI-driven speech recognition systems can achieve a word error rate as low as 5%, roughly on par with professional human transcribers on some benchmarks (Microsoft Research).
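For reference, word error rate is conventionally computed by aligning a system's transcript with a reference transcript and counting substitutions S, deletions D, and insertions I relative to the N words in the reference. Under the common convention that word accuracy is 1 − WER, a 5% WER corresponds to about 95% word accuracy. The counts in the second line are purely illustrative, not taken from the research cited above.

```latex
\begin{aligned}
\mathrm{WER} &= \frac{S + D + I}{N}, \qquad \text{word accuracy} = 1 - \mathrm{WER} \\
\text{e.g. } S = 3,\ D = 1,\ I = 1,\ N = 100: \quad
\mathrm{WER} &= \frac{3 + 1 + 1}{100} = 0.05 = 5\%, \qquad \text{word accuracy} = 95\%
\end{aligned}
```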

Enhancing Customer Experiences

One of the most exciting applications of AI-powered speech recognition is improving customer experiences. Whether through virtual assistants, call centers, or customizable voice interfaces, these technologies can streamline service, resolve customer queries faster, and reduce hold times.

A well-known application of this technology is Apple’s Siri, which uses speech recognition and natural language processing (NLP) algorithms to understand human speech. Customers interact with Siri using their voice, rather than having to navigate menus or buttons on their phone’s screen. This offers a frictionless experience for users where they can easily order products, check the weather, or set reminders without lifting a finger.

Speech-based interfaces also make it possible to personalize services using historical data captured across channels such as social media interactions, emails, and text messages. This lets businesses tailor their offerings to each customer's needs and preferences while cutting repetitive steps such as address verification and shortening hold times.

Despite the potential benefits of AI-powered customer service, the challenges should not be overlooked. For example, there are concerns about data privacy and security, particularly when sensitive customer data is stored by third-party vendors. There are also concerns about how these technologies will affect employment across industries. As AI evolves, businesses and policymakers need to work together to minimize any negative impacts.

Using AI-powered speech recognition in customer service is similar to using personal shoppers in the fashion industry. Just as personal shoppers use their knowledge of products and style to offer personalized recommendations to customers, AI-powered virtual assistants leverage data analytics and machine learning algorithms to provide tailored services for each user.

Virtual Assistants and Call Centers

Virtual assistants and chatbots have become an integral part of the modern customer experience. Trained with AI, these assistants can understand customers' requests and respond accurately in real time. The technology gives businesses a great opportunity to automate customer service while still responding to customers quickly and efficiently.

Call centers are one industry that has widely adopted virtual assistants. With AI-driven speech recognition, call centers can improve efficiency and productivity while reducing the time customers spend waiting in queues. An AI-powered voice assistant can converse with customers in natural language and answer common questions without human intervention, freeing call center agents to focus on complex issues that require human attention.
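The "answer common questions, escalate the rest" pattern can be sketched in a few lines once speech recognition has turned the caller's words into text. Production assistants use trained intent classifiers; this minimal sketch substitutes simple keyword overlap. The intents, keywords, canned answers, and the `min_overlap` threshold are all hypothetical.

```python
import re

# Hypothetical FAQ intents: each maps to (trigger keywords, canned answer)
FAQ_INTENTS = {
    "opening_hours": ({"open", "hours", "close", "closing"},
                      "We are open 9am to 5pm, Monday to Friday."),
    "reset_password": ({"password", "reset", "forgot", "login"},
                       "You can reset your password from the sign-in page."),
    "order_status": ({"order", "status", "delivery", "shipped", "track"},
                     "You can track your order from the link in your confirmation email."),
}

def route_transcript(transcript, min_overlap=2):
    """Answer a transcribed query from the FAQ, or escalate it to a human agent."""
    words = set(re.findall(r"[a-z']+", transcript.lower()))
    best_intent, best_score = None, 0
    for intent, (keywords, _answer) in FAQ_INTENTS.items():
        score = len(words & keywords)  # number of trigger keywords the caller used
        if score > best_score:
            best_intent, best_score = intent, score
    if best_score < min_overlap:
        return ("human_agent", None)  # low confidence: hand the call to a person
    return (best_intent, FAQ_INTENTS[best_intent][1])
```

Raising `min_overlap` sends more calls to people and fewer wrong automated answers to customers; where to set it is a business trade-off, not a technical constant.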

Virtual assistants are not limited to call centers. For instance, one clothing brand added an AI-driven personal shopping assistant to its website that could respond to voice commands. The assistant helped customers find clothes based on style, preferences, and budget. Customers could simply ask for help browsing the store's new collection or get suggestions based on items they liked in previous purchases.

With AI-powered virtual assistants, businesses can recreate a natural conversational flow between a customer and an enterprise system, which can reduce operational costs while improving the overall customer experience.

However, some critics argue that replacing human agents with virtual assistants might lead to less personalized customer interactions. While AI-enabled virtual assistants can handle straightforward tasks automatically, they still lack the emotional intelligence of human agents.

Accessibility and Inclusive Technologies

Speech recognition technology has created many opportunities for people with disabilities by letting them communicate effectively despite physical constraints. It gives them a channel for interacting with computers and virtual assistants using their own voices.

Integrating speech recognition into assistive devices such as hearing aids and speech synthesizers has significantly improved the lives of people with disabilities, enabling many who were once excluded to take part in a wide range of activities.

For example, speech recognition helps people with visual impairments control their smart homes with voice commands: they can switch on lights, adjust the thermostat, and control the TV, among other things.
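After the recognizer has produced text, the assistant still has to turn phrases like these into device actions. This minimal sketch assumes a handful of fixed phrasings and hypothetical device names ("lights", "tv", "thermostat"); real assistants use trained intent and slot-filling models rather than regular expressions.

```python
import re

def _device(name):
    """Normalize spoken device names to hypothetical device IDs."""
    return "lights" if name.startswith("light") else "tv"

def parse_command(transcript):
    """Map a transcribed command to (device, action, value), or None if unrecognized."""
    text = transcript.lower()
    # "turn on the lights", "switch off the tv"
    m = re.search(r"\b(?:turn|switch) (on|off) the (lights?|tv)\b", text)
    if m:
        return (_device(m.group(2)), m.group(1), None)
    # "turn the tv off", "switch the light on"
    m = re.search(r"\b(?:turn|switch) the (lights?|tv) (on|off)\b", text)
    if m:
        return (_device(m.group(1)), m.group(2), None)
    # "set the thermostat to 21 degrees"
    m = re.search(r"\bset the thermostat to (\d+(?:\.\d+)?)", text)
    if m:
        return ("thermostat", "set", float(m.group(1)))
    return None  # unrecognized: a real assistant would ask the user to rephrase
```

For example, `parse_command("Please turn the TV off.")` returns `("tv", "off", None)`.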

Additionally, speech recognition is increasingly used in e-learning platforms to help students with special needs interact with computer systems more effectively, so that they can study and learn alongside their peers with fewer barriers.

Much as ramps and elevators let people with physical disabilities enter buildings and move between floors, speech recognition technology provides access that helps bridge the gap between people with disabilities and everyone else.

Nonetheless, some critics argue that speech recognition technology comes with its own set of issues, most notably a high word error rate (WER). Although WER has declined over the years thanks to improved machine learning techniques, significant challenges remain for users with heavy accents, non-standard dialects, or limited vocabularies.
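WER counts the word substitutions, deletions, and insertions needed to turn a system's transcript into a human reference transcript, divided by the number of words in the reference. It is usually computed with a word-level edit distance, as in this minimal sketch. The function name is illustrative, and the sketch only lowercases and splits on whitespace; dedicated scoring tools typically apply more text normalization.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the first i-1 reference words and first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion: reference word missing
                          curr[j - 1] + 1,     # insertion: extra hypothesis word
                          prev[j - 1] + cost)  # substitution (or match if cost is 0)
        prev = curr
    return prev[-1] / len(ref)
```

For instance, `word_error_rate("the cat sat on the mat", "the cat sit on mat")` gives 2/6 (one substitution, one deletion). Because insertions count too, WER can exceed 100% when a transcript contains many extra words.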

Industries Benefiting from AI & Speech Recognition

The impact of speech recognition and AI extends beyond personal devices into industries where it is reshaping business practices. Thanks to recent advances in machine learning and natural language processing, organizations in healthcare, finance, telecommunications, and beyond are taking full advantage of the technology.

Mortgage lending is one industry that has benefited from AI. By implementing speech recognition tools, lenders can automate transcription, shorten processing times, and reduce costs. Speech technology also helps lenders comply with federal regulations by providing an accurate record of conversations between lenders and borrowers.

In financial services, automatic speech recognition (ASR) is transforming call center operations. ASR provides real-time access to customer information, enabling faster issue resolution and a more streamlined support experience. Besides improving efficiency and call throughput, ASR can also enable advanced features such as sentiment analysis and categorizing calls by their main topic.

Some expect AI-powered virtual assistants to replace human employees across industries. However, while virtual assistants automate many tasks, they cannot yet replace the human touch or offer the empathy and customized service of a human employee. Human agents give customers personalized recommendations, resolve issues through the kind of nuanced back-and-forth that machines struggle with, and build the relationships that customers value.

Healthcare, Finance, and Telecommunications

Healthcare increasingly relies on natural language processing in clinical trials, for example to record side effects or complications that patients report from medications. Healthcare providers also use AI-powered transcription services to convert audio recordings of patient interactions into typed notes on patient histories for easy reference during follow-up appointments. ASR tools can improve care delivery by giving doctors a detailed record of each appointment to review afterward.

The finance industry has embraced AI-driven customer support tools and also uses speech transcription services to document multi-party calls, such as deal negotiations, more thoroughly. This enables immediate feedback after the call, reduces back-and-forth written communication, and provides a complete record of the conversation.

In telecommunications, AI-powered recommendations provide real-time solutions to customers experiencing technical difficulties or service issues, much as an on-demand support technician would. Speech recognition remains a crucial part of telephony services, which businesses rely on for both internal and external communication.

Telecommunications companies have also used ASR to transcribe conversations during product development and trials, improving products, minimizing errors, and enabling quick resolution of any product defects discovered.

For more articles about AI and SEO, visit On-page.ai

Common Questions and Answers

What are the benefits of using AI in speech recognition?

The benefits of using AI in speech recognition are numerous. First, AI-powered speech recognition systems achieve higher accuracy than traditional models. For instance, Google has reported a word error rate of just 4.9% for its speech recognition system. Second, AI-assisted technologies can significantly reduce operational costs for businesses while delivering faster turnaround times and higher-quality output.

AI-powered speech recognition also empowers people with disabilities, helping them communicate more effectively, access educational materials, and enjoy a better overall quality of life. In addition, AI assistants such as Siri and Alexa have changed the way we communicate by enabling hands-free operation in daily life: they can search the internet, set reminders, and play music without us having to leave our seats.

In terms of productivity gains, a recent study projected that global businesses could save an estimated $1 trillion annually by using AI-powered speech recognition technologies. The savings come mainly from more efficient communication within enterprises and a significant reduction in time spent on non-core tasks.

In short, AI-powered speech recognition can drive down operational costs while improving accuracy and boosting productivity, so companies across many sectors stand to gain from embracing it.

How can businesses and industries benefit from utilizing AI-powered speech recognition?

Businesses and industries can benefit greatly from AI-powered speech recognition. By implementing it, companies can streamline their workflows, automate repetitive tasks, reduce staffing costs, and significantly enhance productivity.

According to a MarketsandMarkets report, the market for speech and voice recognition is expected to grow to $26.79 billion by 2023, driven by sectors including healthcare, banking, retail, and education. One significant benefit of AI-powered speech recognition is its ability to improve customer experience and satisfaction. With chatbots or virtual assistants that use natural language processing, businesses can provide 24/7 support to their customers.

In healthcare, AI-powered speech recognition lets physicians spend more time on patients and less on documentation. Speech recognition software transcribes patient records automatically in real time, and practitioners can access the patient's information from mobile devices or desktops.

Moreover, in education, teachers can use this technology to reduce their administrative workload while giving students prompt feedback. When AI-powered speech recognition is integrated into the classroom, it can also give teachers additional insight into academic performance, for example through a student's tone and expression during discussions.

Ultimately, integrating AI-powered speech recognition can give companies in any industry an efficiency edge over competitors. The potential benefits are numerous, helping companies meet their business objectives while improving work-life balance for employees across many professions.

How does AI improve speech recognition technology?

AI improves speech recognition technology in multiple ways. One of the key benefits of AI is that it can help speech recognition systems learn and adapt based on user feedback, making them more accurate over time. Additionally, AI can process vast amounts of data and identify patterns that might not be noticeable to a human reviewer. This ability allows researchers to train speech recognition models using large datasets, which helps improve their accuracy.
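One simple way a system can learn from user feedback is to update a language model with transcripts the user has corrected, then use it to rescore the recognizer's list of candidate transcriptions (its "N-best list"). This minimal sketch assumes the recognizer returns `(text, acoustic_log_score)` pairs; the class and function names, the scores, and the `lm_weight` parameter are hypothetical, and the add-one-smoothed bigram model stands in for the much larger neural language models real systems use.

```python
import math
from collections import Counter

class FeedbackBigramModel:
    """Add-one-smoothed bigram language model updated from user-corrected transcripts."""

    def __init__(self):
        self.context_counts = Counter()  # how often each word precedes another word
        self.bigram_counts = Counter()
        self.vocab = set()

    def learn(self, corrected_transcript):
        words = ["<s>"] + corrected_transcript.lower().split()
        self.vocab.update(words[1:])
        self.context_counts.update(words[:-1])
        self.bigram_counts.update(zip(words, words[1:]))

    def log_prob(self, sentence):
        words = ["<s>"] + sentence.lower().split()
        v = len(self.vocab) + 1  # +1 reserves probability mass for unseen words
        return sum(
            math.log((self.bigram_counts[(a, b)] + 1) / (self.context_counts[a] + v))
            for a, b in zip(words, words[1:])
        )

def rescore(nbest, lm, lm_weight=1.0):
    """Pick the (text, acoustic_log_score) hypothesis with the best combined score."""
    return max(nbest, key=lambda hyp: hyp[1] + lm_weight * lm.log_prob(hyp[0]))
```

With `nbest = [("patient has hyper tension", -10.0), ("patient has hypertension", -10.5)]`, an untrained model leaves the acoustically preferred "hyper tension" on top; after `learn("patient has hypertension")` is called twice, rescoring prefers the corrected phrasing.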

Another benefit of AI is that it can help reduce errors caused by background noise and by differences in accents and dialects. For example, Google has reported that its AI-powered speech recognition system reached a word error rate of 4.9%, down from 8.5% previously. This improvement was largely due to advances in machine learning, which allowed the system to better handle complex acoustic environments.

In conclusion, AI has the potential to revolutionize speech recognition by improving accuracy, reducing errors caused by noise and speaker variation, and enabling systems to learn and adapt over time. With continued advances in machine learning and natural language processing, we can expect even more impressive gains in the years to come.

Are there any drawbacks or limitations to using AI in speech recognition?

Yes, there are still some drawbacks and limitations to using AI in speech recognition technology.

Firstly, AI depends heavily on data, so the accuracy of speech recognition software is affected by the availability and quality of its training data. According to a study by the voice technology company Cerence, language models can be biased by imbalanced training data, resulting in lower accuracy for certain groups of users.

Secondly, AI-powered speech recognition still struggles to understand natural language and context. It may misinterpret, or miss entirely, colloquialisms, sarcasm, and other nuances that human listeners easily pick up on. A report by Stanford University stated that “Speech Recognition Technology performs best with large vocabularies under practical speaking conditions… However, even state-of-the-art systems handle colloquialisms and conversations poorly.”

Lastly, privacy remains a concern. There have been instances where smart speakers recorded conversations without users' knowledge and sent them back to manufacturers for analysis; in 2019, for example, it emerged that contractors for major voice assistant providers were reviewing some of these recordings. As many as 64% of consumers say they are concerned about privacy when using this technology.

To conclude, while AI-powered speech recognition technology has made significant progress in recent years, there are still some limitations and challenges that need to be addressed before it can reach its full potential.

What advancements have been made in AI-powered speech recognition in recent years?

In recent years, AI-powered speech recognition technology has made incredible advancements, changing the way we interact with machines and devices. According to a report by Tractica, the global market for voice and speech recognition technology is expected to grow from $1.1 billion in 2016 to $6.9 billion by 2025.

One major advancement is the deep learning-based approach to speech recognition, which has significantly improved accuracy and natural language processing. These models handle conversational language far better than earlier systems, leading to more engaging interactions between humans and machines.

Another notable advancement is the integration of AI-powered speech recognition into products such as Amazon’s Echo and Google Home devices which have become increasingly popular among consumers. In fact, according to eMarketer, about 82.2 million people in the US alone are predicted to use a smart speaker this year.

Overall, the advancements made in AI-powered speech recognition have enabled more seamless communication between humans and machines with a growing abundance of applications across various industries including healthcare, automotive, education, and entertainment.