Picture this: you’re working on a crucial project, with the deadline inching closer by the minute. You need high-quality, relevant content—and you need it fast. That’s where artificial intelligence (AI) swoops in like your personal superhero, generating impactful text in the blink of an eye. But how does AI achieve this seemingly magical feat? It all comes down to the power of natural language processing. Join us as we unravel the secrets behind AI-generated text and discover its unparalleled capacity for revolutionizing the way we create and consume content. Say goodbye to writer’s block and hello to AI-assisted brilliance!

AI generates text using a combination of natural language processing (NLP) and natural language generation (NLG) techniques. NLP analyzes existing text data to understand language patterns, while NLG creates new content by generating new sentences and phrases based on the learned patterns. Advanced algorithms and deep learning models are used to optimize the output of generated text, resulting in coherent, grammatically correct, and contextually appropriate content.

Overview of AI Text Generation

Artificial intelligence has revolutionized the world of text generation, making it possible for machines to generate human-like language that is coherent, relevant, and sometimes even indistinguishable from text written by humans. AI-based text generation offers multiple advantages, from cost savings and improved efficiency to personalized content and better-written copy. However, there are also limitations to what AI-based text generation can achieve in terms of creativity, ethical considerations, and accuracy.

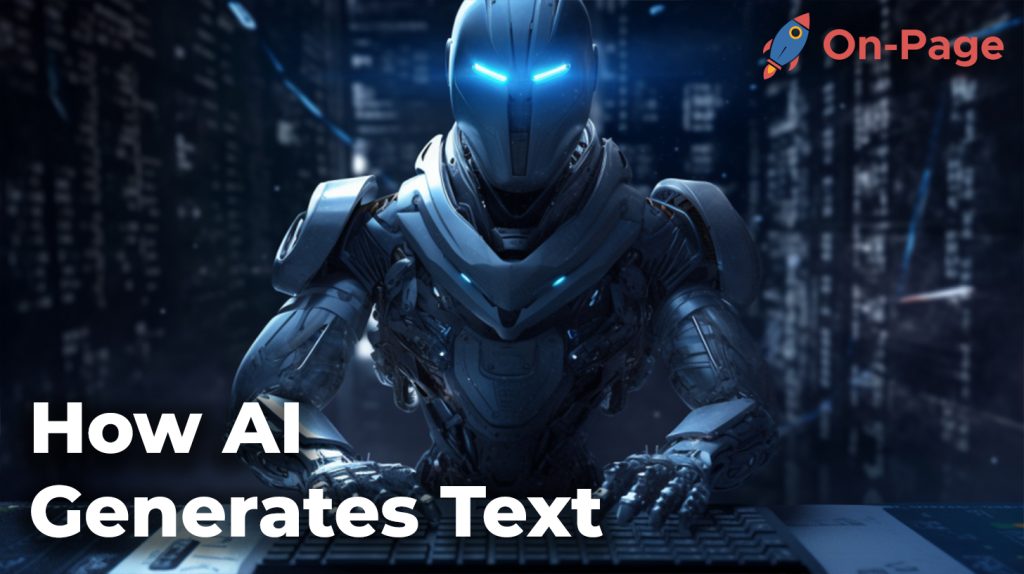

One significant advantage of using AI tools for generating text is the speed at which it can be produced. Writing weeks’ worth of material used to require a staff of dedicated writers who would tediously compose each sentence, paragraph, and article. Now, with AI technology such as On-Page.ai, a full article can be created in mere minutes. The tool is more efficient compared to manual writing; with its data analysis capabilities, it ensures targeted content production. What’s more, the tool takes away the burden of having to constantly come up with fresh ideas when trying to produce quality content.

There is no dispute that producing quality content is time-consuming and demands considerable skill. By analyzing data provided by users, On-Page.ai’s machine-learning algorithms can gauge audience needs and suggest the keywords needed to take an article over the line, giving your audience insights they may otherwise have overlooked. This leads us to the next advantage of AI-generated copy: it enables customization without sacrificing efficiency or consistency.

For example, let’s consider the creation of a user manual for a particular gadget or software product, where there is usually a fixed set of instructions or information to convey. Before AI text generators were invented, all user manuals had to be written manually; now they can be easily edited and updated with the use of AI tools. These tools generate copy that is worth reading and help ensure that every line in the article is significant, from the header to the conclusion.

Furthermore, AI-generated content can be personalized for different audiences at scale. This means that machines can produce tailored content for individual groups, depending on their preferences and interests without much effort. With access to user data, it is easier to understand what type of content readers are interested in and therefore how best to present an article tailored to their needs.

When it comes to creating quality content, it helps to understand how NLP handles context within a text, since this contributes greatly to the quality of the result. Next, we will explore how natural language processing intersects with generation and how it benefits article writing.

Natural Language Processing and Generation

AI text generation relies on two key technologies: natural language processing (NLP), which analyzes existing text and uncovers patterns of speech and thought, and natural language generation (NLG), which builds new copy based upon these patterns. NLP plays a crucial role in generating human-like speech by analyzing large volumes of textual data for patterns, trends, and themes. After identifying these patterns or themes, NLP-based tools provide recommendations on how best to integrate them into your article to make it more readable and optimized.

One key benefit of employing NLP technology in text generation is its ability to analyze context effectively. This is critical because words can have multiple meanings based on varying contextual cues. For example, when someone says “I like going out” in a conversation, it could mean many different things depending on the speaker’s tone or who they are speaking to. Context-based analysis tools that use NLP data metrics, such as those from On-Page.ai, make sense of these nuances. They provide users with better recommendation scores and help pages or articles with useful information rank higher while maintaining good readability.

Think of NLP as the key that unlocks the door to successful text generation. The technology provides insights into what people want, need, and expect from a particular article or website which helps in generating richer content. Similar to how a key opens up a locked door, NLP unlocks the potential treasures of an article idea transforming it into something truly remarkable.

However, NLP has limitations: language is challenging, and context varies based on region, dialect, and even individual colloquialisms within a conversation. Making machine processes understand all these varying inputs is not easy. While NLP can automate routine tasks such as grammar checking or spell checking, problems still occur with homonyms and sarcasm, which humans understand intuitively within a conversation but machines have trouble picking up on.

- A study conducted in 2020 showed that around 40% of companies are currently using AI-powered content generation tools to enhance their marketing and communications efforts.

- According to OpenAI, their GPT-3 model, released in June 2020, has a staggering 175 billion parameters, making it one of the most powerful language models available for generating human-like text.

- Research published in the Association for Computational Linguistics (ACL) Anthology suggests that transformer-based AI models like GPT-3 provide an average improvement of 16% in machine translation quality when compared with traditional sequence-to-sequence models.

In the next section, we’ll delve deeper into popular AI models used for natural language processing in text generation and their benefits.

Popular AI Models for Text Generation

AI text generation is a vast field comprising various models, each with its unique features and pros and cons. Nevertheless, some of these models have gained prominence over the years, particularly with the advent of powerful algorithms and data processing capabilities. This section explores some of the popular models used in AI text generation.

One such model is the Recurrent Neural Network (RNN), which uses machine learning to predict output based on the current input and on past information fed into the system. RNNs are commonly used to generate word sequences of arbitrary length in document summarization, language translation, and speech recognition tasks. They are designed to perform well on sequential data such as time series, enabling them to produce coherent and grammatically correct sentences.
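
To make the idea of "input plus past information" concrete, here is a minimal sketch of a single recurrent step in plain Python. The weights, the two-dimensional inputs, and the function name `rnn_step` are hand-picked assumptions for illustration only; a real RNN learns its weights from data and works with much larger vectors.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b):
    """One recurrent step: h_t = tanh(W_xh * x_t + W_hh * h_(t-1) + b)."""
    h_new = []
    for i in range(len(h_prev)):
        total = b[i]
        # Contribution of the current input.
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        # Contribution of the previous hidden state (the "past information").
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

# Hypothetical, hand-picked weights for a 2-dim input and a 2-dim hidden state.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [-0.4, 0.6]]
b = [0.0, 0.1]

# A toy input sequence; in practice each item would be a word vector.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

h = [0.0, 0.0]  # initial hidden state
states = []
for x in sequence:
    h = rnn_step(x, h, W_xh, W_hh, b)
    states.append(h)
    print([round(v, 3) for v in h])
```

Because the hidden state is carried from one step to the next, the same input can produce a different state depending on what came before it, which is what lets the network track word order.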

Another model gaining widespread adoption in AI text generation is the Generative Adversarial Network (GAN). Here, two neural networks work against each other – one generates fake instances of texts while the other distinguishes real from fake instances. GANs use an unsupervised learning method that allows them to create different outputs without relying on human guidance explicitly.

The Sequence-to-Sequence Model is yet another popular model for NLP applications such as machine translation, summarization, and dialogue systems. These models take an input sequence, such as a sentence in one language, and transform it into an output sequence, such as its translation.

Although every model has its advantages and disadvantages, some stand out for their effectiveness in producing high-quality generated text. In particular, Transformer-based models have seen significant adoption due to their exceptional performance in handling long-range dependencies between words in a text.

Transformer-Based Models: GPT-3 and BERT

Transformer-based models represent a new wave of innovation that uses advanced deep learning architectures capable of modeling long-distance dependencies between words effectively. The original Transformer architecture comprises an encoder and a decoder, and later models often use only one half: BERT uses only the encoder, and GPT-3 only the decoder. In each case the model builds contextualized embeddings that are used to predict the likelihood of a word appearing in a sentence.

One popular transformer-based model is GPT-3 (Generative Pre-trained Transformer 3), developed by OpenAI. At its release it was one of the largest language models worldwide, having been trained on roughly 570GB of filtered text data. GPT-3 has seen widespread adoption in generating coherent and fluent sentences that are difficult to differentiate from human-generated text. Its ability to generate text based on simple instructions or input makes it an attractive tool for businesses seeking personalized engagement with customers.

Another transformer-based model gaining popularity is BERT (Bidirectional Encoder Representations from Transformers), a product of Google’s research. BERT differs from earlier models because it reads the context on both sides of each word (hence “bidirectional”) and is pre-trained on unannotated text data without manual labels. This allows it to learn representations of words and phrases that perform well in many NLP tasks such as question answering, text classification, belief extraction, sentiment analysis, and more.

The power of transformer-based models is similar to how humans learn a language over time: by developing an understanding of how words interact with each other in a sentence. Transformer-based models achieve this by relating every word in a passage to every other word at each layer, allowing the model to take a long text sequence into account at once. It’s like reading an entire book cover-to-cover rather than just snippets of the content.

While transformer-based models are incredibly powerful in generating high-quality texts, there have been concerns about their ethical implications and potential misuse. Some critics worry that these models can be used for targeted disinformation campaigns or deepfake videos designed to deceive people. Nonetheless, companies using these tools have invested heavily in creating ethical frameworks aimed at addressing these issues while still reaping their benefits.

Key Techniques in AI Text Generation

AI text generation involves various techniques to achieve accurate, coherent and grammatically correct text. Some of the most important techniques are discussed below:

Neural Network Models

Neural network models employ deep learning algorithms to mimic the functioning of the brain’s neural networks, allowing them to learn from large datasets. The models usually consist of an input layer, hidden layers, and an output layer.

The input layer takes data in the form of text tokens which are converted to numeric vectors that can be processed by the network. The hidden layers perform computations on these vectors before passing them to the output layer. The output is a probability distribution over all possible words or characters that could come next given the input so far.
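
The final step described above, turning the output layer's raw scores into a probability distribution over the next word, is commonly done with a softmax function. The following is a minimal sketch; the four-word vocabulary and the scores are made up for illustration, whereas a real model scores tens of thousands of tokens.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtract the largest score before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and output-layer scores for the prompt "the cat sat on the".
vocab = ["mat", "dog", "moon", "sofa"]
logits = [2.0, 0.1, -1.0, 1.2]

probs = softmax(logits)
next_word_distribution = dict(zip(vocab, probs))

# Greedy decoding: pick the single most probable next word.
best_word = max(next_word_distribution, key=next_word_distribution.get)

for word, p in sorted(next_word_distribution.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.3f}")
print("greedy choice:", best_word)
```

Generation repeats this step: the chosen word is appended to the input, and the network produces a new distribution for the word after it. Sampling from the distribution instead of always taking the top word gives more varied text.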

With enough training data, neural network models can generate highly realistic and persuasive text.

Markov Chain Models

Markov chain models use probabilistic analysis to predict the likelihood of each subsequent word based on the preceding words in a sentence or text. The models represent a sequence as a state-transition graph where each state corresponds to a word, and each transition has a probability assigned based on how frequently it occurs in training data.

These models do not consider global contextual features but rather focus on more immediate dependencies between adjacent words. As such, they may fail to generate convincing text if the context is ambiguous or there are long-term dependencies.
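
A first-order Markov chain of this kind fits in a few lines. The sketch below assumes a tiny made-up corpus and uses hypothetical helper names (`build_bigram_model`, `generate`); it looks only at the single preceding word, which is exactly why such models lose track of longer context.

```python
import random
from collections import defaultdict

def build_bigram_model(text):
    """Map each word to the list of words that follow it in the training text."""
    words = text.split()
    transitions = defaultdict(list)
    for current, following in zip(words, words[1:]):
        # Repeated followers stay in the list, so sampling reflects frequency.
        transitions[current].append(following)
    return transitions

def generate(transitions, start, max_words, rng):
    """Walk the chain from `start`, sampling one follower at a time."""
    output = [start]
    for _ in range(max_words - 1):
        followers = transitions.get(output[-1])
        if not followers:  # dead end: this word never had a successor
            break
        output.append(rng.choice(followers))
    return " ".join(output)

# Toy training corpus (an assumption for illustration).
corpus = "the cat sat on the mat and the dog sat on the rug"
model = build_bigram_model(corpus)

rng = random.Random(0)  # fixed seed so repeated runs give the same text
sentence = generate(model, "the", 8, rng)
print(sentence)
```

Every adjacent word pair in the output has been seen in the corpus, so the text is locally plausible, but nothing stops the chain from wandering into a sentence that makes no sense as a whole.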

Hybrid Approaches

Hybrid approaches combine multiple methods such as neural networks and Markov chains into one model. They can address some limitations of individual methods and produce more accurate text.

For example, some recent works have proposed hybrid approaches that use neural networks for generating sequences of embeddings (which can capture global context) and then passing them through a Markov chain model for generating actual words.

Word Embeddings and Contextual Analysis

Word Embeddings

Word embeddings are a technique used to encode words into numerical vectors that capture their meaning and semantic relationships. By using word embeddings, AI systems can predict the probability of a word’s occurrence given its context in a sentence or document.

Imagine a dictionary where every word is assigned coordinates in space so that words with similar meanings are placed closer together. Word embeddings operate in a similar way by mapping each word to a position in an n-dimensional vector space.
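
One common way to measure how close two words are in that space is cosine similarity. Here is a small sketch with hand-picked three-dimensional vectors; the numbers are invented for illustration, while real embeddings are learned from data and usually have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, not taken from any trained model.
embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

sim_royal = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_fruit = cosine_similarity(embeddings["king"], embeddings["apple"])

print(f"king vs queen: {sim_royal:.3f}")
print(f"king vs apple: {sim_fruit:.3f}")
```

With these toy vectors, "king" and "queen" point in almost the same direction while "apple" does not, which mirrors the dictionary picture above.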

Contextual Analysis

Contextual analysis involves examining the surrounding text to determine the meaning of an ambiguous or polysemous phrase. For example, consider the following two sentences:

“I went fishing on the bank of the river.”

“I deposited my money at the bank.”

In these sentences, “bank” has different meanings based on its context. Contextual analysis helps AI models disambiguate words and determine their intended meaning based on their surroundings.
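
As a rough illustration of the idea, the sketch below picks a sense of "bank" by counting which hand-written cue words appear in the sentence. The sense inventory and cue lists are assumptions made up for this example; modern language models do not use such lists and instead learn contextual representations from data.

```python
# Hypothetical sense inventory: each sense lists words that tend to appear near it.
SENSES = {
    "river bank": {"river", "fishing", "water", "shore", "boat"},
    "financial bank": {"money", "deposited", "account", "loan", "cash"},
}

def disambiguate(sentence, senses):
    """Return the sense whose cue words overlap most with the sentence."""
    # Lowercase and strip basic punctuation so "river." matches "river".
    tokens = {word.strip(".,!?\"'").lower() for word in sentence.split()}
    return max(senses, key=lambda sense: len(senses[sense] & tokens))

print(disambiguate("I went fishing on the bank of the river.", SENSES))
print(disambiguate("I deposited my money at the bank.", SENSES))
```

The first sentence shares "fishing" and "river" with the river sense, and the second shares "deposited" and "money" with the financial sense, so each gets the reading a human would choose.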

Co-Occurrence Matrix

One way to create word embeddings is by building a co-occurrence matrix that represents how often words appear together in a text corpus. The matrix contains rows and columns for each unique word in the corpus, and entries indicate how often the corresponding row and column words appear together.
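
The counting step can be sketched as follows. The three-sentence corpus, the window size of two words, and the function name `cooccurrence_matrix` are assumptions chosen to keep the example small.

```python
def cooccurrence_matrix(sentences, window=2):
    """Count how often each pair of words appears within `window` positions."""
    vocab = sorted({word for s in sentences for word in s.split()})
    index = {word: i for i, word in enumerate(vocab)}
    matrix = [[0] * len(vocab) for _ in vocab]
    for s in sentences:
        words = s.split()
        for i, word in enumerate(words):
            # Look only to the right and update both cells,
            # so each pair is counted once and the matrix stays symmetric.
            for neighbor in words[i + 1 : i + 1 + window]:
                matrix[index[word]][index[neighbor]] += 1
                matrix[index[neighbor]][index[word]] += 1
    return vocab, matrix

# Toy corpus (an assumption for illustration).
sentences = ["the cat sat", "the dog sat", "the cat ran"]
vocab, matrix = cooccurrence_matrix(sentences)

print("     " + " ".join(f"{w:>4}" for w in vocab))
for word, row in zip(vocab, matrix):
    print(f"{word:>4} " + " ".join(f"{count:>4}" for count in row))
```

Each row of the matrix is already a crude vector for its word: "cat" and "dog" never appear together here, yet their rows look alike because both occur next to "the" and "sat".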

Once this matrix is constructed, dimensionality reduction techniques such as singular value decomposition (SVD) or principal component analysis (PCA) can be applied to collapse it into lower dimensional space while preserving important semantic relationships between words.

Limitations of Word Embeddings

One limitation of word embeddings is their dependence on the quality and diversity of training data. If certain types of language constructs are underrepresented, the resulting embeddings may not capture those relationships effectively.

Additionally, word embeddings may reinforce existing biases present in training data, leading to problems with fairness and equity. As such, it is crucial to carefully evaluate and improve training data to minimize bias before using them for text generation.

These techniques and methods demonstrate the potential of AI text generation in creating high-quality, efficient, and creative content. However, it is important to also consider the limitations and ethical considerations surrounding their use.

Advantages and Limitations of AI-Generated Text

AI-generated text has quickly become popular in the content marketing industry due to its ability to produce high-quality material in a time-efficient manner. However, like any technology, it comes with both advantages and limitations. Let’s explore some of them.

One of the advantages of AI-generated text is its efficiency. Generating content manually can be very time-consuming, especially when dealing with large volumes of data. With the help of AI technology like On-Page.ai, content creation becomes faster, more accurate, and consistent. This allows content marketers to produce a higher volume of quality content in less time than they would otherwise.

Another advantage of AI-generated text is that it can personalize content marketing for an audience, using pre-existing data and algorithms to ensure the content fits the interests and desires of the person it targets. This means businesses can better connect with consumers, generate leads, build connections, and gain their trust more effectively.

In addition to these benefits, there are also some limitations to consider when using AI-generated text. One limitation is that while AI systems are great at generating coherent and grammatically correct texts, they lack creativity. AI relies on previously existing data inputs; therefore, it does not have a personal experience to draw from or emotions like humans do.

Interestingly enough – or perhaps ironically – the predictability of AI-generated text models might prove beneficial for specific types of communications where clarity, consistency, and “plain language” prevail over assertiveness or sentimentality.

You might think of an AI tool as a gardener who will keep your lawn well-maintained throughout the year but lacks the artistic mindset that would turn your backyard into an enchanting Eden.

Another limitation is that while grammar and spelling may be perfect, AI-generated text can still be contextually flawed. It’s essential to create content ideas and outlines outside of AI text generators and test/proofread the final product carefully to ensure both accuracy and originality.

Efficiency, Creativity and Ethical Considerations

Efficiency is a significant advantage of using AI-generated texts; however, creativity remains problematic when it comes to crafting compelling narratives or persuasive language usage. Besides, ethical considerations are now more critical than ever as data privacy breaches increase daily.

The effectiveness of AI-generated content is most evident in email marketing campaigns and chatbot conversations, where customers can access support 24/7, even on weekends or holidays when human agents might not be available.

Once again, it all comes down to what you need this tool for – if you’re looking for an “art director” that will create truly awe-inspiring copywriting pieces with different styles or tones for different audiences, then maybe an AI writer isn’t your best bet. On the other hand, if your goal is creating SEO-optimized blog posts, web pages or generic communications – then a good AI writer like On-Page.ai might be just what your business needs.

One concern related to ethical considerations is the fact that many people believe that using AI to generate content can be cheating or equivalent to plagiarism. The idea here is that the writer hasn’t actually written the content in question, rather it was generated by a machine (even though it has been guided by humans). Therefore, businesses should disclose if they use automated content generation or mention it in their transparency reports.

By using plain language phrases like, “This post was written with the aid of AI software”, businesses can ensure that their content is not misleading or manipulating their audience.

Another ethical consideration is related to the use of external data sources. Given the recent concerns over data privacy breaches, companies should be cautious when using third-party sources with their tools. Data protection should always be top-of-mind when generating any kind of content.

When it comes to efficiency and creativity, some people compare AI-generated text to doing the dishes: handing routine tasks like washing the plates to a tool that does them quickly and efficiently leaves you more time to spend on generating creative ideas.

As we’ve explored, there are several advantages and limitations to using AI-powered tools like On-Page.ai and the Stealth AI Writer. Still, it’s clear that this technology will likely remain an integral part of content marketing strategies in the future, bolstering efficiency, consistency, and multilingual capabilities while freeing up time for creative tasks, which could ultimately lead to even more effective content production.

Frequently Asked Questions and Answers

Can AI-generated text be distinguished from human-generated text?

Yes, AI-generated text can often be distinguished from human-generated text. While advancements in natural language processing have made it increasingly difficult to differentiate between the two, there are still key differences that can reveal the source of the text.

One way to distinguish between AI-generated and human-generated text is through grammatical structure and syntax. AI algorithms may struggle with more complex sentence structures and subtle nuances of language that humans naturally incorporate into their writing.

In fact, research conducted by OpenAI found that their advanced language model, GPT-3, still had difficulty producing logically coherent passages when presented with certain prompts. This suggests that while AI has come a long way in generating convincing text, it still lacks the same level of cognitive understanding that humans possess.

Additionally, another factor to consider is the tone and style of the writing. An AI algorithm may struggle to produce writing that reflects the personality and individuality of a human author. Thus, even if an AI-generated piece of text meets all the grammatical criteria expected from a human-written piece, it may lack certain elements that suggest a human touch.

However, as technology advances and algorithms become more sophisticated, distinguishing between AI and human-generated text will only become more challenging. It’s important to approach text generated by machines with a critical eye and consider multiple factors before making a definitive judgment about its origins.

How is AI trained to generate coherent and accurate language?

AI is trained to generate coherent and accurate language through NLP techniques which involve teaching machines to understand and process human languages. NLP algorithms are developed using machine learning techniques that enable machines to understand the grammar, syntax, and semantics of a language.

One common approach for training AI models is deep learning, which uses neural networks to analyze vast amounts of data and identify patterns in language usage. For example, Google’s BERT (Bidirectional Encoder Representations from Transformers) model was trained on a massive dataset of about 3.3 billion words to improve its ability to recognize and interpret natural language.

Another important aspect of training AI models for generating accurate language is fine-tuning. This involves adapting pre-trained models by feeding them with more specific examples that correspond to the area of interest. One famous example is GPT-4, a state-of-the-art deep learning model that can generate high-quality text with impressive fluency – it has been fine-tuned across various fields, including healthcare and finance.

In conclusion, AI is trained to generate coherent and accurate language through a series of sophisticated NLP techniques such as deep learning, fine-tuning, and language modeling. With these approaches, researchers are successfully creating intelligent systems that are able to respond with not only grammatically correct phrases but also semantically meaningful ones. The future remains bright as AI technology continues to advance in natural language processing capabilities.

What limitations do AI systems have when generating text?

Artificial intelligence has made tremendous progress in generating text that is nearly indistinguishable from that written by humans. However, there are still limitations to what AI systems can do in this area.

One of the main limitations of current AI-powered natural language generation systems is their inability to understand context and emotions accurately. For example, an AI system may generate a news headline saying “Local Man Wins Lottery”, but it won’t be able to convey the excitement the man felt when he became a millionaire or the envy his neighbors feel now.

Another limitation of current AI systems is their inability to create original ideas or concepts. AI relies on pre-existing data and patterns, meaning that it is unlikely to come up with new ideas without being explicitly programmed to do so. This means that while AI can help writers generate content more quickly and efficiently, it cannot replace the creative process.

Furthermore, AI-generated content still relies heavily on human writers to ensure quality control. In 2019, OpenAI announced a language model called GPT-2 that generated realistic-sounding text that could pass as coming from humans. However, the researchers behind GPT-2 initially withheld the full model out of fear it could be used for malicious purposes like spreading fake news or impersonating real people, and released it in stages over the course of 2019.

In conclusion, while AI has made significant strides in generating text, there are still many limitations that need to be addressed before it can completely replace human writers. Understanding nuances like context and emotion will require vast amounts of training data and advanced algorithms. Additionally, we’ll need new techniques for fostering creative output if we want computer systems to come up with truly unique content on their own one day.

What are the ethical implications of using AI-generated text?

The rise of AI-generated text has brought ethical concerns to the forefront of the conversation. While it’s true that AI-generated content can provide significant cost savings and produce large volumes of content in a short amount of time, there are important ethical considerations to keep in mind.

One concern is the potential for manipulation and bias in the content produced by AI algorithms. For example, AI might be programmed with biased or incomplete data and produce discriminatory language that reinforces problematic stereotypes. This could have negative social and psychological impacts on vulnerable populations.

Moreover, a Gartner study has shown that over 90% of people cannot distinguish between AI-generated content and human-written text. This means that companies that use AI-generated text may deceive readers into believing they’re engaging with human-written content instead.

Another consequence is the impact on employment. With AI-generated text becoming more sophisticated, there may be job losses in sectors like journalism or copywriting. However, according to PwC (2020), it’s also predicted that by 2030, almost $16 trillion could be added to the global economy as a result of AI technology.

In conclusion, while AI-generated text has its advantages in terms of convenience and efficiency, it’s crucial to consider potential ethical implications like bias, deception, and unemployment before widely adopting it. Because these problems need addressing, guidelines must be put in place to regulate its use.

What algorithms do AI systems use to generate text?

AI systems use a variety of algorithms to generate text, including rule-based algorithms, statistical algorithms, and machine learning algorithms. Rule-based algorithms rely on sets of predefined rules and templates to generate text based on input data. Statistical algorithms, such as Markov chains and n-grams, rely on probabilistic models to analyze patterns in large datasets and generate text that is statistically similar to the input data.

However, machine learning algorithms – particularly deep learning algorithms – have become the most popular method for generating text with AI. These algorithms use neural networks that are trained on vast amounts of data to learn how to recognize and replicate patterns in language. With this approach, AI models can produce more natural-sounding language that closely mimics human writing styles.
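
For contrast with the learned approaches, the rule-based method mentioned above can be as simple as choosing a template and filling in its slots. The sketch below is a made-up weather example; the templates, field names, and the function `describe_weather` are hypothetical and not taken from any particular product.

```python
# Hypothetical templates keyed by weather condition.
TEMPLATES = {
    "rain": "Expect rain in {city} today with a high of {high} degrees Celsius. Bring an umbrella.",
    "clear": "Skies are clear in {city} today with a high of {high} degrees Celsius.",
}
# Fallback rule for conditions without a dedicated template.
DEFAULT_TEMPLATE = "The forecast for {city} today is {condition} with a high of {high} degrees Celsius."

def describe_weather(record):
    """Pick a template by rule, then fill its slots from the input data."""
    template = TEMPLATES.get(record["condition"], DEFAULT_TEMPLATE)
    return template.format(**record)

print(describe_weather({"city": "Oslo", "condition": "rain", "high": 14}))
print(describe_weather({"city": "Lima", "condition": "fog", "high": 19}))
```

The output is predictable and always grammatical, but it can only say what its author wrote templates for, which is the gap that statistical and neural methods try to close.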

According to recent studies, the effectiveness of AI-generated text heavily depends on the size and quality of the dataset used to train the model. For example, Google’s T5 transformer model was trained on a massive dataset of roughly 750GB of English text gathered from the web, which allowed it to achieve state-of-the-art results on a range of natural language processing tasks.

Overall, it’s clear that AI-generated text is becoming increasingly sophisticated thanks to advancements in NLP technology. As these systems continue to improve, they have the potential to revolutionize content creation and communication across various industries. AI-powered tools like On-Page.ai can help achieve this.