Picture this: you’re seconds away from posting the perfect tweet, but words fail you. Or maybe you’re writing a highly persuasive email that needs those magical words to make your clients go, “Wow!” As the frustration builds in these moments, imagine if there were an intelligent system that could understand your thoughts and magically string together the best possible word combinations for you.

But wait, what if I told you such a technology already exists? Welcome to the world of natural language generation (NLG), a technology with the potential to revolutionize content creation in unimaginable ways. Stay with us as we unravel how NLG works and how it’s changing the face of digital marketing, one sentence at a time.

NLG technology uses machine learning algorithms and architectures such as Markov chains, recurrent neural networks (RNNs), long short-term memory (LSTM) networks, and transformers to identify patterns in large amounts of data. The system then presents this data in a human-readable format, using pre-set templates or algorithms that generate entire summaries, articles, or responses based on the input data. Natural language understanding (NLU), which interprets the meaning of input text, complements NLG so that the generated content makes sense to human readers.

Core Concepts of Natural Language Generation

Natural language generation (NLG) is an artificial intelligence technology that transforms data into human-readable language. NLG software analyzes a data set and creates output texts that follow a predefined structure, format, and set of language rules. The primary goal of NLG is to generate narrative content that is informative, coherent, and engaging to its intended audience.

NLG outputs can range from short summaries or bullet points to full-length articles, reports, product descriptions, or even conversations with chatbots. Regardless of the intended purpose, NLG follows a specific set of core concepts that make it an effective and valuable tool for businesses across different industries.

The first core concept of NLG is that it needs high-quality input data. Without clean, structured, and relevant data, NLG algorithms will not function correctly and will fail to produce useful outputs. Preprocessing the input data often involves natural language processing (NLP) techniques such as named entity recognition, sentiment analysis, or part-of-speech tagging.

Another core concept of NLG is the use of templates. NLG systems employ pre-made templates or frameworks that determine the output’s structure, tone, formatting, and language complexity. These templates also provide guidance on how to include relevant entities or keywords in the generated text while ensuring grammatical accuracy and coherence.

NLG also utilizes rule-based systems or statistical models to select the most appropriate sentences from a pool of potential outputs. This process takes into account user preferences, topic relevance, target audience demographics, or language functionality.
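As a rough illustration of the selection step, the sketch below scores a pool of candidate sentences with a hand-written rule: it rewards topic keywords and penalizes sentences that run longer than a preferred length. The scoring rule, the 12-word limit, and the product names are illustrative assumptions, not a standard NLG method; a statistical system would learn these preferences from data instead.

```python
def score(sentence: str, topic_keywords: set, max_words: int = 12) -> int:
    """Hypothetical rule: +1 per topic keyword, -1 per word beyond max_words."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    relevance = sum(1 for w in words if w in topic_keywords)
    length_penalty = max(0, len(words) - max_words)
    return relevance - length_penalty


def select_best(candidates: list, topic_keywords: set) -> str:
    """Return the highest-scoring candidate (ties go to the earliest one)."""
    return max(candidates, key=lambda s: score(s, topic_keywords))


candidates = [
    "Our store has many items.",
    "The X200 headphones offer 30 hours of battery life.",
    "Battery life on the X200 headphones reaches 30 hours with noise cancelling on.",
]
keywords = {"battery", "headphones", "x200", "hours"}
print(select_best(candidates, keywords))
```

Both product sentences mention the same four keywords, so the length penalty is what tips the choice toward the shorter one.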

For example, suppose you are using an e-commerce platform that incorporates NLG technology to generate product descriptions automatically. In that case, the system might use different templates for different categories of products such as electronics versus skincare items. These templates would ensure that the generated text includes essential features such as brand name, model number, or customer reviews in a way that is both persuasive to the customer and informative in terms of product specifications.
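A minimal sketch of category-specific templates, using Python’s standard `string.Template`. The template wording, field names, and the Acme X200 product are hypothetical; a production system would also handle pluralization, tone, and optional fields.

```python
from string import Template

# Hypothetical templates: one per product category, as in the e-commerce example.
TEMPLATES = {
    "electronics": Template(
        "The $brand $model delivers $feature. Rated $rating/5 by $reviews customers."
    ),
    "skincare": Template(
        "$brand $model is formulated for $feature. Loved by $reviews customers ($rating/5)."
    ),
}


def describe(product: dict) -> str:
    """Fill the template for the product's category with its attributes."""
    template = TEMPLATES[product["category"]]
    # substitute() raises KeyError if a required field is missing,
    # so incomplete records fail loudly instead of producing a gap.
    return template.substitute(product)


print(describe({
    "category": "electronics", "brand": "Acme", "model": "X200",
    "feature": "30 hours of battery life", "rating": 4.6, "reviews": 1200,
}))
```

Keeping the templates in a dictionary keyed by category means adding a new product line only requires adding a new template, not changing the generation code.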

NLG algorithms rely on different machine learning techniques to create meaningful output texts. These techniques include Markov chain models, decision trees, and neural networks such as RNNs and their LSTM variant. By training these algorithms on large datasets of human-written content, NLG systems can learn to mimic human-like writing styles while maintaining grammaticality and coherence.
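To show how a Markov chain model picks up patterns from human-written text, here is a minimal first-order (word-bigram) generator. The tiny training corpus is a placeholder assumption; real systems train on far larger datasets, and neural models such as LSTMs replace the one-word memory with a learned representation of the preceding context.

```python
import random
from collections import defaultdict
from typing import Optional


def build_chain(text: str) -> dict:
    """Map each word to the list of words observed immediately after it."""
    words = text.split()
    chain = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        chain[current].append(nxt)
    return chain


def generate(chain: dict, start: str, max_words: int = 10, seed: Optional[int] = None) -> str:
    """Walk the chain from `start`. Duplicates in each successor list make
    frequent transitions proportionally more likely to be sampled."""
    rng = random.Random(seed)
    output = [start]
    # Stop at the length limit or when the last word has no observed successor.
    while len(output) < max_words and chain.get(output[-1]):
        output.append(rng.choice(chain[output[-1]]))
    return " ".join(output)


corpus = "the battery is great and the price is fair and the screen is bright"
chain = build_chain(corpus)
print(generate(chain, "the", seed=42))
```

Every word pair in the output was seen in the training text, which is why Markov output is locally fluent but can drift without any sense of the overall message.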

Despite the success of NLG in various domains, there are still challenges that researchers and developers need to overcome. One major challenge is to ensure the generated output’s variability and creativity. This means enabling NLG systems to generate texts that do not follow a rigid template but instead incorporate idiomatic expressions, metaphors, or other literary devices.

Another challenge is balancing accuracy and simplicity in the generated output. While being technically correct is important for certain applications such as medical reports or legal documents, it might not be desirable for other contexts where readability and ease of understanding are more critical. Finding the right balance between precision and simplification is an ongoing debate among NLG practitioners.

NLG Usage in Numbers

- According to Gartner, by 2025, 30% of outbound marketing content generated and distributed by large businesses will be produced using AI and NLG technology.

- A study conducted by Grand View Research estimated that the global NLG market was valued at US$833.6 million in 2020 and is predicted to grow at a compound annual growth rate (CAGR) of 25.5% from 2021 to 2028.

- It has been reported that large NLG models such as OpenAI’s third-generation Generative Pre-trained Transformer (GPT-3) were trained on text drawn from books, articles, and websites; the raw web crawl alone amounted to about 45 terabytes of compressed text before filtering (roughly 570 gigabytes after filtering).

NLG, NLP, and NLU Explained

NLG is one of several related natural language-based technologies that fall under the umbrella term natural language processing or NLP. NLP encompasses a range of topics such as text classification, sentiment analysis, speech recognition, named entity recognition, or language translation.

One essential difference between NLG and the rest of NLP is directionality. Most NLP tasks focus on converting human language into machine-readable data so that computers can perform tasks such as analyzing sentiment or recognizing voice commands. In contrast, NLG transforms machine-readable input into human language output.

Another important aspect of NLP is natural language understanding or NLU. While NLP aims to convert human language into a machine-readable format, NLU focuses on interpreting the meaning behind the text. This includes understanding nuanced linguistic constructs such as sarcasm, irony, or ambiguity, and being able to extract relevant information from unstructured text.

NLG, NLP, and NLU are all driven by AI algorithms that rely on statistical models and deep learning techniques. These algorithms use large datasets to learn semantic patterns in language and apply them to new data inputs. This approach allows NLP and NLG systems to be highly adaptable to different contexts and languages.

For example, suppose you are using a voice assistant device that employs NLP technology to understand your spoken commands and perform various tasks such as setting alarms or playing music. In that case, the system might use pre-trained models of speech recognition that can recognize your accent or vocal tone while ignoring background noise or other irrelevant input. Once the system has understood your intent, it can then generate an appropriate output response using NLG techniques.

NLP and NLG technologies have numerous applications, ranging from healthcare and marketing to finance. In the healthcare industry, NLP is used for tasks such as analyzing medical records, identifying symptoms, or supporting disease diagnosis, while NLG is used to generate medical reports or patient summaries.

In the marketing domain, NLG is used for content creation purposes such as product descriptions, blog articles, or even social media posts. In finance, NLP is applied to analyze market trends, identify fraud cases, or automate customer support responses.

However, some controversies surrounding the use of NLP and NLG have arisen in recent years. One critical concern is about bias in machine learning algorithms that power these technologies. If datasets are not diverse enough or contain inherent biases based on race, gender, or class, then the resulting outputs could perpetuate such biases.

Privacy concerns around speech and text data collected by NLP applications have also been raised. Some critics argue that such data could be used for unwanted surveillance or targeted advertising purposes, making it important to establish ethical guidelines and regulations around the collection and use of such data.

The NLG Step-by-Step Process

Natural language generation is a complex process that involves several key steps for transforming raw data into human-like language. Understanding these steps will help you appreciate how NLG works and the possibilities it presents. Let’s explore each step in more detail.

- Content Analysis: The first step is to analyze the raw data and extract meaningful insights from it. This involves identifying patterns, trends, and relationships between the data points. For example, if we were analyzing customer feedback on a product, we would look for common issues or complaints that customers are raising.

- Data Understanding: Next, the NLG system needs to understand the context and meaning of the data points it has extracted. This means mapping the data into a semantic model that can be easily transformed into natural language. For instance, if we found that customers were complaining about long wait times for customer service, we need to ensure the NLG system understands what “customer service” means in this context.

- Document Structuring: Once the data has been analyzed and understood, the next step is to organize it into a coherent structure. This involves breaking down the data into smaller pieces such as paragraphs or sentences and organizing them in a way that makes sense to readers. Despite its importance, document structuring is often overlooked in NLG research.

- Sentence Aggregation: Now that we have structured our content, we need to aggregate it into sentences that make sense. This involves combining related sentences while keeping them grammatically correct and logically sequenced. A useful analogy is piecing together Lego blocks until you have built something new.

- Grammatical Structuring: Our content has been organized and molded into meaningful chunks and legible sentences. The next step is to ensure that these blocks of text or clauses fit within the limitations of grammar, syntax, and style. NLG systems must ensure that the output conforms to standard grammatical rules and uses vocabulary suitable for the target audience.

- Language Presentation: Finally, the NLG system presents the content in a human-like language format. This involves using stylistic techniques such as metaphor, alliteration, and rhetorical questions to make the text more engaging. The text must be easily readable and should match the sophistication level of your audience.
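The six steps above can be sketched end to end in a few lines of Python, using the customer-feedback example. Everything here is a simplified assumption for illustration: the issue labels, the phrase table standing in for a semantic model, and the rule-based helpers. Real systems implement each stage with far richer models, and stage boundaries vary between architectures.

```python
from collections import Counter

# Hypothetical semantic model: maps raw issue labels to reader-facing noun phrases.
PHRASES = {
    "wait_time": "long wait times for customer service",
    "packaging": "damaged packaging",
    "price": "high prices",
}


def pluralize(n: int, noun: str) -> str:
    """Grammatical structuring: make the noun agree with its count."""
    return f"{n} {noun}" if n == 1 else f"{n} {noun}s"


def join_clauses(items: list) -> str:
    """Sentence aggregation: merge several clauses into one list-style sentence."""
    if len(items) <= 2:
        return " and ".join(items)
    return ", ".join(items[:-1]) + ", and " + items[-1]


def summarize(labels: list, top_n: int = 2) -> str:
    # 1. Content analysis: count how often each issue is raised.
    counts = Counter(labels)
    # 2. Data understanding: translate labels via the semantic model.
    # 3. Document structuring: most frequent issues first, keep the top few.
    ranked = [(PHRASES[label], n) for label, n in counts.most_common(top_n)]
    # 4-5. Aggregation and grammatical structuring.
    clauses = [f"{phrase} ({pluralize(n, 'complaint')})" for phrase, n in ranked]
    # 6. Language presentation: frame the facts for the reader.
    return f"Customers most often mentioned {join_clauses(clauses)}."


labels = ["wait_time"] * 3 + ["packaging"] * 2 + ["price"]
print(summarize(labels))
```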

Data Understanding to Language Presentation

The process from data understanding to NLG presentation is often overlooked by users. However, it is one of the most critical factors that contribute to an effective NLG system.

When transforming raw data into natural language, it is crucial that NLG software understands the context behind each data point extracted from sources like databases or spreadsheets. By using NLP techniques like semantic parsing and dependency parsing, NLG systems can uncover meaning beyond individual words.

There are still challenges in this area related to voice and tone. Each person speaks differently, and their tone is influenced by emotional state and conversational context. Since language can be interpreted in various ways depending on how it is spoken or written, factoring in context can help reduce errors when analyzing raw data.

Let’s put this into perspective. When we speak, we use words whose meaning depends on how they are used within a sentence or scenario. For instance, the verb “run” can describe physical movement (“run a marathon”) or leadership (“run a company”). To capture context-dependent senses like these, NLG systems typically rely on sophisticated modeling tools such as deep learning networks.
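Deep learning is one way to resolve such ambiguity. For a self-contained illustration, the sketch below swaps in a much simpler classic technique, the simplified Lesk algorithm, which picks the sense whose dictionary definition overlaps most with the surrounding words. The two-sense inventory and the stopword list are hand-written assumptions.

```python
# Hypothetical sense inventory for "run"; a real system might use WordNet glosses
# or a neural model trained on sense-annotated text.
SENSES = {
    "run (move quickly)": "move fast on foot race jog sprint legs speed marathon",
    "run (manage)": "manage lead operate direct business company organization team",
}

STOPWORDS = {"a", "an", "the", "to", "of", "and", "in", "he", "she", "they", "will"}


def disambiguate(sentence: str) -> str:
    """Simplified Lesk: choose the sense whose gloss shares the most words with the context."""
    context = {w.strip(".,!?").lower() for w in sentence.split()} - STOPWORDS
    return max(SENSES, key=lambda sense: len(context & set(SENSES[sense].split())))


print(disambiguate("She will run the company after the merger."))
print(disambiguate("He likes to run a marathon every spring."))
```

Word overlap breaks down quickly when the context uses synonyms that the gloss lacks, which is one reason learned models are preferred in practice.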

The next step is structuring the content. This involves breaking the data down into smaller pieces that are easy to manipulate, such as phrases or individual sentences. These chunks can then be checked for logic and consistency before being combined with other units to form a coherent narrative.

Let’s say you have extracted data related to sales figures from different stores over various months. By arranging this data by month, for example, NLG algorithms can create an automated story about how sales changed over time in each location.
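A minimal sketch of that monthly sales story. The store names and figures are made up for illustration, and the phrasing rules (“rose”, “fell”, “held steady”) are one simple choice among many.

```python
# Hypothetical monthly unit sales per store, listed in chronological order.
SALES = {
    "Downtown": {"January": 120, "February": 150, "March": 135},
    "Airport": {"January": 80, "February": 76, "March": 95},
}


def describe_change(prev: int, curr: int) -> str:
    """Turn a month-over-month change into a short verb phrase."""
    if curr == prev:
        return "held steady"
    pct = abs(curr - prev) / prev * 100
    verb = "rose" if curr > prev else "fell"
    return f"{verb} {pct:.0f}%"


def store_story(store: str, monthly: dict) -> str:
    """Narrate how one store's sales changed from month to month."""
    months = list(monthly)  # dicts preserve insertion order (Python 3.7+)
    parts = [
        f"{describe_change(monthly[prev], monthly[curr])} in {curr}"
        for prev, curr in zip(months, months[1:])
    ]
    return f"At the {store} store, sales " + ", then ".join(parts) + "."


for store, monthly in SALES.items():
    print(store_story(store, monthly))
```

Arranging the data by month first is what makes the narrative possible: each sentence fragment describes the transition between two adjacent months.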

After building logical representations of the content, the generator needs to produce grammatically correct formulations. Subjects must agree with their verbs, and verbs must fit their complements. The generator must also choose prepositions according to context and respect collocations (words that habitually occur together, such as “make a decision” rather than “do a decision”), all while following the syntax and style appropriate to the intended purpose.
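A toy realizer can illustrate two of these checks: subject-verb agreement and collocation choice. The lexicon and collocation table below are hand-written assumptions covering only a few words; real realizers encode far broader grammatical knowledge.

```python
# Hypothetical mini-lexicon: (singular, plural) present-tense forms per verb lemma.
VERB_FORMS = {
    "show": ("shows", "show"),
    "need": ("needs", "need"),
}

# Hypothetical collocation table: the verb that conventionally pairs with each noun.
COLLOCATIONS = {"decision": "make", "mistake": "make", "risk": "take", "photo": "take"}


def realize_clause(subject: str, count: int, lemma: str, complement: str) -> str:
    """Subject-verb agreement: pick the verb form that matches the subject's number."""
    singular, plural = VERB_FORMS[lemma]
    verb = singular if count == 1 else plural
    return f"{subject} {verb} {complement}."


def verb_noun_phrase(noun: str) -> str:
    """Collocation: choose the conventional verb for the noun ("make a decision")."""
    return f"{COLLOCATIONS[noun]} a {noun}"


print(realize_clause("The report", 1, "show", "steady growth"))
print(realize_clause("The reports", 2, "show", "steady growth"))
print(realize_clause("The board", 1, "need", "to " + verb_noun_phrase("decision")))
```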

Homonyms (words that are spelled or pronounced the same but have different meanings) can cause difficulties when software generates reports or marketing content, because a misinterpretation can shift the text significantly away from its original meaning.

Imagine a sentence like: “The company decided to go on a hunt for doughnuts.” Without the surrounding context, a system cannot tell whether “hunt” means searching for something or literally going out hunting, and choosing the wrong sense changes the meaning of the whole sentence.

Finally, we arrive at language presentation, where storytelling best practices come into play. At this point, NLG concentrates not only on the facts but also on how they are presented. The way information is delivered affects the quality of the reading or listening experience, so the style varies according to the goals set by the user’s requirements.

Sentiment-based content creation for social media and marketing campaigns, driven by patterns in the data, is where these generators come in handy.

NLG algorithms are informed primarily by content analysis, which feeds semantic meaning into the system’s language model. Feeding keywords into the analysis tools gives the generated content its semantics and helps the system better understand the reader’s needs. This helps it create content that is both informative and engaging.

A common pitfall in language presentation is neglecting consistency in tone and style while focusing mainly on grammatical correctness. An overemphasis on grammatically correct sentences may lead to robotic text that lacks a human touch.

Modeling the output on how a person would actually write helps keep the text human-like and readable, and it leaves room for the occasional informal turn of phrase or creative deviation.

The process of transforming raw data into natural language using NLG algorithms requires an understanding of context, grammar, syntax, and style in order to create coherent and engaging narratives. Challenges in NLG include accounting for variations in voice and tone that can affect meaning, and ensuring agreement between subjects and verbs, between verbs and their complements, and in the use of prepositions and set phrases. NLG systems use techniques like deep learning networks to model the complexity of natural language. Finally, NLG systems should prioritize both accuracy and readability when presenting information.

Applications of NLG Technology

The applications of NLG technology are far-reaching, spanning across industries and use cases. NLG is a powerful tool for generating human-like language based on data, which can be applied in various ways to enhance communication and productivity.

One of the most common applications of NLG is in business intelligence and reporting. NLG can help organizations transform large amounts of data into meaningful summaries, reports, or narratives in seconds. This functionality can help businesses save valuable time and resources by automating reporting processes that previously required manual work.

NLG technology is also making it easier to personalize customer engagement. Chatbots that use NLG algorithms can respond with dynamic content that takes context into account.

For example, when a customer contacts an online store to ask about a specific product, an NLG-powered chatbot could process the customer’s inquiry and provide a customized response that includes relevant information such as the product’s availability, its price, shipping time frames, and more.
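The product-inquiry example can be sketched with a keyword lookup standing in for intent detection and a string template standing in for the NLG step. The catalog, product IDs, and reply wording are hypothetical; a real chatbot would use a trained NLU model and query live inventory and shipping systems.

```python
# Hypothetical catalog keyed by product ID.
CATALOG = {
    "x200": {"name": "X200 headset", "price": 149.0, "stock": 12, "ship_days": 3},
    "s10": {"name": "S10 speaker", "price": 89.5, "stock": 0, "ship_days": 5},
}


def answer(query: str) -> str:
    """Find the product mentioned in the query and phrase a reply from its data."""
    words = {w.strip("?.,!").lower() for w in query.split()}
    # Stand-in for NLU: match any known product ID that appears in the query.
    matches = [pid for pid in CATALOG if pid in words]
    if not matches:
        return "Sorry, I couldn't find that product. Could you share the model number?"
    p = CATALOG[matches[0]]
    # Stand-in for NLG: fill a response template from the product record.
    if p["stock"] > 0:
        return (f"The {p['name']} costs ${p['price']:.2f} and is in stock "
                f"({p['stock']} left). Orders usually ship within {p['ship_days']} days.")
    return f"The {p['name']} costs ${p['price']:.2f} but is currently out of stock."


print(answer("Is the X200 available?"))
```

The reply changes with the underlying data (stock level, price) rather than being a fixed script, which is what makes the response feel customized.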

The ability of chatbots that pair NLU with NLG to understand natural language inputs has opened up new opportunities for businesses looking to leverage conversational interfaces. By adapting to user inputs, these chatbots can convert raw data into custom responses on the fly, without any manual intervention.

However, like all new technologies, there are debates around the effectiveness of NLG-powered chatbots in delivering personalized content. While NLP-powered chatbots lead to more conversational experiences than menu-based systems, they can be challenging for users to navigate in scenarios where they must clearly communicate what they need.

To make matters worse, some developers overestimate their ability to build these complex bots, resulting in a poor user experience. An effective NLG algorithm relies on understanding linguistic structure alongside domain-specific knowledge, both of which should improve as it is fed more data.

That being said, NLG technology is gaining traction in content creation as well.

Chatbots, Voice Assistants, and Content Creation

Chatbots and voice assistants are the most common examples of the use of NLG technology in content creation. NLG can generate responses to user queries almost instantly, making it an ideal tool to augment the performance of chatbots and voice assistants.

The rise of virtual assistants like Siri or Alexa is proof that voice-activated devices are here to stay. With NLU to interpret natural language commands and NLG to phrase the replies, these devices can carry out complex tasks.

For instance, when you ask Siri or Alexa for a recipe, they can pull one up in real time thanks to machine learning algorithms that analyze your spoken command. Other applications include ordering food online or requesting general services like scheduling appointments.

Content creators have also shown interest in using NLG technologies to create written content such as news articles and reports, especially since it reduces the need for human intervention.

With the advent of advanced AI algorithms, NLG-powered content generators can create highly original pieces without compromising on quality. In fact, some companies already use automated reporting tools that produce newsletters and other daily roundup reports.

Think about speech recognition software like Dragon NaturallySpeaking. It continuously learns from your speech and improves its accuracy over time. NLG algorithms follow a similar approach, improving their writing based on the data fed into the model.

Advancements and Challenges in NLG

As with any technology, natural language generation has seen some significant advancements over the years. One of the main areas where NLG has made leaps forward is in terms of language understanding. Thanks to AI-powered models and algorithms, NLG systems are now able to better understand the context behind certain words or phrases, allowing them to generate more accurate and relevant narratives.

Another area in which NLG is advancing is its ability to create output that is more natural-sounding. This is thanks to advances in machine learning, which have enabled systems to better mimic human speech patterns and tone. In fact, some of the most advanced systems today are so good at this that it can be difficult to tell whether you’re reading something generated by a machine or a person.

However, there are still some challenges that NLG needs to overcome before it can be considered a truly mature technology. One of these challenges is data quality. NLG relies on large amounts of data to generate narratives, and if that data is inaccurate or incomplete, the output will suffer as a result. Additionally, NLG systems need “clean” data, meaning data that has been standardized and labeled so that the system can easily interpret it. Ensuring data quality is an ongoing challenge for many businesses.
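A minimal sketch of that kind of cleaning step, applied before any text is generated. The field names, the standardization rules, and the choice to reject (rather than repair) bad records are assumptions for illustration.

```python
REQUIRED = ("store", "month", "sales")


def clean(records: list) -> tuple:
    """Standardize records; return (usable, rejected)."""
    usable, rejected = [], []
    for raw in records:
        # Incomplete: a required field is missing or empty.
        if any(raw.get(field) in (None, "") for field in REQUIRED):
            rejected.append(raw)
            continue
        try:
            # Standardize numbers written with thousands separators, e.g. "1,200".
            sales = float(str(raw["sales"]).replace(",", ""))
        except ValueError:
            # Inaccurate: the value cannot be read as a number.
            rejected.append(raw)
            continue
        usable.append({
            "store": str(raw["store"]).strip().title(),      # " downtown " -> "Downtown"
            "month": str(raw["month"]).strip()[:3].title(),  # "JANUARY" -> "Jan"
            "sales": sales,
        })
    return usable, rejected


records = [
    {"store": " downtown ", "month": "JANUARY", "sales": "1,200"},
    {"store": "Airport", "month": "Feb", "sales": None},
    {"store": "Airport", "month": "Mar", "sales": "n/a"},
]
usable, rejected = clean(records)
print(usable)
print(len(rejected), "record(s) rejected")
```

Separating rejected records instead of silently dropping them makes it possible to report data problems back to whoever owns the source.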

Another key challenge is ensuring that the output generated by NLG systems is understandable and useful to humans. For example, generating complex financial reports with lots of technical jargon can be a challenge for many NLG systems. This requires not only an understanding of language but also an understanding of context and purpose, something that humans do naturally but machines struggle with.

One anecdotal case involves an e-commerce platform that implemented an NLG tool for product descriptions. While the tool was able to generate descriptions for thousands of products quickly and efficiently, the company found that the descriptions weren’t actually very helpful to customers. This was because the NLG tool struggled to understand what information was important to include and what wasn’t, something that a human copywriter would be able to do naturally.

Despite these challenges, NLG technology is rapidly developing and being adopted by businesses across a wide range of industries. From chatbots and voice assistants to financial reporting and content creation, NLG has the potential to revolutionize the way we interact with data.

State-of-the-Art Approaches and Limitations

In terms of state-of-the-art approaches to NLG, there are a number of exciting developments taking place in the field. One fast-growing area is the use of deep learning models such as RNNs and LSTMs. These models are capable of learning complex patterns and dependencies within data sets, allowing them to generate highly accurate and relevant narratives.

Another area seeing significant progress is the use of unsupervised learning techniques for NLG. Traditionally, NLG systems have relied on supervised learning, where data is labeled and used to train the system, but unsupervised learning offers a new approach in which data can be mined without prior labeling. This has the potential to significantly reduce the time and cost required to develop NLG systems.

However, despite these advances, there are still some key limitations that need to be addressed if NLG is to become a truly transformative technology. One of these limitations is around domain specificity – in other words, how well an NLG system can generate output for specific domains or industries. While most NLG systems can generate general-purpose narratives reasonably well, they often struggle when it comes to more specialized domains such as medicine or law.

Another limitation is explainability. Because NLG systems rely on complex algorithms and machine learning models, it can be difficult to understand how they arrive at certain conclusions or generate specific narratives. This can be a problem for businesses that need to ensure the output of an NLG system is fair, unbiased, and accurate.

Finally, there is the ongoing challenge of ensuring that NLG systems are ethical and responsible. Because NLG can generate large amounts of content quickly and easily, there is a risk that it could be used to spread disinformation or propaganda. Additionally, NLG systems need to be trained on high-quality data in order to avoid perpetuating biases or stereotypes. Ensuring that NLG systems are used responsibly and ethically is a key challenge that must be addressed by the industry as a whole if we are to realize the full potential of this technology.

AI-powered content writing tools like On-Page.ai have changed how people generate smarter articles for business. Discover how it can empower your SEO campaigns today.

Frequently Asked Questions

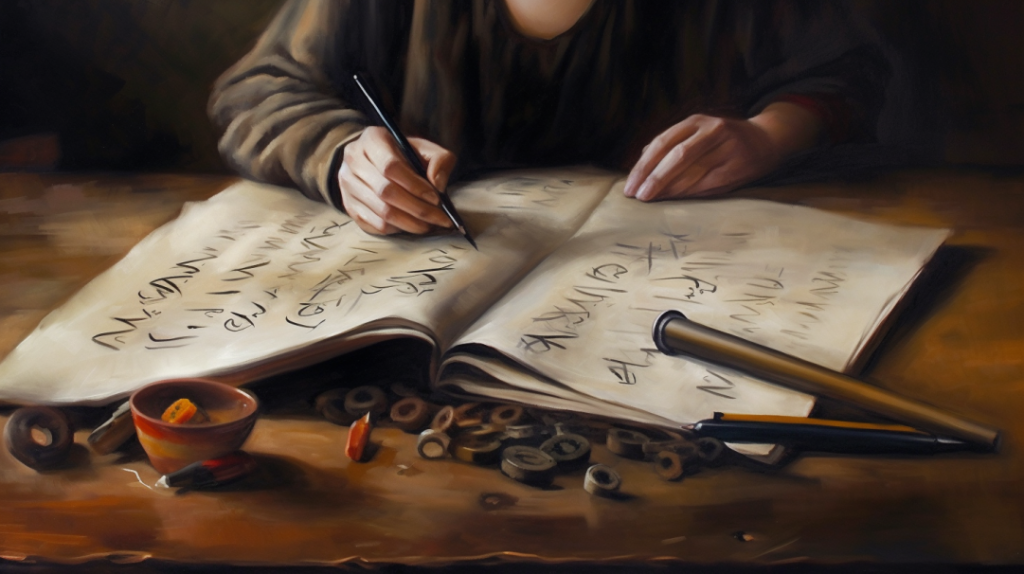

1. What programming languages are commonly used in natural language generation?

NLG involves the use of programming languages to generate human-like text through algorithms and rules. The most commonly used programming languages for NLG include Python, Java, and R.

Python is widely considered the most popular language for natural language processing and generation due to its versatility and extensive library support. In fact, according to a 2020 survey conducted by Analytics India Magazine, Python was the top language used in NLP projects with a usage rate of over 70%.

Java is another popular language in NLG thanks to its efficient memory management and object-oriented design. It also offers numerous open-source libraries for text processing and analysis, such as Stanford CoreNLP and the SimpleNLG realization engine.

Finally, R is often used for statistical analysis in NLG applications. Its popularity stems from its ability to handle large data sets and perform complex calculations quickly.

Overall, while these three languages are commonly used in natural language generation, there are many other programming languages that can be utilized depending on the specific needs of a project.

2. What advancements in NLP have contributed to the development of NLG?

NLG has come a long way in recent years thanks to advancements in NLP. NLP technology has enabled NLG systems to analyze and understand large amounts of data, which has helped to improve the accuracy and efficiency of NLG output.

One key advancement in NLP that has contributed to the development of NLG is deep learning. Deep learning algorithms have transformed the way machines learn from large datasets and make predictions or generate text. These algorithms are capable of identifying patterns in training data and developing highly accurate models for NLG.

Another important development has been the rise of transformer-based models such as the GPT series. Transformer-based models use self-attention mechanisms, which let every position in a sequence weigh every other position, allowing them to generate highly coherent and contextually relevant text. Additionally, transformer-based models capture longer-range context than traditional recurrent neural networks, which helps them produce longer coherent texts and makes them more effective for NLG tasks.
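The core of self-attention is the scaled dot-product formula from the original transformer design, softmax(QKᵀ / √d_k) V. The pure-Python sketch below computes it for a toy sequence. In a real transformer, Q, K, and V are learned linear projections of the token embeddings and there are multiple attention heads; here, as a simplifying assumption, the toy vectors are used directly as all three.

```python
import math


def softmax(xs: list) -> list:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(Q: list, K: list, V: list) -> list:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one output row per query."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # How strongly this position attends to every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


# Three toy token vectors; for simplicity they serve directly as Q, K, and V.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(X, X, X):
    print([round(x, 3) for x in row])
```

Because every query is compared with every key in one step, distant positions are as reachable as neighboring ones, unlike an RNN that must carry information forward one step at a time.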

According to Grand View Research, the global market for NLP is expected to reach $31.1 billion by 2026, with much of this growth attributed to advancements in NLG technology. With these developments, we can expect even greater levels of automation and personalization in content creation and communication.

3. What are the main components of an NLG system?

NLG systems consist of several main components, including content planning, document structuring, data-to-text conversion, and surface realization.

Content planning involves determining what information to include in the generated text. This is typically done using natural language understanding techniques to analyze the input data and identify relevant concepts and relationships.

Document structuring involves organizing the content in a way that makes sense for the intended audience. This might involve creating sections or headings, adding captions or footnotes, or highlighting important information using formatting or visual cues.

Data-to-text conversion involves mapping the input data to natural language sentences. This can be done using templates or rules-based systems, but many modern NLG systems use machine learning algorithms to generate more natural-sounding text.

Surface realization turns the planned, structured content into final, grammatical sentences, applying rules for word order, inflection, and punctuation. The finished text can then be rendered into formats such as HTML for web pages or PDF for documents, with styling and formatting rules applied so that the output looks professional and is easy to read.

According to a report by MarketsandMarkets, the global NLG market size is expected to reach US$1.5 billion by 2023, growing at a CAGR of 20% from 2018 to 2023. The increasing demand for automated content generation across various industries is driving this growth. As more companies recognize the value of NLG for improving efficiency and reducing costs, we can expect to see continued advancements in this field.

4. What industries or fields currently utilize NLG technology?

NLG technology is rapidly gaining traction across several industries and fields. Currently, NLG is being used by various sectors to automate tasks that require generating human-like narratives, summaries, and other forms of content.

One industry that heavily relies on NLG is the financial sector. Companies like JPMorgan Chase and American Express are using NLG to generate detailed financial reports and market insights automatically. According to a report by Gartner, the adoption rate of NLG in financial services will grow to 55% by 2025.

Another field that utilizes NLG is e-commerce. Retailers such as Amazon and Walmart use NLG technology to create product descriptions, email marketing campaigns, and personalized recommendations for customers. According to a study by MarketsandMarkets, the global NLG market in the retail and e-commerce sector is expected to reach $1.5 billion by 2023.

Healthcare is another industry set to benefit massively from NLG technology. Healthcare providers are using NLG software to generate diagnostic reports, patient summaries, and treatment plans automatically. According to a report by Tractica, the healthcare NLG market is expected to reach $1.45 billion in revenue globally by 2024.

In conclusion, NLG technology has already made significant strides across industries such as finance, e-commerce, and healthcare, producing compelling results for businesses worldwide. With growing industry-specific expertise and advances in AI-based technologies, the ways we can leverage NLG will keep expanding in the coming years.

5. How does natural language generation differ from other forms of artificial intelligence?

NLG is a subset of AI that focuses on generating human-like language from data. Unlike other forms of AI such as machine learning and deep learning, which mainly focus on pattern recognition and prediction, NLG is more concerned with producing output in natural language, just like how a human would write or speak.

One of the key differences between NLG and other forms of AI is that NLG requires structured data input to generate natural language output. This means that NLG systems need to be trained on specific datasets, typically containing well-defined data structures and relationships between different pieces of data.

Another difference is that NLG is useful for creating personalized content at scale without the involvement of humans. For example, companies can use NLG to automatically generate personalized marketing emails or financial reports based on customer data. This not only saves time but also ensures consistency and accuracy across large amounts of content.

According to a report by MarketsandMarkets, the global NLG market size is projected to grow from US$311 million in 2018 to US$1,471 million by 2023, at a CAGR of 36.5% during the forecast period. This growth is attributed to the increasing demand for automated content generation across various industries, including healthcare, finance, and marketing.
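The reported figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. Plugging in US$311 million (2018) and US$1,471 million (2023) over five years gives roughly 36.4% a year, in line with the reported 36.5%. The helper names below are our own; the inputs come from the figures quoted above.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


print(f"{cagr(311, 1471, 5):.1%}")       # growth rate implied by the two endpoints
print(f"{project(311, 0.365, 5):,.0f}")  # 2023 value implied by a 36.5% CAGR, in US$ millions
```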

In conclusion, NLG differs from other forms of AI in its focus on generating human-like language from structured data input and its ability to create personalized content at scale without human involvement. With the growing demand for automated content creation using tools like On-Page.ai, it’s clear that NLG will continue to play a major role in shaping the future of AI-driven content generation.