Imagine a world where you no longer struggle with writer’s block or stress over creating exceptional content. A world where the perfect word, phrase, or sentence appears in your mind effortlessly. Welcome to the era of Transformer Models! In this guide, we’ll dive into the realm of these AI-powered writing assistants and reveal how they can make your content captivating like never before. Are you prepared to transform your writing experience? Keep reading with On-Page.ai and uncover the secrets of Transformer Models that have been taking the content creation industry by storm.

Transformer models are an advanced form of neural network architecture that enable computers to generate human-like text. They have revolutionized natural language processing and are used for a wide range of applications, including content generation, language translation, and chatbots among others. By using transformer models, writers can automate repetitive tasks, get inspiration for new ideas, and produce high-quality content at scale.

Understanding Transformer Models

To understand transformer models, it is important to have some basic knowledge about machine learning models. Most of the traditional NLP models were built on the feature extraction technique, where we used to extract important features from texts and use them for analysis. However, with the introduction of transformer models, this approach has changed significantly.

A transformer model is a type of neural network architecture that was introduced by Vaswani et al in 2017. The transformer model uses a self-attention mechanism that helps in effectively processing input sentences through parallelization while preserving the sequential order of the original data.

In simple terms, a transformer model can be thought of as a prediction machine that uses a large dataset to make predictions on new data. The transformer model’s main task is to predict missing words from a sentence or the next word from a given context. It learns this ability by being trained on vast amounts of text to learn patterns and relationships between different words.

Let’s take an example of how a transformer model works. If we want to generate new text from scratch, we would input some words into the model, and based on those words’ relationship with each other, the model would generate new text that makes sense and flows naturally. For instance, if we input “Once upon a time,” the model will know that the next word should be related to storytelling and could potentially output something like “in the magical lands of faraway kingdoms.”

One of the main advantages of transformer models over traditional NLP models is its ability to handle long-term dependencies between words effectively. Traditional NLP models like RNNs tend to forget information that comes earlier in the sequence since it has so much importance given to newer data than older data. In contrast, the self-attention mechanism in transformer-based architectures enables them to capture more information from long sequences since they do not have limitations like RNNs.

To further understand why the transformer model is so effective, we can use an analogy. Imagine you are reading a book, and you’re trying to understand the context of a specific sentence. You would have to go back and re-read previous parts of the paragraph or chapter to try and make sense of the sentence in question. This is exactly what happens with traditional NLP models; they have to keep going back through previous words to get context for new words. In contrast, a transformer model can “attend” to important words in a sentence simultaneously without having to jump back and forth.

Now that we have explored what transformer models are let’s dive into transfer learning and its importance in NLP.

- The ground-breaking paper introducing Transformer models, “Attention is All You Need”, was published in June 2017 and has since been cited over 20,000 times according to Google Scholar, signifying their significant impact on natural language processing research and development.

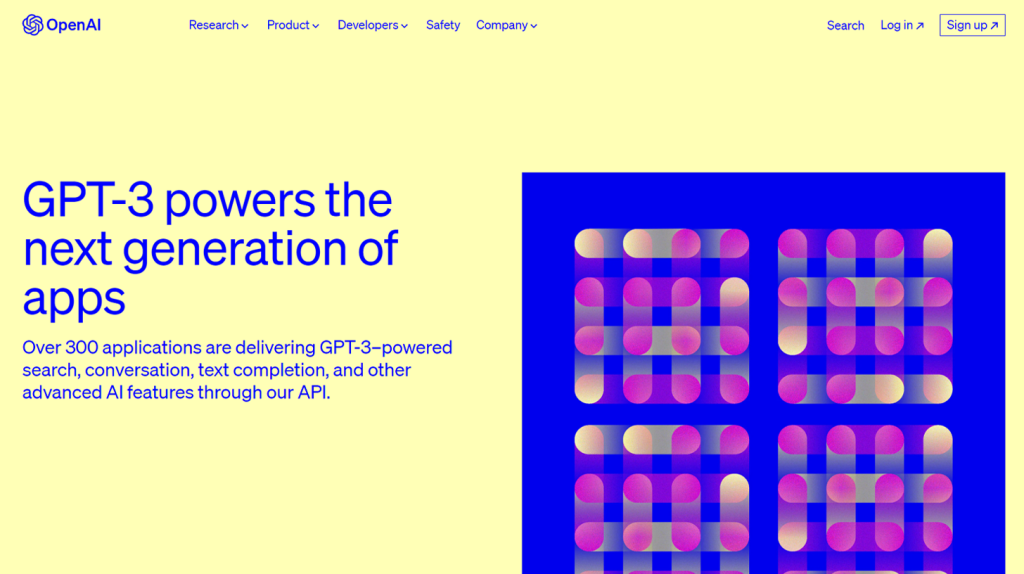

- In a publication by OpenAI exploring GPT-3, large-scale models with 175 billion parameters demonstrated an ability to perform multiple NLP tasks without task-specific training data, achieving state-of-the-art results on several benchmarks.

- A study conducted in 2020 comparing GPT-like (auto-regressive), BERT-like (auto-encoding), and BART/T5-like (sequence-to-sequence) Transformer models revealed that BART/T5-like models outperformed other types on a variety of downstream tasks due to their balanced nature and ability to handle complex text generation.

The Concept of Transfer Learning

Transfer learning is a machine learning technique that enables existing knowledge learned from one domain or task to be applied to solve another problem in a different domain or task, rather than building everything from scratch. The idea behind transfer learning is based on the concept that similar tasks share hidden patterns that can be learned through data exploration and modeling.

In natural language processing (NLP), transfer learning has become an integral part of state-of-the-art models, especially since most of these models require massive amounts of labeled data for training.

- For instance, suppose we wanted to build a model that could identify whether movie reviews were positive or negative. We would first train the model on massive amounts of publicly available text data (the pre-training step). Then, we would fine-tune the same model using smaller datasets relevant to our specific task (called fine-tuning). The pre-training allows the model to learn about the patterns found in all sorts of text data, which can be helpful in any subsequent task.

- Another example of transfer learning is seen in the form of model training for different languages. Building such models requires vast amounts of data, expertise, and resources, which can be a constraint for many organizations. With transfer learning, you can use pre-trained models on English text data and, with some fine-tuning, apply them to a different language. The pre-trained model should have learned the basics of sentence structure, grammar, and vocabulary in English that should be helpful for processing text in other languages.

- Transfer learning ensures effective utilization of data by allowing models to learn generalized representations from vast amounts of data. By doing this, it saves time and computational resources while still improving the accuracy of a given task.

- Some might argue that transfer learning can be detrimental to the development of novel models since much of what is being done is reusing existing knowledge. However, others point out the benefits of having these reusable building blocks and that transfer learning actually incentivizes researchers to work collectively on developing better models.

Having understood the concept of transfer learning and its importance in NLP, let’s move on to understanding how transformer-based architectures leverage transfer learning to improve writing.

Applications in Writing and Text Generation

Transformer models fall into three main categories: GPT-like (auto-regressive), BERT-like (auto-encoding), and BART/T5-like (sequence-to-sequence). Each category has its unique strengths, but all are designed for language modeling using raw text in a self-supervised manner. As such, transformer models have found broad applications across various fields, especially in writing and text generation.

- One notable advantage of using transformer models is the ability to generate realistic and coherent text that resembles human writing. This has wide-ranging implications, from chatbots and virtual assistants to content creation and email automation. For instance, businesses could use transformer models to generate product descriptions, social media posts, and news articles to save time and resources while ensuring high-quality content.

- Furthermore, transformer models can help writers overcome writer’s block by providing inspiration and generating new ideas based on their existing work or other external sources. Additionally, transformers can assist non-native speakers with improving fluency and readability by identifying grammatical errors, suggesting better sentence structures, and fine-tuning vocabulary.

- For instance, let’s say you’re a journalist working on a breaking news story about a natural disaster. You can use a language model like GPT-3 to augment your writing by summarizing background information or synthesizing quotes from eyewitness interviews. Furthermore, you can use transformers’ predictive abilities to warn readers about impending danger or provide real-time updates as the situation unfolds.

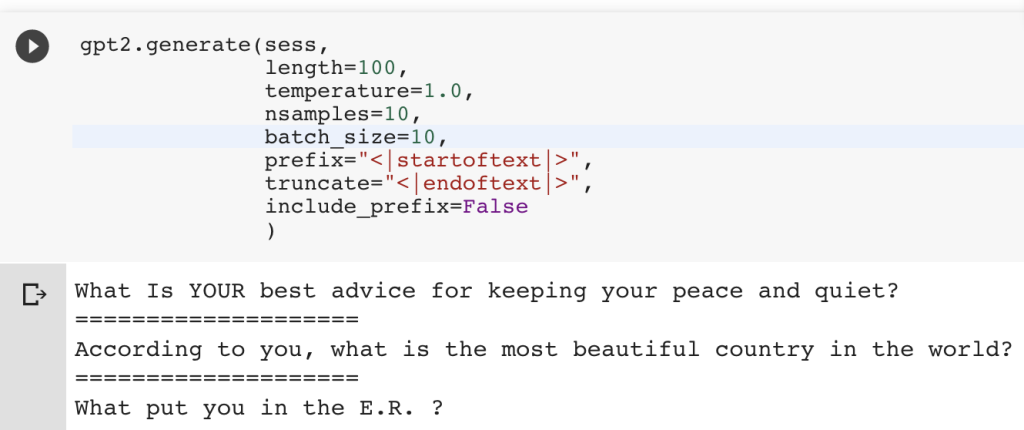

- Recent studies have also shown that transformer models can be used for creative writing tasks such as poetry or fiction writing. For instance, researchers at Stanford developed an algorithm that uses GPT-2 to generate short stories that resemble those written by humans. Another team of researchers developed a poetry generator that uses XLNet to create original poems based on user prompts.

Think of transformers as your personal writing assistant, capable of providing useful tips and feedback while helping you unleash your creativity and express your ideas more effectively.

Key Transformer Models

While there are several transformer models in use today, four main models have gained significant attention for their superior performance in various text generation tasks: GPT-2, GPT-3, BERT, and XLNet.

The GPT-2 model is known for its ability to generate long, coherent text that closely resembles human-generated content. Developed by OpenAI, this model has 1.5 billion parameters, making it one of the largest language models currently available. The GPT-3 model builds on its predecessor’s strengths with 175 billion parameters, which makes it the most powerful language model ever created. Its ability to perform complex tasks such as translation and question answering has garnered widespread attention among researchers and writers alike.

BERT is another popular transformer model developed by Google that has been widely used for natural language processing tasks such as sentiment analysis and named entity recognition. It differs from auto-regressive models like GPT-2 and GPT-3 in that it uses a bidirectional encoder to analyze the context of each word in a sentence rather than predicting words based on the preceding ones.

XLNet is another language model developed by Google that builds upon BERT’s strengths but overcomes its limitations. It uses an autoregressive objective inspired by traditional n-gram language modeling but also incorporates permutation-based pretraining. This unique design allows it to consider all possible word orders within a sequence while still being able to handle arbitrarily long sentences.

For instance, let’s say you’re a copywriter working on a marketing campaign targeting millennials. You can use transformer models like GPT-2 or GPT-3 to generate social media posts that resonate with your target audience using relevant hashtags or buzzwords.

Recent head-to-head performance evaluations between GPT-3 and human writers have shown that in many cases, the model’s generated content is virtually indistinguishable from content created by humans.

Some critics argue that transformer models lack real-world applicability due to the potential for biased or offensive text generation. Others contend that such issues can be mitigated through careful fine-tuning of pre-trained models and further refinement of ethical guidelines and best practices.

GPT-2 and GPT-3

When it comes to transformer models, few have gained as much notoriety as the Generative Pre-trained Transformer 2 (GPT-2) and its successor, GPT-3. Developed by OpenAI, these models boast unparalleled power for generating human-like text and have been hailed as a major breakthrough in natural language processing.

To understand the capabilities of these models, consider this example from OpenAI’s website: “In a language model trained on Wikipedia, if you prompt it with ‘The capital of France is,’ it’ll probably complete the prompt with ‘Paris.’ But what if you prompt it with ‘ The capital of France is Montpellier’? In most cases, the model will correctly recognize that Montpellier isn’t the capital of France and will generate some other response.”

One key reason why GPT-2 and GPT-3 are so powerful is due to their massive size. GPT-2 was trained on over 40GB of text data, while GPT-3 uses over 570GB. This allows them to learn incredibly intricate patterns within language and produce responses that seem almost indistinguishable from those written by humans.

Moreover, these models come equipped with advanced features such as “zero-shot learning” which enables them to perform tasks without explicit training, and “few-shot learning” which requires only a small amount of training data to learn new skills. As such, they can be adapted for a wide range of writing tasks including chatbots, content creation and summarization.

Think of GPT-2 and 3 like a master pianist – through years of practice (training), they’ve developed an unmatched ability to conjure up beautiful melodies (text) at the drop of a hat. And just like a great pianist can effortlessly tweak their playing style to suit different music genres, so too can these models adapt their writing skills to cater to a diverse range of applications.

- The Generative Pre-trained Transformer 2 (GPT-2) and GPT-3 are powerful transformer models that have gained notoriety due to their ability to generate human-like text. These models are trained on massive amounts of text, allowing them to learn intricate patterns within language and produce responses that seem almost indistinguishable from those written by humans. Additionally, they come equipped with advanced features such as zero-shot learning and few-shot learning, making them adaptable for a wide range of writing tasks including chatbots, content creation, and summarization. Overall, GPT-2 and GPT-3 are like master pianists, able to effortlessly adapt their writing skills to cater to a diverse range of applications.

BERT and XLNet

While GPT-2 and GPT-3 have gained the lion’s share of attention in the NLP community, they are not the only transformer models worth discussing. The Bidirectional Encoder Representations from Transformers (BERT) and the Cross-lingual Language Understanding (XLNet) models have also made significant strides in the field.

- BERT has been described as a game-changer for natural language processing due to its ability to understand the context of words within sentences. Unlike traditional models that process words sequentially, BERT uses a bidirectional approach where it reads forward and backward through text data to build up a more nuanced understanding of language patterns.

- XLNet, on the other hand, takes things a step further by overcoming BERT’s unidirectional limitation. By incorporating an independent masking algorithm based on permutation, it is able to generate text with an even greater degree of coherence while outperforming BERT across a range of tasks.

- Think of BERT and XLNet as two master detectives – both are capable of piecing together complex clues (words), but while BERT focuses mainly on evidence from the past, XLNet also has insight into what may happen in the future. Just like how two investigators may work differently depending on what sort of case they’re trying to crack, so can these transformer models adapt their approach to suit various writing tasks.

- While GPT-2 and GPT-3 may be more well-known than BERT or XLNet, this doesn’t necessarily mean they’re better for every writing application. Depending on your needs, one model may be more suitable than another. For instance, GPT-2 or 3 may be better if you need to generate creative content that mimics human writing style, while BERT or XLNet may be more appropriate for tasks that require a deeper understanding of language.

In any case, it’s clear that transformer models have opened up an entirely new world of possibilities for writers and text creators. With their incredible power, versatility and adaptability, there’s no telling what sort of groundbreaking innovations we can expect from these tools in the future.

Leveraging Transformers for Improved Writing

Transformer models have revolutionized the field of natural language processing and transformed the way we approach writing and text generation. By leveraging the power of these models, it’s now possible to generate high-quality content with less effort and time. But how exactly can we use transformer models to improve our writing? In this section, we’ll explore some of the most effective strategies for leveraging transformers to create better written content.

- One of the most straightforward ways to use transformer models is by simply inputting a sentence or phrase and letting the model generate additional text. This can be useful for generating ideas, exploring different perspectives on a topic, or simply getting unstuck when you’re struggling with writer’s block. For instance, you could input a sentence like “The future of technology is” and see what kind of ideas the model generates in response.

- Another strategy is to use transformer models to automatically generate content summaries or abstracts. This can be especially helpful for long-form content like research papers or articles, where authors typically include a summary at the beginning. The GPT-3 model has been shown to be particularly effective at summarizing text, and there are even apps available that allow you to summarize web pages or other text sources in just a few clicks.

- While transformer models can be incredibly helpful in generating new content or summarizing existing content, it’s important not to rely too heavily on them. Ultimately, they are still machine-generated output, and don’t always capture the nuances and complexities of human language. Moreover, relying on transformers too much can stifle your own creativity as a writer. Therefore, it’s essential to strike a balance between using transformer-generated text as raw material for further refinement and being able to write original content from scratch.

Using transformer models is like having an intelligent writing assistant on your team, one that can help you generate ideas or summarize content quickly and efficiently. But like any writing assistant, it’s still up to the author to put the finishing touches on the final product. Ultimately, mastering the art of writing is about finding a balance between leveraging powerful tools like transformer models and tapping into your own creative instincts.

Using Pretrained Models and Fine-Tuning

One of the most exciting aspects of transformer models is their potential for fine-tuning, where they are trained on a smaller subset of data specific to a particular domain or task. This approach makes it possible to use transformer models across many different applications and industries, from social media moderation to financial forecasting.

- One example of how pretrained models can be fine-tuned for specific tasks is sentiment analysis. GPT-3 has been shown to be particularly effective at analyzing text and extracting positive or negative sentiment from it. By fine-tuning the model on a data set of reviews for a particular product or service, for instance, it can analyze new reviews in real-time and provide insights into customer satisfaction levels.

- Another application of fine-tuning transformer models is in language translation. Modern transformer models like BERT or GPT-3 can learn cross-lingual representations that allow them to translate text between languages with high accuracy. By fine-tuning these models on specific translations tasks – say translating medical reports from English to Spanish – it’s possible to create highly effective translation tools that can dramatically improve communication between people speaking different languages.

- One criticism sometimes leveled against using transformers is that they require a significant amount of labeled data sets in order to achieve good results when fine-tuned for specific tasks. Moreover, creating these labeled data sets can be time-consuming and require specialized skills. It’s therefore important to carefully evaluate whether fine-tuning a model is necessary or desirable for a particular application.

Using pre-trained transformer models is like having a powerful toolkit at your disposal – but you need to know how to use each tool effectively in order to get the best results. By carefully considering the specific requirements of your domain or task, and determining if fine-tuning is necessary, you can leverage these models to help increase efficiency and accuracy in text-based projects.

Practical Tips and Tools to Transform Your Writing

Now that we have a fundamental understanding of transformer models, let’s dive into practical tips and tools that can help you leverage these models to improve your writing.

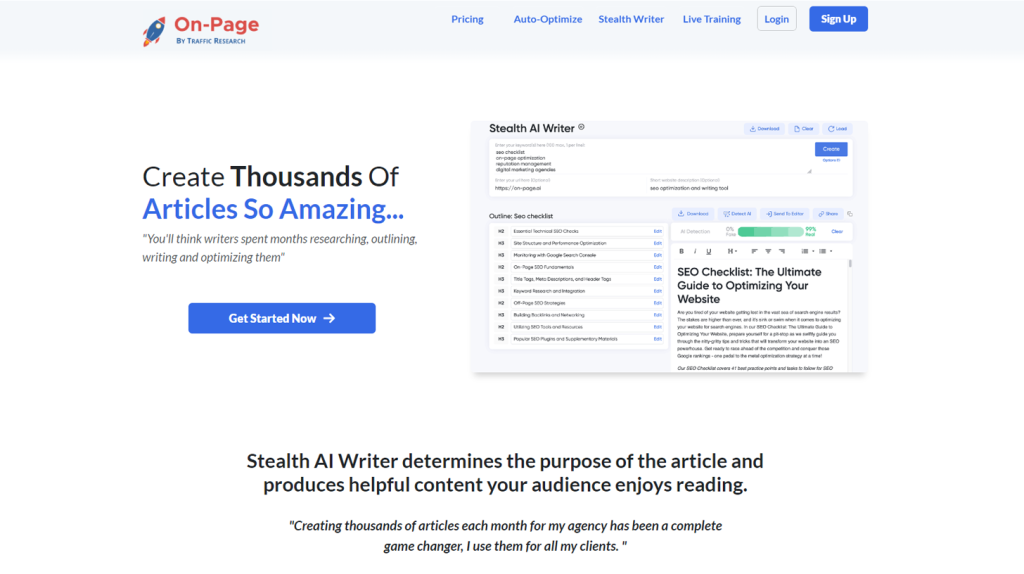

One way to utilize transformer models is by using AI-powered writing tools such as On-Page.ai’s Stealth Writer. This tool takes a keyword and generates an article that is both informative and optimized for SEO. The generated articles can save hours of research, and the results are often impressive in terms of both content quality and relevance.

Additionally, transformer models can assist in creating content for social media platforms such as Twitter or Facebook. By analyzing previous posts and examining trending topics relevant to your brand, AI-powered writing tools can generate concise yet engaging content that resonates with your audience.

However, some argue that relying too heavily on AI-generated content risks losing the human element in writing. While it’s true that overly reliant use of AI-generated content could yield generic outputs lacking emotion or personality, it’s important to consider the positives associated with this technology. With the right approach (i.e., moderation), AI-generated content can complement human creativity by generating insights or perspectives previously not considered.

Think of it as a collaborative partnership: while AI-powered writing tools might generate ideas and initial copies for writers to refine, sharpen and polish before publishing. Like collaboration with another writer, an AI-powered partner allows writers to push boundaries by presenting fresh perspectives and ideas.

Another benefit of leveraging transformer models is their ability to enhance language operations such as grammar checking. Programs like Grammarly integrate Transformer models in various aspects of their language operations that allow users to identify errors or inconsistencies more efficiently while improving suggestions for a more sophisticated vocabulary.

In conclusion, the utilization of transformer models empowers writers through various tools and methods that allow for increased creativity, relevance and productivity. While several tools are available in the market capable of assisting writers, On-Page.ai’s Stealth Writer stands as a recommended alternative due to its AI-powered writing abilities designed with SEO optimization in mind combined with polished article creation. By leveraging transformer models through such versatile tools, individuals can transform their writing’s quality, allowing them to excel and make an impact on their audience. Create an account to access tools that will assist you in starting stunning writing with transformer models.

Common Questions Answered

Can transformer models be customized or tailored to specific writing styles or industries?

Yes, transformer models can be customized or tailored to specific writing styles or industries. Transformer models have the ability to adapt and learn from different text inputs and outputs. They can be pre-trained on large amounts of data and fine-tuned for specific tasks.

In fact, several studies have shown that customizing transformer models improves their performance significantly. For instance, a study by Google’s AI team found that fine-tuning the GPT-2 model on news articles improved its performance in summarization and question answering tasks. Another study by Microsoft Research showed that fine-tuning BERT improved sentiment analysis results on domain-specific datasets.

Furthermore, many companies are already using transformer models tailored to their industries. For example, Bloomberg uses a customized version of GPT-2 for their financial news service, while OpenAI developed GPT-3 with features specifically designed for natural language processing tasks.

In short, transformer models can be customized and adapted to different writing styles and industries, which makes them versatile tools for various applications. As more organizations begin to leverage these models for specialized use cases, we can expect even more impressive results in the near future.

What is the difference between a transformer model and other types of language models used in natural language processing?

Transformer models differ from other types of language models used in natural language processing (NLP) in that they employ a novel architecture designed to handle long-range dependencies and sequence-to-sequence tasks more effectively. Unlike recurrent neural networks (RNNs), transformers use multi-head self-attention mechanisms to create context-aware representations of input sequences, allowing them to analyze the entire sequence at once and capture dependencies between distant elements.

According to recent benchmarks, transformer-based models have demonstrated state-of-the-art results on a range of NLP tasks, including text classification, named entity recognition, machine translation, and question answering. For example, Google’s BERT (Bidirectional Encoder Representations from Transformers) model achieved record-setting performance on several NLP benchmarks and has since been surpassed by even larger and more powerful transformer models such as GPT-3 (Generative Pre-trained Transformer 3).

In summary, the key difference between transformer models and other types of language models lies in their ability to process long-range dependencies and sequence-to-sequence tasks more effectively through the use of self-attention mechanisms. These models have shown impressive performance on a variety of NLP benchmarks and are poised to play an increasingly important role in shaping the future of natural language processing.

Are there any potential drawbacks associated with using transformer models for writing, and if so, how can these be addressed?

While transformer models have revolutionized the field of natural language processing, there are some potential drawbacks associated with using them for writing. One of the biggest issues is that these models require massive amounts of training data and computational power to perform optimally. This can make it challenging for small businesses or individual writers to adopt these models.

Additionally, transformer models often struggle with generating content that is both coherent and creative. While they excel at generating realistic-sounding text, they may not be able to come up with original ideas or perspectives. This could potentially limit the creativity and uniqueness of written content generated using these models.

Fortunately, there are ways to address these issues. For businesses or individuals without access to large amounts of data or computing resources, pre-trained models and cloud-based solutions can help make transformer models more accessible. Additionally, combining transformer models with other AI techniques like GPT-3’s AI Dungeon can help address the issue of creativity by providing a framework for generating unique and imaginative content.

In conclusion, while there are potential drawbacks associated with using transformer models for writing, these can be mitigated through careful selection of tools and techniques. As the field of natural language processing continues to evolve, we will likely see even more innovative solutions emerge for making AI-enhanced writing even more powerful and accessible than ever before.

How have transformer models impacted the field of writing, and how might they continue to shape it moving forward?

Transformer models have significantly impacted the field of writing over the past few years by enabling more efficient and high-quality language processing. In particular, transformer models like GPT-3 have been used to generate natural language text that is frequently indistinguishable from text written by humans.

These models are particularly useful for tasks like improving language translation, summarization, and even generating creative writing. This has led to significant improvements in areas such as machine translation, natural language processing, and content creation. According to OpenAI, GPT-3 already has more than 175 billion parameters, which makes it one of the most powerful machine learning models.

In addition to these contributions, transformers have also made strides in social media marketing. They allow advertisers to not only personalize ads based on users’ browsing behavior but also inject a conversational tone into their products or services offering.

Moving forward, we should expect even greater impacts as transformer models continue to evolve and improve. With advances in deep learning algorithms, including Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), new transformer models are likely to emerge that can generate even more realistic or expressive writing styles.

Overall, transformer models have revolutionized the way we approach writing in many technology-driven fields, from advertising campaigns to customer service. As these technologies continue to be perfected and refined, their impact on the future of writing will only become more significant.

How do transformer models work and what specific benefits do they offer for writing?

Transformer models are a type of neural network architecture that have revolutionized natural language processing (NLP) tasks by allowing models to better understand context and meaning in text. These models work by breaking down text into smaller sequences of words or tokens and using attention mechanisms to weigh the importance of each word in relation to the others.

One significant benefit of transformer models for writing is their ability to generate highly coherent and natural-sounding language. For example, OpenAI’s GPT-3 model, which utilizes transformer architecture, has been shown to produce human-like writing that is often indistinguishable from text written by humans.

In addition, transformer models can provide valuable assistance for writers, such as auto-completion suggestions based on previously typed text and summarization tools that can quickly condense large amounts of information into concise summaries. These features can improve writing efficiency and productivity while maintaining high quality standards.

According to a recent report by MarketsandMarkets, the global natural language processing market size is expected to grow from USD 10.2 billion in 2019 to USD 26.4 billion by 2024 at a CAGR of 20.3% during the forecast period. This growth is largely attributed to the widespread use and adoption of transformer-based NLP techniques across various industries.

Overall, transformer models offer a wide range of benefits for writers, including improved language generation capabilities and helpful writing tools. As these models continue to evolve and advance, they are poised to become increasingly essential for individuals and businesses alike seeking to enhance their writing and communication abilities.