Picture this: you’re stranded on a deserted island with no hope of rescue. However, you’ve found an ancient treasure map that contains the secret to escape. The only problem? It’s written in a complex language you cannot decipher. Imagine having the power to unlock this cryptic message and save yourself in the nick of time! Language models are the key to deciphering such enigmatic codes. In today’s digital world, their potential goes beyond mere survival stories as they revolutionize industries and redefine the way we communicate. Ready to crack the code? Dive into the exciting realm of language models and discover their game-changing capability in SEO and beyond!

A language model is a statistical model used to analyze and understand natural language. These models are designed to predict the likelihood of a sequence of words occurring in a given context, making them crucial for applications such as speech recognition, machine translation, and text generation. Language models come in many types, including unigram, n-gram, bidirectional, exponential, and continuous-space models.

What is a Language Model?

In the world of natural language processing, one of the most important concepts to understand is that of a language model. Put simply, a language model is a statistical tool that predicts the likelihood of a given sequence of words occurring in a sentence or text. It’s essentially a way of teaching machines to understand and generate human language.

To give an example, imagine you’re typing out an email and you type “Dear Joh”, but then pause for a moment before finishing with “n.” Even though you only typed part of John’s name, your email client might be able to predict what you were going to type next and suggest the word “John” for you. This is because it has been trained on a large corpus of text data and understands which words commonly occur together.
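As a rough illustration of this kind of suggestion, here is a minimal Python sketch that completes a partial word using word counts. The tiny corpus and the `suggest` function are hypothetical and made up for this example; a real email client would rely on a far larger model.

```python
from collections import Counter

# Hypothetical toy corpus; a real system would learn from far more text.
corpus = "dear john dear john dear joan hello john dear team".split()
word_counts = Counter(corpus)

def suggest(prefix):
    """Return the most frequent known word starting with prefix, or None."""
    prefix = prefix.lower()
    candidates = [w for w in word_counts if w.startswith(prefix)]
    if not candidates:
        return None
    return max(candidates, key=lambda w: word_counts[w])

print(suggest("Joh"))  # prints "john"
```

The suggestion is simply the most common word that matches what has been typed so far, which is the same idea at a much smaller scale.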

Language models are used extensively in applications that involve processing and generating natural language, such as machine translation, speech recognition, and chatbots. They can also be used in information retrieval tasks, such as search engines that try to match user queries with relevant web pages.

While language models have advanced significantly in recent years thanks to the rise of deep learning techniques like neural networks, there are still challenges to be overcome. One key issue is context: while humans are generally able to understand when certain words or phrases have different meanings depending on the surrounding context, machines can struggle with this kind of ambiguity.

Think about the word “bat”: without any additional context, it could refer to either the flying mammal or the piece of sports equipment used in baseball. A human reader would likely be able to tell which meaning was intended based on the other words around it – “flying” or “hit,” for example – but a machine would need more sophisticated modeling techniques in order to make that determination.
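A very simple way to picture this disambiguation is to count how many nearby words point to each meaning. The sketch below is only an illustration: the cue-word lists in `SENSE_CUES` and the `guess_sense` function are hand-picked assumptions, not how modern language models actually resolve ambiguity.

```python
# Hypothetical cue words for each sense of "bat", chosen by hand for illustration.
SENSE_CUES = {
    "animal": {"flying", "cave", "wings", "nocturnal"},
    "sports equipment": {"hit", "ball", "baseball", "swing"},
}

def guess_sense(sentence):
    """Pick the sense whose cue words overlap most with the sentence."""
    words = set(sentence.lower().replace(".", "").split())
    scores = {sense: len(words & cues) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # With no cue words at all, the context gives no evidence either way.
    return best if scores[best] > 0 else "unknown"

print(guess_sense("The bat was flying out of the cave."))  # prints "animal"
print(guess_sense("He hit the ball with the bat."))        # prints "sports equipment"
```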

- According to the 2020 research paper that introduced it, OpenAI’s GPT-3, a state-of-the-art language model at the time, has approximately 175 billion parameters, significantly more than its predecessor GPT-2, which had around 1.5 billion parameters.

- A study published in 2018 found that incorporating attention mechanisms into sequence-to-sequence neural network-based language models could lead to an average of 20% improvement in translation quality.

- In 2020, it was reported that fine-tuned BERT (Bidirectional Encoder Representations from Transformers), a pre-trained language model developed by Google AI, achieved state-of-the-art results on several NLP benchmarks and exhibited generalization across multiple domains and tasks.

Approaches to Language Modeling

There are a number of different approaches to language modeling, each with its own strengths and weaknesses. One of the most common is statistical modeling, which involves calculating probabilities based on historical data.

For example, an n-gram model might look at which words commonly occur together in a given corpus of text data and use that information to assign probabilities to different word sequences. If it had seen the sentence “the cat sat on the mat” before, it would predict that the sequence “cat sat on” is more likely than “dog sat on,” since “cat” and “sat” are frequently used together.
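The counting idea described above can be sketched in a few lines of Python. This is a minimal bigram (2-gram) example under stated assumptions: the toy corpus and the `bigram_prob` helper are hypothetical, and a real model would be estimated from a much larger collection of text.

```python
from collections import Counter

# Hypothetical toy corpus standing in for a large text collection.
corpus = "the cat sat on the mat . the cat sat on the sofa . the dog ran".split()

unigrams = Counter(corpus)
bigrams = Counter(zip(corpus, corpus[1:]))

def bigram_prob(prev, word):
    """Estimate P(word | prev) as count(prev, word) / count(prev)."""
    if unigrams[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / unigrams[prev]

print(bigram_prob("cat", "sat"))  # "sat" follows "cat" every time in this corpus
print(bigram_prob("dog", "sat"))  # never observed here, so the estimate is zero
```

Because "cat sat" appears in the corpus and "dog sat" does not, the model scores the first sequence as more likely.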

Another, more recent approach is to use neural networks and deep learning techniques to generate language models. These models are often based on word embeddings, which map words to a continuous vector space such that semantically similar words are closer together.
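To show what "closer together" means in practice, here is a small sketch that compares word vectors with cosine similarity. The three-dimensional vectors are invented for illustration only; learned embeddings typically have hundreds of dimensions and come from training, not from hand-written numbers.

```python
import math

# Hypothetical 3-dimensional embeddings made up for illustration.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])
print(cat_dog > cat_car)  # prints True: "cat" is closer to "dog" than to "car"
```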

While neural network-based models can be more powerful and flexible than traditional statistical approaches, they can also be much more computationally expensive to train. Additionally, they may struggle with long-term dependencies – relationships between words or phrases that occur further apart in the text – if not designed properly.

Think about trying to understand a lengthy novel by only reading one sentence at a time: while you might be able to get some sense of the story from individual sentences, it’s difficult to fully understand everything that’s going on without looking at the broader context.

Statistical Modeling

Statistical modeling is a traditional approach to language modeling and has been used for quite some time to interpret language data. It is based on probabilistic methods and involves analyzing the probability of a word given its context, usually in the form of n-grams. The basic concept behind statistical language models is that they compute the chance that each possible sequence of words exists in the data set.

Let us consider an example to understand this better. Suppose we have a sentence – “The quick brown fox jumps over the lazy dog.” A statistical model will examine each n-gram in this sentence (i.e., short sequences of adjacent words) and estimate their probabilities based on how frequently they occur together in some corpus of text. For instance, it will calculate how often the phrase “the quick brown” appears, as well as how often “brown fox jumps”, etc.
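The first step, pulling the n-grams out of the sentence, might look like the sketch below. The `ngrams` helper is a hypothetical name written for this example; counting how often each n-gram occurs in a larger corpus would follow the same pattern.

```python
def ngrams(text, n):
    """Return the list of n-grams (as tuples of lowercase words) in text."""
    words = text.lower().rstrip(".").split()
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

sentence = "The quick brown fox jumps over the lazy dog."
trigrams = ngrams(sentence, 3)

for gram in trigrams:
    print(" ".join(gram))
```

For this nine-word sentence the loop prints seven trigrams, starting with "the quick brown" and including "brown fox jumps".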

Despite being rudimentary, statistical models are still widely used today because of their simplicity, speed, and efficiency. They work well when trained on vast amounts of data. However, they cannot capture non-local dependencies between words, and they struggle with rare events: any word sequence that never appears in the training data receives a probability of zero unless a smoothing technique is applied.
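One common way to soften the rare-event problem is add-one (Laplace) smoothing, which is not described in this article but is a standard technique for n-gram models. The sketch below is a minimal illustration with a hypothetical toy corpus and a hypothetical `smoothed_prob` helper.

```python
from collections import Counter

# Hypothetical toy corpus; "dog" is in the vocabulary but never appears in it.
corpus = "the cat sat on the mat".split()
vocab = set(corpus) | {"dog"}

unigrams = Counter(corpus)
bigrams = Counter(zip(corpus, corpus[1:]))

def smoothed_prob(prev, word):
    """Add-one estimate of P(word | prev): every bigram gets one extra count."""
    return (bigrams[(prev, word)] + 1) / (unigrams[prev] + len(vocab))

print(smoothed_prob("the", "cat"))  # seen once after "the"
print(smoothed_prob("the", "dog"))  # never seen, but no longer zero
```

The unseen pair "the dog" now gets a small non-zero probability instead of being ruled out entirely, at the cost of slightly lowering the estimates for pairs that were observed.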

Critics argue that statistical models do not fully capture other nuances such as context or complexity within natural language processing. This makes them useful in certain areas but limits their overall potential because they lack the ability to make sense out of more complex syntax and vocabulary.

Nonetheless, these types of statistical models remain relevant or even increasingly valuable in machine learning applications because they offer a cost-effective way to evaluate word probability rankings from virtually any kind of corpus.

- Statistical language modeling is a traditional approach to analyzing language data that estimates the probability of word sequences based on n-grams. Despite being simple and efficient, it struggles with non-local word dependencies and rare events. While such models cannot capture complex syntax and vocabulary, they remain valuable in machine learning applications because they are cost-effective and quick to train on large corpora.

Neural Networks and Deep Learning

Neural networks are loosely modeled on the brain’s structure: nodes (neurons) arranged in layers carry out computations. The basic premise is that machines can learn to classify and sort from training data, building layers of abstraction and knowledge that improve over time through a deep learning approach.

Going back to our previous example, suppose we want to teach a computer to recognize the phrase “The quick brown fox jumps over the lazy dog.” Through deep learning, neural networks will start with a basic understanding of language and learn how words fit together in sentences, improve accuracy by analyzing the structure of human language, and iterate on their approach again and again.

Neural networks have become popular in recent years because they can learn useful representations of language directly from data instead of relying on hand-written rules. They have reshaped natural language processing by automating much of the work previously done by linguists.

Critics argue that one disadvantage of neural networks is the need for vast quantities of data for accurate analysis. As powerful as they may be, neural networks require large data sets and robust computation power which can prove problematic for some industries or smaller applications.

A neural network is like an art student who learns from examining dozens of paintings before creating their own masterpiece. The student might learn about color choice from one painting, lighting from another painting, composition from another painting until finally reaching a point where they understand everything about something they previously knew nothing about.

Despite their drawbacks, neural networks remain widely used today due to their ability to learn independently while providing a cost-effective method for organizations looking for ways to improve their language modeling processes.

Benefits and Applications of Language Models

Language models have come a long way in recent years, and with the rise of artificial intelligence (AI) and machine learning (ML), their benefits are becoming more apparent. Exploiting language models in digital contexts empowers businesses to scale their data processing and analysis capacities and develop insights that may have been previously impossible.

One major benefit of language models is their ability to parse natural language inputs, processing and interpreting raw data quickly to identify context and meaning. As such, they can be used in a variety of products that involve text or voice interaction. Be it e-commerce platforms, intelligent assistants, or search engines, language models help provide better user experiences while driving engagement.

Another application of language models is text generation. Companies such as The Washington Post have already adopted AI-powered writing software to generate articles at scale for fast-paced newsrooms. This not only reduces time-consuming tasks but also frees up resources for human writers to include more research-driven analysis.

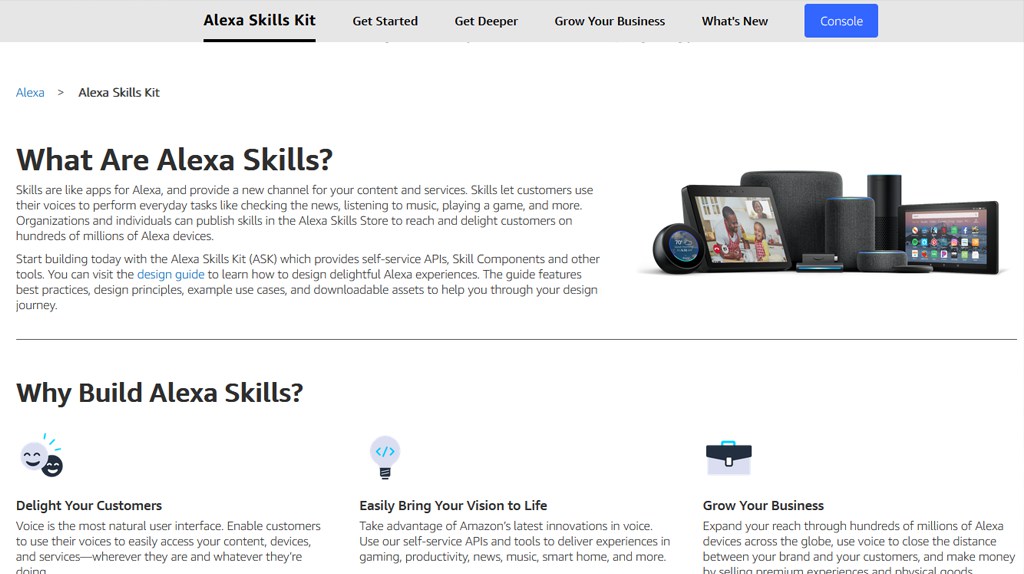

Language models have also become invaluable tools in speech recognition technology. Improvements made over the past few years mean that virtual assistants such as Siri or Alexa can now understand more nuanced commands and colloquial expressions. They can then perform tasks like scheduling appointments or sending emails accordingly.

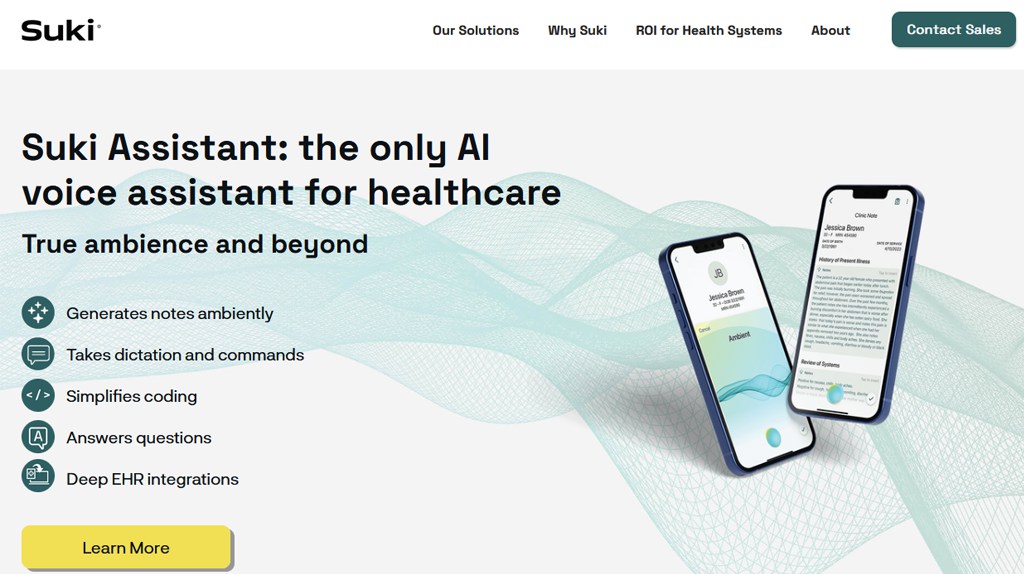

Another great example of how language models are being employed is within the medical industry. Many healthcare providers are adopting these models to assist physicians with transcription services that convert handwritten notes into electronic records with high accuracy. Moreover, some researchers are using natural language processing techniques to predict hospital readmissions based on patient visits or to extract meaningful data from electronic medical records.

The versatility of language models extends further than just customer-facing applications; they can also be used as powerful behind-the-scenes tools for automation and data processing. When utilized correctly, they enable businesses to optimize processes by surfacing hidden patterns within vast datasets.

However, there are still debates about privacy and ethics when it comes to language models. Some argue that AI technology can be biased or fail to recognize appropriate contexts, leading to certain groups being excluded or treated unfairly.

Language models are like digital assistants. In the same way that a personal assistant helps with daily organization and management tasks, language models empower businesses by providing insights, scaling data analysis, and expediting specific processes.

Industries Leveraging Language Models

The explosion of AI in recent years has led to its use across several industries. The applications of language models are particularly far-reaching; they can be utilized anywhere there are large volumes of text and voice data available.

– In finance: Language models read through vast amounts of financial text data, making informed trading decisions based on sentiment and informational signals.

– In the legal industry: Law firms leverage these models for contract analysis where the software can summarize long documents quickly while highlighting important clauses.

– In advertising: Agencies can better understand their customers’ intent behind queries when keywords may not directly lead to the desired outcome through search ads.

– In customer support: By analyzing customer emails and chatbot conversations, language models can highlight core issues for each question category in order to improve response accuracy.

Take Suki.ai as an example: a voice-enabled digital assistant designed specifically for physicians. It allows doctors to dictate patient encounters hands-free rather than typing into electronic health record systems, increasing efficiency and allowing medical staff to focus more quality time on patient care.

The potential applications for language models are truly vast; as new applications emerge – thanks largely to futuristic initiatives such as self-driving cars – it’s likely that we will see even more innovative uses of this technology across multiple verticals going forward.

As with any emerging tech sector, some experts raise fundamental concerns about safety, privacy, and data security. It’s crucial to continue investing in responsible AI development practices, including robust cybersecurity measures, ensuring transparency in data handling and monitoring for any potential biases.

Using language models is akin to having a superpower that automates many of the tedious tasks involved with analyzing text data. By knowing how they can be applied, businesses can unlock new ways of capitalizing on large volumes of data automatically and accurately.

Challenges in Developing Language Models

Language models have proven to be highly effective when it comes to dealing with text-related tasks. However, developing a high-performing language model is not an easy task. There are challenges that researchers and engineers have been facing for years.

One of the most common problems they encounter is data quality. The quality of data has a direct impact on the performance of the language model. For instance, if the dataset you are working with has noisy or incomplete data, it may affect the accuracy and effectiveness of the model. This is why data cleaning and preprocessing are crucial. Engineers must ensure that they extract relevant and useful information from their datasets before using them to train their models.
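A minimal sketch of what such cleaning might involve is shown below. The specific steps (stripping HTML tags, collapsing whitespace, lowercasing, and dropping empty or duplicate records) are assumptions chosen for illustration, and the `clean_text` and `clean_dataset` helpers are hypothetical; real pipelines are tailored to the dataset at hand.

```python
import re

def clean_text(raw):
    """Strip HTML tags, collapse whitespace, and lowercase a raw text snippet."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    collapsed = re.sub(r"\s+", " ", no_tags).strip()
    return collapsed.lower()

def clean_dataset(records):
    """Clean each record, dropping empty ones and exact duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        text = clean_text(record)
        if text and text not in seen:
            seen.add(text)
            cleaned.append(text)
    return cleaned

raw_records = ["<p>Hello   World</p>", "hello world", "   ", "Another line"]
print(clean_dataset(raw_records))  # prints ['hello world', 'another line']
```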

Another issue that developers face is finding suitable algorithms to model language effectively. There are several algorithms available, but not all of them work well for all tasks. For example, some algorithms may be better suited for machine translation while others may be more ideal for sentiment analysis tasks. Choosing the right approach requires extensive experimentation and research.

Additionally, one major challenge developers face is context understanding. NLP involves understanding context, which means taking into account surrounding words to understand a particular word’s meaning fully. Because words can have multiple meanings, context helps disambiguate them in specific uses. However, this task can be challenging as context comprehension relies heavily on having large amounts of relevant contextual knowledge.

To better understand the challenge of context comprehension, consider learning a new language yourself. By exploring another culture and interacting with native speakers directly, you gradually build your contextual comprehension skills over time. Similarly, training a model on significant volumes of varied source text helps it develop the contextual knowledge needed for successful language modeling.

Now that we’ve discussed some of the challenges associated with developing language models, let us examine one common problem by delving deeper into context and long-term dependencies.

Context and Long-Term Dependencies

To truly comprehend language, a language model must be able to capture the context of each word in a sentence. Many image and audio processing techniques operate on fixed-length input data, but text is different: sentence lengths vary. Language models therefore need to understand and accommodate inputs of different lengths.

Furthermore, the meaning of a word can depend on words that appeared much earlier in the text; these relationships are known as ‘long-term dependencies.’ For example, a pronoun such as “it” can refer to various things depending on what was mentioned earlier in the sentence. Language models need to account for these long-term dependencies to understand the semantics of a text, while also keeping track of the order of the words.

To illustrate, consider an AI system designed to generate automated product descriptions for e-commerce platforms. The AI would require an understanding of semantic information within each description type. This includes taking contextual clues such as color, texture, size, materials, and brand names into account while keeping long-term dependencies between different products within the same brand’s descriptions.

Accounting for long-term dependencies is an issue researchers still face when developing language models. One common solution is to use recurrent neural networks (RNNs), which maintain information about past inputs while processing new ones. However, RNNs process a sequence one word at a time, with each step depending on the result of the previous one, which makes them slow and computationally expensive on lengthy documents or sentences.
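To make the step-by-step nature of an RNN concrete, here is a minimal sketch of a recurrent update in plain Python. Everything in it is an assumption for illustration: the weights in `W_IN` and `W_HH` are fixed by hand rather than learned, the hidden state has only two values, and each input is a single number standing in for a word.

```python
import math

# Hypothetical fixed weights for illustration; a real RNN learns these in training.
W_IN = [0.5, -0.3]      # input -> hidden
W_HH = [[0.8, 0.1],     # hidden -> hidden
        [0.0, 0.9]]

def rnn_step(hidden, x):
    """One recurrent update: new_hidden = tanh(W_HH @ hidden + W_IN * x)."""
    size = len(hidden)
    return [
        math.tanh(sum(W_HH[i][j] * hidden[j] for j in range(size)) + W_IN[i] * x)
        for i in range(size)
    ]

def run_rnn(inputs):
    """Process inputs one at a time, carrying the hidden state forward."""
    hidden = [0.0, 0.0]
    for x in inputs:  # sequential: step t cannot start before step t-1 finishes
        hidden = rnn_step(hidden, x)
    return hidden

# The first input still influences the final state two steps later.
print(run_rnn([1.0, 0.0, 0.0]))
print(run_rnn([0.0, 0.0, 0.0]))
```

The loop in `run_rnn` is where the cost comes from: each step needs the hidden state produced by the step before it, so a long document means a long chain of updates that cannot be run side by side.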

It is useful to note how the human brain handles interwoven dependencies between phrases that sit far apart in a document, or even across an entire article. Similarly, an AI model should process large amounts of sequential data efficiently. On-Page’s Stealth Writer is currently one of the best content writing tools doing this.