Unlocking the Power of Natural Language Processing: What You Need to Know

Imagine walking into a library with millions upon millions of books, each containing unique and valuable information. As you roam the halls, daunted by the sheer volume of literature and unsure where to begin, a powerful guiding force steps in to save the day. This force sifts through countless pages at breakneck speed, surfacing only the precise knowledge you seek. In today’s world, this force is known as Natural Language Processing (NLP), the key ingredient behind innovative tools that can transform your SEO efforts and unlock untapped potential for your business. Dive in to discover how NLP can be your guide in the vast digital library we call the internet, and which tools and tactics can help you rise above the competition.

NLP is a subfield of artificial intelligence that enables computers to understand, interpret, and generate human language. NLP allows software applications to analyze and process unstructured data, such as text and speech, and convert it into structured data that can be used by other programs. Applications of NLP include chatbots, virtual assistants, sentiment analysis, document summarization, language translation, and more.

What is Natural Language Processing?

Natural language processing, or NLP, is the technology used to get machines to understand, interpret, and manipulate human language. It’s one of the most exciting fields in artificial intelligence today because it opens up a range of possibilities for how we interact with computers.

Imagine being able to talk to your computer as if it were another person who understood everything you said. NLP is what brings that closer to reality. From chatbots to voice-based assistants like Siri or Alexa, NLP has been integrated into many modern technologies that we use every day.

One familiar example of NLP in action is Google Translate, which can translate entire sentences from one language to another with reasonable accuracy. Since 2016 it has relied on neural machine translation, which learns how sentences in one language map to sentences in another from large collections of translated text, rather than applying hand-written grammar rules word by word.

In short, NLP allows computers to analyze and make sense of human language in ways that approximate how humans do. It enables them to read text documents, listen to spoken language, recognize what people are saying, and respond appropriately.

For instance, consider a case where someone types out a query on a search engine like Google. NLP takes this query as input and extracts essential information from it while disregarding irrelevant data. This leads to more relevant search results that match the user’s intent.
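A toy way to picture “extracting essential information” from a query is to drop common function words and keep the content words. The sketch below assumes a tiny hand-picked stopword list and a hypothetical `extract_keywords` helper. Real search engines model intent with far richer, typically learned, representations, so treat this only as an illustration of the idea.

```python
import re

# Assumption: a tiny, hand-picked stopword list (real lists are much longer).
STOPWORDS = {"a", "an", "the", "is", "are", "what", "where", "how", "to",
             "for", "in", "on", "of", "i", "do", "can", "me"}


def extract_keywords(query: str) -> list[str]:
    """Lowercase the query, split it into word tokens, and drop stopwords."""
    words = re.findall(r"[a-z0-9']+", query.lower())
    return [w for w in words if w not in STOPWORDS]


print(extract_keywords("What is the best pizza place in Chicago?"))
# ['best', 'pizza', 'place', 'chicago']
```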

NLP applies various techniques, such as tokenization, stemming, part-of-speech tagging, sentiment analysis, named entity recognition (NER), coreference resolution, and topic modeling, to gain insight into the properties and structure of human language.

For businesses, employing NLP can help gather valuable customer feedback through sentiment analysis tools that automatically detect positive or negative tones in customer reviews or social media posts about their products or services. Other industries like healthcare use NLP algorithms for clinical documentation improvement or extracting disease-specific data from patient records.

Some critics argue that NLP has a long way to go before it can truly understand the nuances of human language. For instance, a system might misread slang, or stumble when a speaker mixes multiple languages in the same sentence, a practice known as code-switching.

However, the accuracy of NLP algorithms is constantly improving, thanks to advancements in deep learning and machine learning.

Combining Linguistics and AI

Combining linguistics with AI has brought major breakthroughs in NLP over the years. Early systems relied largely on hand-written rules, such as if-then statements. These rules are rigid, however, and struggle with the variety found in unstructured text.

AI-enabled methods instead use machine learning algorithms that learn patterns of language use automatically from large amounts of text. They learn either from labeled examples (supervised learning) or from raw, unlabeled text (unsupervised or self-supervised learning), rather than from hard-coded rules.
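To make the contrast with hard-coded rules concrete, here is a minimal sketch of supervised learning: a multinomial naive Bayes text classifier with add-one smoothing, written from scratch. The four training sentences and their `pos`/`neg` labels are made up for illustration, and a real system would need far more data.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)      # documents per label
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.label_counts.items():
            # log P(label) + sum over words of log P(word | label), smoothed
            score = math.log(doc_count / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


train_texts = [
    "I love this phone, the screen is great",
    "Fantastic battery and great camera",
    "Terrible service, I want a refund",
    "The screen broke and support was awful",
]
train_labels = ["pos", "pos", "neg", "neg"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("great camera, love it"))         # pos
print(model.predict("awful support, refund please"))  # neg
```

No rule mentions “camera” or “refund”. The classifier picks up those associations from the labeled examples, which is the shift this section describes.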

AI has made NLP tasks like speech recognition, NER, and sentiment analysis more accurate than ever before. This is largely because machine learning models can extract patterns from far larger data sets than people could ever encode by hand as rules.

One area where combining linguistics with AI has been transformative is chatbots and voice assistants. Voice assistants rely heavily on sophisticated speech recognition to understand what users say, and on speech synthesis to reply. Machine learning models trained on specialized hardware such as GPUs or TPUs have pushed the accuracy of these systems high enough for everyday use, with responses delivered within seconds.

Another factor behind this combination’s success is collaboration across fields such as computer science, mathematics, and engineering. That collaboration continually produces new insights that drive innovation throughout the industry.

To see how linguistics and AI work together in practice, imagine a patient visiting a hospital in a country where they do not speak the local language. Instead of relying on an outdated translation system or waiting for a professional interpreter, the hospital can use NLP-powered translation to help bridge the communication gap.

Core NLP Techniques

NLP is a complex and multifaceted field that combines linguistics, computer science, mathematics, and artificial intelligence. At the core of NLP are several techniques that enable computers to analyze and understand human language in its natural form. Understanding these techniques is critical to grasping the power and potential of NLP.

One of the most important core techniques in NLP is named entity recognition (NER). NER involves identifying and categorizing named entities such as people, places, organizations, dates, and times in unstructured text. A good real-world example is helping doctors analyze medical records. By using NER to automatically extract information about symptoms, diagnoses, treatments, medications, and other relevant data points, medical professionals can make better-informed decisions faster.
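Production NER systems use statistical or neural sequence models. The core idea of tagging spans of text with entity types can still be sketched with a simple gazetteer (a lookup list of known names) plus a date pattern. The gazetteer entries, labels, and sample note below are hypothetical, and a pure dictionary lookup like this cannot recognize entities it has never seen.

```python
import re

# Hypothetical gazetteer: known phrases (lowercase) mapped to entity types.
GAZETTEER = {
    "mass general": "ORGANIZATION",
    "boston": "LOCATION",
    "type 2 diabetes": "CONDITION",
    "metformin": "MEDICATION",
}

# ISO-style dates such as 2023-05-14.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def find_entities(text):
    """Return (surface text, label, start offset) tuples, sorted by position."""
    lowered = text.lower()
    entities = []
    for phrase, label in GAZETTEER.items():
        # Word boundaries stop "boston" from matching inside "bostonian".
        for match in re.finditer(r"\b" + re.escape(phrase) + r"\b", lowered):
            entities.append((text[match.start():match.end()], label, match.start()))
    for match in DATE_PATTERN.finditer(text):
        entities.append((match.group(), "DATE", match.start()))
    return sorted(entities, key=lambda entity: entity[2])


note = "Seen at Mass General in Boston on 2023-05-14 for type 2 diabetes; started metformin."
for entity in find_entities(note):
    print(entity)
```

The loop prints the organization, location, date, condition, and medication in the order they appear in the note.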

Two other fundamental techniques in NLP are stemming and lemmatization. Stemming reduces each word to a root form by chopping off suffixes according to fixed rules, so the result is not always a real word. Lemmatization instead maps each inflected form to its dictionary form (its lemma), using the word’s part of speech (POS) to choose correctly. For instance, a stemmer might reduce “studies” to “studi”, whereas a lemmatizer returns “study”. Given that “better” is an adjective, a lemmatizer can even map it to “good”. These techniques are useful for building search engines because they help identify relevant documents by recognizing different inflections of a word and grouping them under a single term.
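The difference can be sketched with two deliberately small functions. `stem` is a crude suffix stripper loosely inspired by rule-based stemmers such as Porter’s, but it is not the Porter algorithm. `lemmatize` looks words up in a hypothetical miniature lexicon keyed by word and part of speech. Real lemmatizers combine full dictionaries with morphological rules.

```python
# Ordered suffix rules (suffix, replacement); longer suffixes are tried first.
SUFFIX_RULES = [("ies", "i"), ("ing", ""), ("ed", ""), ("es", ""), ("s", "")]


def stem(word):
    """Strip the first matching suffix, keeping a stem of at least three characters."""
    for suffix, replacement in SUFFIX_RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)] + replacement
    return word


# Hypothetical miniature lexicon keyed by (word, part of speech).
LEMMA_TABLE = {
    ("studies", "NOUN"): "study",
    ("studies", "VERB"): "study",
    ("running", "VERB"): "run",
    ("ran", "VERB"): "run",
    ("better", "ADJ"): "good",
}


def lemmatize(word, pos):
    """Look up the dictionary form; fall back to the word itself."""
    return LEMMA_TABLE.get((word, pos), word)


for word, pos in [("studies", "NOUN"), ("running", "VERB"), ("ran", "VERB"), ("better", "ADJ")]:
    print(f"{word:8} stem={stem(word):7} lemma={lemmatize(word, pos)}")
```

The stemmer produces non-words like “studi” and “runn” and leaves the irregular “ran” and “better” untouched. The part-of-speech-aware lookup recovers “study”, “run”, and “good”.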

Another common technique in NLP is sentiment analysis, which involves determining whether a given text expresses positive or negative opinions about a particular topic or entity. However, sentiment analysis is far from perfect. It depends on the quality of training data as well as on differences in cultural context and expression style. For instance, sarcasm and irony can reverse the intended meaning of a text even when its literal wording appears positive or negative.
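A lexicon-based scorer is one of the simplest approaches to sentiment analysis, and it also exposes the weaknesses described above. In this sketch the word polarities in `LEXICON` are hypothetical. Negation is handled only by flipping the polarity of the word immediately after “not”, “no”, or “never”.

```python
import re

# Hypothetical polarity lexicon; real lexicons score thousands of words.
LEXICON = {"great": 1, "love": 1, "excellent": 1, "good": 1,
           "bad": -1, "terrible": -1, "awful": -1, "broken": -1}
NEGATIONS = {"not", "no", "never"}


def sentiment(text):
    """Sum word polarities, flipping the sign of the word right after a negation."""
    score = 0
    negate = False
    for word in re.findall(r"[a-z']+", text.lower()):
        if word in NEGATIONS:
            negate = True
            continue
        polarity = LEXICON.get(word, 0)
        score += -polarity if negate else polarity
        negate = False  # negation only affects the next word
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this camera"))              # positive
print(sentiment("The battery is not good"))         # negative
print(sentiment("Great job breaking my computer!")) # positive (sarcasm missed)
```

The last example is scored as positive because nothing in the individual words signals sarcasm.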

Think of core NLP techniques like ingredients in a recipe. Each technique represents a different element that adds to the final dish. Just as you can’t make a cake with only flour or sugar, you can’t achieve a true understanding of natural language without leveraging each key NLP technique together.

Understanding these core NLP techniques lays the foundation for implementing more advanced applications and uses of natural language processing. One of the most important aspects of NLP is its ability to analyze individual words and phrases within a given text block. This is where tokenization and POS tagging come in.

Tokenization and POS Tagging

Tokenization involves dividing a given text into smaller units or tokens such as sentences, words, or phrases. These tokens allow machines to analyze the meaning and context of text data by breaking it down into manageable chunks. POS tagging, on the other hand, involves assigning each token a specific part-of-speech tag based on its grammatical function within a sentence.
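A rough sketch of both levels of tokenization, sentences and words, can be built with regular expressions. The patterns below are assumptions chosen for tidy English text. The sentence splitter breaks after `.`, `!`, or `?` followed by whitespace, so it would wrongly split after abbreviations like “Dr.”. The word tokenizer keeps contractions such as `isn't` together while separating punctuation.

```python
import re


def sentence_tokenize(text):
    """Split after ., ! or ? when followed by whitespace (naive about abbreviations)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def word_tokenize(sentence):
    """Split into words (keeping internal apostrophes) and single punctuation marks."""
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", sentence)


text = "NLP isn't magic. It splits text into tokens!"
for sentence in sentence_tokenize(text):
    print(word_tokenize(sentence))
# ['NLP', "isn't", 'magic', '.']
# ['It', 'splits', 'text', 'into', 'tokens', '!']
```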

The power of tokenization and POS tagging lies in laying the groundwork for machines to understand language at the semantic level. For instance, when analyzing written content, machine learning algorithms need to distinguish proper nouns from common nouns, verbs from adjectives, singular forms from plural ones, and so on. Without the ability to do this kind of analysis automatically, we’d still be limited to manual web searches rather than personalized digital assistants that can answer our questions efficiently.
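Real POS taggers are statistical or neural models trained on annotated corpora, but a rule-based toy makes the idea tangible. This sketch tags a pre-tokenized sentence using three kinds of evidence:

- a small hypothetical lexicon of function words
- capitalization (a capitalized word mid-sentence is guessed to be a proper noun)
- suffix heuristics

The tag names come from the Universal POS tag set. Unknown words default to `NOUN`, which is exactly the kind of guess a trained model improves on.

```python
# Hypothetical closed-class lexicon; tags follow the Universal POS tag set.
LEXICON = {"the": "DET", "a": "DET", "an": "DET", "in": "ADP", "on": "ADP",
           "of": "ADP", "is": "AUX", "was": "AUX", "and": "CCONJ"}


def guess_tag(token, is_first):
    """Tag one token using the lexicon, then capitalization and suffix heuristics."""
    lower = token.lower()
    if lower in LEXICON:
        return LEXICON[lower]
    if not token[0].isalnum():
        return "PUNCT"
    if token[0].isupper() and not is_first:
        return "PROPN"  # capitalized mid-sentence: likely a proper noun
    if lower.endswith("ly"):
        return "ADV"
    if lower.endswith(("ed", "ing")):
        return "VERB"
    if lower.endswith(("ous", "ful", "ive", "able")):
        return "ADJ"
    return "NOUN"  # default guess for open-class words


def pos_tag(tokens):
    """Return (token, tag) pairs for a tokenized sentence."""
    return [(tok, guess_tag(tok, i == 0)) for i, tok in enumerate(tokens)]


print(pos_tag(["The", "curious", "dogs", "quickly", "visited", "Paris", "."]))
# [('The', 'DET'), ('curious', 'ADJ'), ('dogs', 'NOUN'), ('quickly', 'ADV'),
#  ('visited', 'VERB'), ('Paris', 'PROPN'), ('.', 'PUNCT')]
```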

To put it another way, imagine reading an entire page of text with no spaces between words or punctuation marks whatsoever. It would be nearly impossible to discern where one word ends and another begins without some sort of contextual clues like capitalization rules or syntax cues. Tokenization essentially adds these visual cues artificially so that machines have a better idea of which clusters of letters should be treated as discrete units of meaning.
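For text written without spaces, one classic baseline is greedy “maximum matching”: at each position, take the longest dictionary word that fits. The sketch below uses a tiny made-up vocabulary, and its output shows the weakness of pure greediness.

```python
def max_match(text, dictionary, max_len=20):
    """Greedy longest-match segmentation of text that has no spaces."""
    words = []
    i = 0
    while i < len(text):
        # Try the longest candidate first; fall back to a single character.
        for end in range(min(len(text), i + max_len), i, -1):
            if text[i:end] in dictionary or end == i + 1:
                words.append(text[i:end])
                i = end
                break
    return words


vocab = {"the", "cat", "sat", "on", "mat", "them", "at"}
print(max_match("thecatsatonthemat", vocab))
# ['the', 'cat', 'sat', 'on', 'them', 'at']
```

Greedy matching grabs “them” and leaves “at”, instead of the intended “the mat”. Practical segmenters score alternative splits, for example with statistical language models, rather than always committing to the longest match.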

Of course, tokenization isn’t perfect. In languages where words are often compounded together into longer strings, such as German or Finnish, tokenization can be more difficult and require more advanced techniques to get right. Furthermore, sometimes the meanings of specific words or phrases can only be inferred from their context rather than their POS tag or lexical category. In these cases, machines have to rely more heavily on contextual clues such as co-occurring keywords, adjacent sentences or paragraphs, or even general knowledge about the subject matter at hand.

Tokenization and POS tagging might be thought of as taking apart a puzzle and organizing all the pieces into separate groups based on their colors, shapes, and patterns. Just as it’s easier to see how the individual puzzle pieces fit together when grouped by color or shape, categorizing tokens by part-of-speech tags allows machine learning algorithms to better understand the semantic connections between individual words in a sentence. Without this level of analysis, computers would struggle to decipher natural language in a way that is both accurate and useful.

Armed with an understanding of these key NLP techniques, we’re better equipped to unlock the full power of language processing algorithms for real-world applications and use cases. In the following sections, we’ll look at how machines analyze sentence structure and meaning, and then highlight some real-world applications and their potential impact on our daily lives.

Syntax and Semantic Analysis

In natural language processing, syntax and semantic analysis are two core techniques used to understand the meaning and structure of human language. While both techniques are essential for effective NLP systems, they differ in their focus and application.

Syntax analysis focuses on how words are arranged in a sentence according to grammatical rules. It involves identifying the different parts of speech (such as nouns, verbs, and adjectives) within a sentence and analyzing how they relate to one another. This type of analysis is critical for understanding the structure of a sentence, including its subject, verb, object, and modifiers.

Semantic analysis focuses on the meaning behind words in a sentence. It involves analyzing the context surrounding each word to determine its intended meaning. This type of analysis is critical for understanding the overall meaning of a sentence, including its intent, tone, and mood.

To illustrate the difference between syntax and semantic analysis, consider these two sentences:

- “The dog ate the bone.”

- “The bone ate the dog.”

Both sentences have correct syntax (arrangement of words), but only one has correct semantics (meaning). In this case, it’s clear that the first sentence has correct semantics because it describes a common scenario where a dog eats a bone. The second sentence, on the other hand, doesn’t make any sense semantically because it describes an impossible scenario where a bone eats a dog.
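One classic way to encode this kind of semantic check is with selectional restrictions, where a verb states what kind of subject it accepts. For instance, “ate” needs an animate subject. The sketch below first performs a naive syntactic parse that only understands the pattern “The <noun> <verb> the <noun>.”, then checks the restriction. The feature tables are hypothetical and tiny.

```python
# Hypothetical semantic features for a few nouns.
NOUN_FEATURES = {"dog": {"animate"}, "cat": {"animate"}, "bone": set(), "rock": set()}

# Hypothetical selectional restrictions: features a verb requires of its subject.
VERB_SUBJECT_REQUIRES = {"ate": {"animate"}, "slept": {"animate"}}


def parse_svo(sentence):
    """Naive syntactic parse for sentences shaped like 'The <noun> <verb> the <noun>.'"""
    words = sentence.lower().strip(".").split()
    if len(words) != 5 or words[0] != "the" or words[3] != "the":
        raise ValueError("expected the pattern 'The <noun> <verb> the <noun>.'")
    return words[1], words[2], words[4]  # subject, verb, object


def is_semantically_plausible(sentence):
    """Check that the subject has every feature the verb requires."""
    subject, verb, _obj = parse_svo(sentence)
    required = VERB_SUBJECT_REQUIRES.get(verb, set())
    return required <= NOUN_FEATURES.get(subject, set())


print(is_semantically_plausible("The dog ate the bone."))  # True
print(is_semantically_plausible("The bone ate the dog."))  # False
```

Both sentences pass the syntactic step, and only the semantic check tells them apart.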

In an NLP system designed for sentiment analysis (determining whether text expresses positive or negative sentiment), syntax analysis can be used to identify key phrases or sentences that express an opinion. Semantic analysis can then be used to determine whether those opinions are positive or negative based on related words and phrases within the same context.

For example, if someone says “The new iPhone is great!”, an NLP system can use syntax analysis to identify that “the new iPhone” is the subject of the sentence and “is great” is the predicate. Semantic analysis can then determine that “great” is a positive word, and therefore the sentence expresses a positive sentiment towards the iPhone.
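Here is a toy version of that two-step process. First, it splits a simple “X is Y” sentence at the linking verb to separate subject and predicate, a very rough stand-in for syntax analysis. Then it scores the predicate against a small word list, a stand-in for semantic analysis. The word lists and the `opinion` helper are hypothetical. Real systems use full parsers and learned sentiment models.

```python
import re

# Hypothetical word lists standing in for a sentiment lexicon.
POSITIVE = {"great", "excellent", "fast"}
NEGATIVE = {"slow", "awful", "overpriced"}
COPULAS = {"is", "are", "was", "were"}


def opinion(sentence):
    """Split a simple 'X is Y' sentence at the copula and score the predicate."""
    words = re.findall(r"[A-Za-z']+", sentence)
    for i, word in enumerate(words):
        if word.lower() in COPULAS:
            subject = " ".join(words[:i])
            predicate = words[i + 1:]
            # Each positive word adds 1 and each negative word subtracts 1.
            score = sum((w.lower() in POSITIVE) - (w.lower() in NEGATIVE) for w in predicate)
            label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
            return subject, " ".join(words[i:]), label
    return None  # no copula found: this toy pattern does not apply


print(opinion("The new iPhone is great!"))
# ('The new iPhone', 'is great', 'positive')
```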

One debate in NLP is whether syntax or semantics is more important for understanding human language. Some argue that syntax is more important because it provides the fundamental structure for any sentence, while others argue that semantics is more important because it determines the overall meaning of a sentence.

In reality, both are equally important and necessary for effective NLP systems. Without proper syntax analysis, it’s impossible to understand the structure of a sentence and therefore difficult to determine its intended meaning. Similarly, without semantic analysis, it’s impossible to determine the overall meaning of a sentence, including its intent, tone, and mood.

Real-World Applications of NLP

NLP has a wide range of applications in various industries today. From virtual assistants and chatbots to predictive text input and automated summarization, NLP technology continues to evolve and expand its reach.

Virtual assistants and chatbots are some of the most popular applications of NLP technology today. These intelligent systems use natural language processing techniques such as speech recognition, named entity recognition, sentiment analysis, and text-to-speech conversion to interact with users in real time. They can be used for customer service inquiries and personal shopping recommendations, or even to help people with medical conditions better manage their health.

Another application of NLP technology is predictive text input. This feature uses algorithms to suggest words or phrases as a user types, making it faster and easier to write messages or emails on mobile devices. The suggestions are based on context, such as the words typed so far.
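The simplest form of context-based suggestion is a bigram model. It counts which words follow which in past text, then proposes the most frequent followers of the word just typed. The four-sentence corpus below is made up. Production keyboards use much larger, typically neural, language models.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows each other word."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            following[prev][nxt] += 1
    return following


def suggest(following, prev_word, k=3):
    """Return up to k most frequent next words after prev_word."""
    return [w for w, _ in following.get(prev_word.lower(), Counter()).most_common(k)]


corpus = [
    "see you tomorrow",
    "see you soon",
    "see you tomorrow morning",
    "thank you so much",
]
model = train_bigrams(corpus)
print(suggest(model, "you"))  # ['tomorrow', 'soon', 'so']
```

Ties come back in the order the words were first seen, because `Counter.most_common` keeps insertion order for equal counts.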

Automated summarization is another application of NLP technology that can help save time by condensing large documents or articles into short summaries. This is ideal for keeping up with news and developments in a particular industry when you’re limited on time.
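One classic approach is extractive summarization, which selects existing sentences rather than writing new ones. This sketch scores each sentence by the average frequency of its content words across the whole text, then keeps the top scorers in their original order. The stopword list and sample text are hypothetical. Modern summarizers are usually neural models that can also paraphrase.

```python
import re
from collections import Counter

# Assumption: a tiny stopword list for illustration.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "for", "on", "that", "with"}


def content_words(text):
    """Lowercased word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, n_sentences=2):
    """Pick the n sentences whose content words are most frequent on average."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    top = sorted(sentences, key=score, reverse=True)[:n_sentences]
    # Keep the chosen sentences in their original order for readability.
    return " ".join(s for s in sentences if s in top)


article = ("Chatbots answer customer questions. Chatbots reduce wait times for customer support. "
           "The weather was sunny. Customer support teams use chatbots for routine questions.")
print(summarize(article))
# Chatbots answer customer questions. Customer support teams use chatbots for routine questions.
```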

In the field of healthcare, NLP technology has numerous applications. It can extract key information from electronic health records to support diagnosis and treatment. For instance, for a patient with diabetes mellitus, an NLP system can pull out the patient’s blood sugar levels, medications, and relevant test results to give clinicians a comprehensive picture of the patient’s health.
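For notes that follow a predictable format, even regular expressions can pull out facts like these. The note text, its format, and the patterns below are all assumptions for illustration. For instance, every mg/dL value followed by a date is treated as a glucose reading. Real clinical NLP relies on trained models and standard medical vocabularies to cope with far messier text.

```python
import re

# Hypothetical note; real clinical text is far less tidy.
NOTE = """Pt with diabetes mellitus type 2.
Fasting glucose 142 mg/dL on 2024-01-10, 128 mg/dL on 2024-02-11.
HbA1c 7.4%. Meds: metformin 500 mg BID, lisinopril 10 mg daily."""

# Assumption: any "<number> mg/dL on <date>" is a blood glucose reading.
GLUCOSE_READING = re.compile(r"(\d+)\s*mg/dL\s+on\s+(\d{4}-\d{2}-\d{2})")
HBA1C = re.compile(r"HbA1c\s+(\d+(?:\.\d+)?)%")
# A lowercase drug name followed by a dose in mg (but not an mg/dL lab value).
MEDICATION = re.compile(r"\b([a-z]+)\s+(\d+)\s*mg(?!/)")


def extract_facts(text):
    """Collect glucose readings, HbA1c values, and medication doses from a note."""
    return {
        "glucose_mg_dl": [(int(value), date) for value, date in GLUCOSE_READING.findall(text)],
        "hba1c_percent": [float(value) for value in HBA1C.findall(text)],
        "medications": [(name, int(dose)) for name, dose in MEDICATION.findall(text)],
    }


print(extract_facts(NOTE))
```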

In financial services, NLP technology can be used to analyze consumer sentiment towards a particular company or product by analyzing social media posts, customer reviews, or comments. This helps companies understand their customers better, make more informed business decisions and improve their products and services further.

The impact of NLP on our daily lives can be compared to that of GPS technology; both have revolutionized how we navigate the world around us. With GPS, we can easily find directions no matter where we are, while NLP technology helps us communicate more effectively through natural language with virtual assistants and chatbots.

Some people argue that NLP shouldn’t be relied upon too heavily because it lacks the nuance of human conversation. But NLP technology continues to improve rapidly through advanced machine learning techniques which use feedback mechanisms that allow systems to learn from their mistakes.

The benefits of NLP continue to evolve rapidly—you just need to look at how quickly voice-activated assistants have developed over the past few years. As new developments emerge in AI and machine learning technology, it’s likely that we will see even more advanced applications of NLP in the future.

Virtual Assistants and Chatbots

Virtual assistants and chatbots are some of the most useful applications of NLP. They have completely changed the way people interact with technology, and many businesses are now implementing them in their customer service departments.

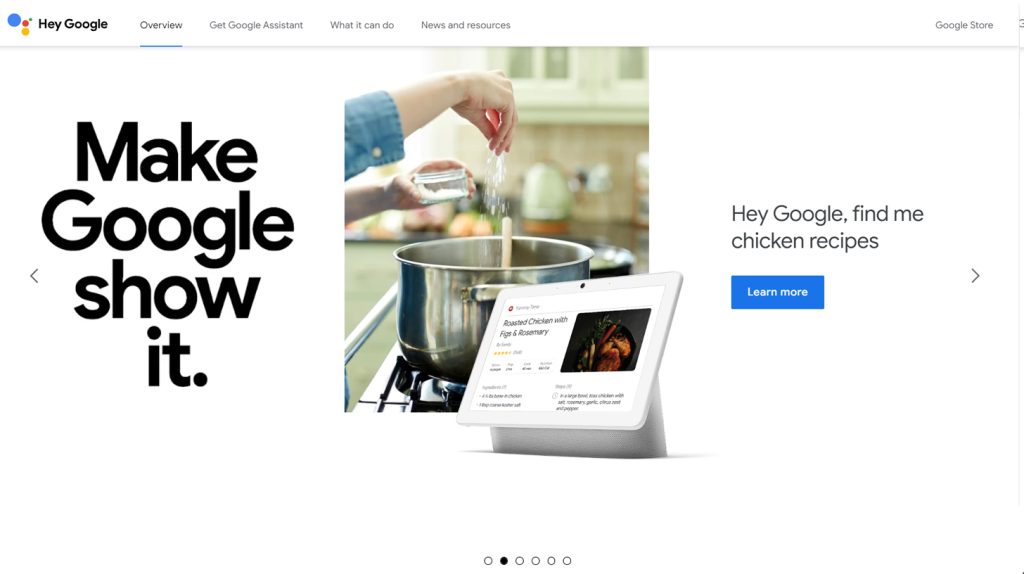

An example of a very successful virtual assistant is Apple’s Siri, which is integrated into all iOS devices. Siri can understand spoken language and carry out various commands such as setting reminders, making calls, and sending texts, among others. Similarly, Amazon’s Alexa and Google Assistant have become very popular personal assistants for making tasks easier around the home.

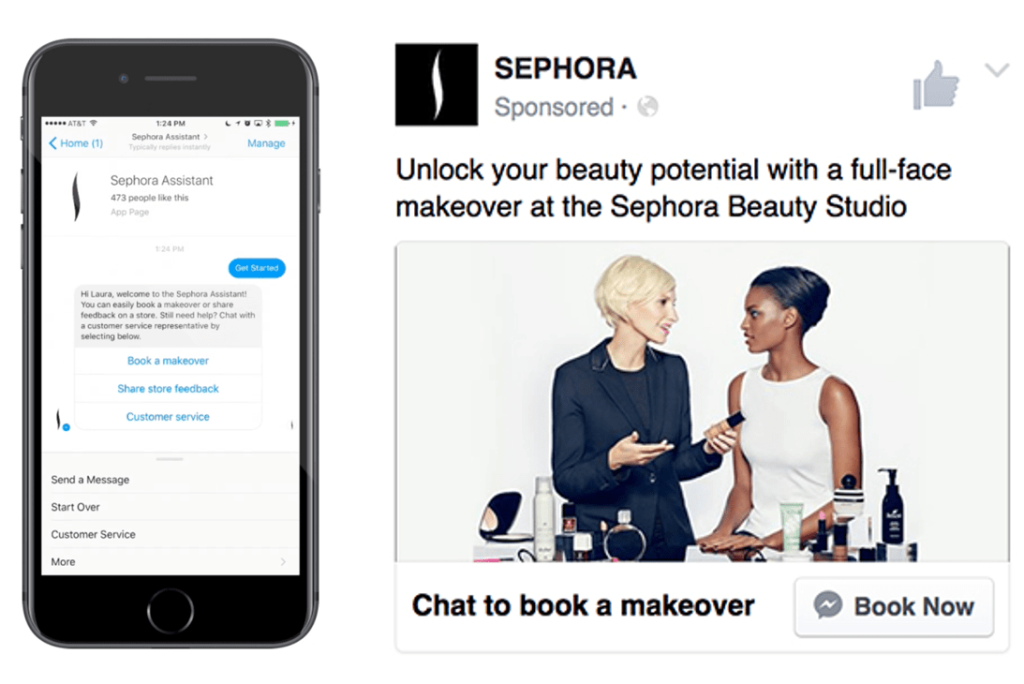

Chatbots, on the other hand, are virtual assistants that use textual conversation to provide answers to users’ questions. Chatbots have revolutionized customer service by providing quick responses to clients’ queries, streamlining communication processes, and improving customer engagement.

Many e-commerce sites have now implemented chatbots as an essential component of their customer support systems. For instance, Sephora has developed Sephora Assistant, which acts as a personal shopping assistant for customers who need help purchasing cosmetics. It helps shoppers find products that suit their skin type or complexion and offers other recommendations based on the user’s preference history.

A widely cited Gartner prediction held that by 2020, chatbots would be handling over 85% of customer interactions. There are several reasons why chatbots have such huge potential:

Firstly, chatbots are always available – they don’t need to take breaks or holidays like human support agents do.

Secondly, they save time – since they can handle multiple queries at once without tiring or getting overwhelmed.

Thirdly, chatbots offer an affordable solution for businesses looking to lower operational costs. By automating routine queries, companies can offer service around the clock at a lower cost per interaction, with relatively modest investment.

However, critics argue that adopting chatbots can reduce the number of human customer service jobs. Some people feel that as more businesses start using chatbots and other forms of artificial intelligence, the human touch in customer service will disappear.

On the other hand, it’s essential to think about how chatbots can assist human workers rather than replace them. ATMs offer a good illustration. Customers use ATMs to take care of small transactions without speaking to a teller, and companies can likewise use chatbots for routine customer interactions such as fulfilling orders or answering frequently asked questions (FAQs). This frees up human workers to focus on more complicated queries and complex needs.
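Here is a minimal sketch of that division of labor. The bot answers a question when it shares keywords with a known FAQ entry and hands off to a human otherwise. The FAQ entries, keywords, and canned answers are hypothetical placeholders. Keyword overlap is also a much cruder matcher than the NLP models commercial chatbots use.

```python
import re

# Hypothetical FAQ entries: (keywords, canned answer).
FAQS = [
    ({"return", "refund", "exchange"}, "You can return items within 30 days for a full refund."),
    ({"ship", "shipping", "delivery", "track"}, "Orders usually ship within 2 business days."),
    ({"hours", "open", "close"}, "Our support team is available 9am-5pm, Monday to Friday."),
]
HANDOFF = "Let me connect you with a human agent."


def answer(question, min_overlap=1):
    """Return the FAQ answer with the most keyword overlap, or hand off to a human."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    best_answer, best_overlap = None, 0
    for keywords, reply in FAQS:
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_answer, best_overlap = reply, overlap
    if best_overlap < min_overlap:
        return HANDOFF  # no routine match: escalate to a person
    return best_answer


print(answer("How do I track my delivery?"))
print(answer("My order arrived damaged and I am upset"))
```

The first question matches the shipping entry. The second shares no keywords with any entry, so it is routed to a person.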

- The global natural language processing market size was valued at $50.9 billion in 2021 and is projected to grow from $64.94 billion in 2022 to $357.7 billion in 2030, a compound annual growth rate (CAGR) of 27.6% during the forecast period (Market Research Future).

- A comprehensive study carried out in 2019 found that the top three application areas for NLP were sentiment analysis (23.5%), text classification (22.7%), and machine translation (21%).

- Google’s BERT model, an NLP pre-training technique based on the Transformer architecture, achieved state-of-the-art performance on various NLP tasks such as question answering and natural language inference when it was introduced in 2018. Its results included an F1 score of 93.2 on the SQuAD v1.1 dataset.
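Growth figures like those in the first bullet can be sanity-checked with the standard compound annual growth rate formula, (end / start)^(1 / years) − 1. The sketch below takes the quoted market figures at face value. Compounding the 2021 value over eight years reproduces the stated 27.6%. Compounding the 2022 value over the eight years to 2030 gives about 23.8%. So it is worth checking which base year a forecast’s CAGR is measured from.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in billions of US dollars, as quoted in the first bullet.
print(f"{cagr(50.9, 357.7, 8):.1%}")   # 27.6% (2021 value compounded over 8 years)
print(f"{cagr(64.94, 357.7, 8):.1%}")  # 23.8% (2022 value compounded to 2030)
```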

Future Perspectives of NLP

The future of NLP looks bright, with many advancements expected in the coming years. Here are some predictions:

Voice-operated systems: With voice assistants like Siri and Alexa becoming increasingly popular, we can expect that virtual assistants powered by natural language processing will become even more prevalent in our daily lives.

Improved sentiment analysis: In recent years, sentiment analysis has improved drastically, enabling machines to classify opinions in user feedback more accurately. This could help in addressing online hate speech and bullying.

Greater applications for machine translation: Neural Machine Translation (NMT) has already revolutionized the field of machine translation. NMT has created better translations between languages – and it’s only getting better. Expect better integration in global businesses to promote effective communication between teams in different regions with different languages.

More industries adopting NLP: With investment pouring into AI-driven startups, it won’t be long before we see new NLP-powered businesses entering industries ranging from healthcare and law to fintech. Examples include chatbots that answer questions about healthcare coverage and personal finance management tools customized to demographic trends.

Greater synthesis of NLP with other AI techniques: Machine learning applications have risen substantially, and NLP will increasingly be combined with machine learning models in the same way. These combinations will power natural language generation and voice recognition systems, making accurate translation easier than ever.

As businesses continue to find new and better ways to integrate technology into their operations, it’s clear that NLP will become even more ubiquitous. It will be a critical component not only of consumer product development and business automation but also of essential sectors such as national security and healthcare.

However, along with this growth come concerns about data privacy and security. Companies must ensure that the personal data they gather from users is secure and managed appropriately. There are also fears about job losses among those employed in human customer service positions. Still, NLP can also create jobs, such as for the researchers and engineers who develop technologies like conversational AI.

To appreciate the full potential of NLP technologies, imagine a world where communication flows seamlessly between skilled professionals and their clients, regardless of geographical location or language barriers.

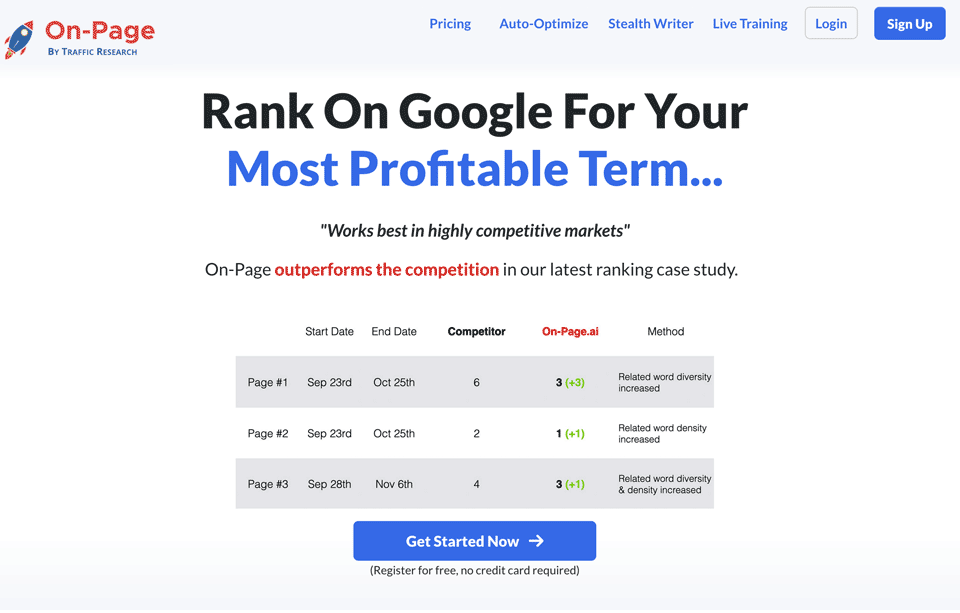

AI-powered tools like On-Page.ai should get you started in your machine learning journey. Explore On-Page.ai and its Stealth AI Writer and ReWriter features and get the most out of natural language processing technology for your business.

Frequently Asked Questions

How is natural language processing used in the field of artificial intelligence?

NLP is a crucial component of the field of AI. It allows machines to understand, interpret, and generate human language, which is essential for developing more advanced AI applications. One of the most significant uses of NLP in AI is in chatbots and voice assistants, which are becoming increasingly popular among consumers.

According to a report by Grand View Research, the global chatbot market size is expected to reach $27.3 billion by 2030. Companies across various industries are implementing chatbots to enhance customer service, reduce human error, and improve efficiency by automating routine tasks.

In addition to chatbots, NLP is also being used in sentiment analysis, machine translation, text summarization, and speech recognition. These applications have broad significance in fields such as healthcare, education, finance, and cybersecurity.

Overall, natural language processing plays a critical role in advancing the capabilities of artificial intelligence. Its ability to understand and process human language is what makes it possible for machines to interact with humans in more natural ways.

What are the potential drawbacks or limitations of natural language processing technology?

While NLP has revolutionized the way we interact with machines and online platforms, there are also several potential drawbacks and limitations to this technology.

Firstly, NLP algorithms rely heavily on the quality and quantity of their training data. If the training data set is limited or biased, the algorithm may produce inaccurate results. According to a study by IBM, up to 80% of AI systems contain bias, leading to discrimination against certain individuals or groups in decision-making processes.

Secondly, NLP struggles with understanding context and sarcasm, which can lead to misinterpretation or misunderstanding of the intended meaning of the text. For instance, a sarcastic comment such as “Great job breaking my computer!” could be interpreted as a compliment without proper context analysis.

Finally, NLP is also challenged by the vast diversity of human languages and dialects spoken worldwide. While some languages have well-developed NLP models, many others lack sufficient resources for comprehensive analysis and translation.

In conclusion, while natural language processing technology presents numerous opportunities for innovation and advancement in various industries, it’s important to acknowledge its limitations. As NLP continues to develop rapidly, addressing these challenges should remain a priority for developers and researchers.

What are some common applications of natural language processing?

NLP has become an increasingly popular area of research and application in recent years. Some common applications of NLP include language translation, sentiment analysis, chatbots, speech recognition, and text summarization.

According to a report by MarketsandMarkets, the global NLP market was projected to reach $16.07 billion by 2021, growing at a compound annual growth rate of 16.1%. This growth is fueled by increasing demand for better customer experiences and for machine-to-human interaction.

Language translation is a particularly important application of NLP, as it enables people from different regions and cultures to communicate with one another more easily. Google Translate is one example of how NLP can enable real-time translation across languages. As TechCrunch reported in 2016, it already supported over 100 languages at that time.

Sentiment analysis is another common application of NLP. With the enormous amount of online communication happening via social media platforms and other sources, businesses are able to use sentiment analysis tools to track customer feedback about their products or services. This enables them to identify areas for improvement and enhance their customer experience.

Chatbots are also becoming more sophisticated through the use of NLP. These digital assistants can help businesses automate interactions with customers, reducing costs associated with customer service teams and providing efficient support.

Overall, the applications of natural language processing are many and varied. As the technology advances, we can expect even greater innovation in this field in the years to come.

What is the difference between natural language processing and machine learning?

Natural language processing and machine learning are two closely related fields, but they serve different purposes. NLP focuses on understanding human language, while ML has a broader scope: training machines to learn from data.

NLP deals with the analysis of spoken or written human language and the communication between humans and computers in natural language. Its main aim is to help computers understand the nuances of human language including grammar, syntax, and semantics. Some of the important applications of NLP include sentiment analysis, speech recognition, and machine translation.

In contrast, machine learning involves training machines to learn from data without being explicitly programmed. It uses algorithms to automatically identify patterns and insights from data. The goal of ML is essentially to create intelligent systems that can make predictions or decisions based on experience.

While there are some overlaps between both fields, NLP mainly focuses on the distinctive features of human language, whereas ML emphasizes the development of algorithms that can intelligently process large amounts of data.

According to a study by Grand View Research, the global NLP market size is expected to reach $80.6 billion by 2027, while the machine learning market size was valued at $8.43 billion in 2020 and is projected to reach $117.19 billion by 2027.

In conclusion, both NLP and ML involve processing and analyzing data, but their focus differs. NLP is defined by its subject matter, natural language, and serves specific language applications. ML is a general approach to training computer systems on large datasets for a wide range of tasks. In practice, most modern NLP systems are built with ML techniques.

Can natural language processing be used for languages other than English?

Yes, NLP can be used for languages other than English. In fact, NLP has been developed and applied to numerous languages over the years, and demand for the technology keeps growing. According to a report by MarketsandMarkets, the global NLP market was valued at $7.48 billion in 2018 and is projected to reach $22.30 billion by 2025.

One of the primary reasons NLP can be used for other languages is the availability of language-specific data sets. Many organizations have created and shared large corpora of text in multiple languages, making it possible to develop machine learning algorithms that can analyze and interpret these texts.

For example, Google’s multilingual neural machine translation system can translate between any pair of the 103 languages it supports. Similarly, Facebook has developed a multilingual machine translation system that uses NLP techniques to support more than 100 languages.

Additionally, several academic initiatives have made significant contributions to multilingual NLP research. The European Union’s Seventh Framework Programme funded the META-NET project, which aimed to develop high-quality language resources for all European languages. Another notable initiative is the Universal Dependencies project, which provides a framework for consistently annotating parts of speech, morphological features, and syntactic dependencies across many languages.
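Universal Dependencies treebanks are distributed in the CoNLL-U format. Each token sits on its own line with ten tab-separated fields (ID, FORM, LEMMA, UPOS, XPOS, FEATS, HEAD, DEPREL, DEPS, MISC), and comment lines start with `#`. The sketch below parses a single sentence into dictionaries and prints each word’s dependency. The sample annotation is written by hand for illustration, not taken from an actual treebank. The parser also skips multiword-token ranges and empty nodes rather than handling them.

```python
FIELDS = ["id", "form", "lemma", "upos", "xpos", "feats", "head", "deprel", "deps", "misc"]

# Hand-written sample annotation (not from a real treebank).
ROWS = [
    ["1", "The", "the", "DET", "_", "Definite=Def|PronType=Art", "2", "det", "_", "_"],
    ["2", "dog", "dog", "NOUN", "_", "Number=Sing", "3", "nsubj", "_", "_"],
    ["3", "ate", "eat", "VERB", "_", "Mood=Ind|Tense=Past|VerbForm=Fin", "0", "root", "_", "_"],
    ["4", "the", "the", "DET", "_", "Definite=Def|PronType=Art", "5", "det", "_", "_"],
    ["5", "bone", "bone", "NOUN", "_", "Number=Sing", "3", "obj", "_", "SpaceAfter=No"],
    ["6", ".", ".", "PUNCT", "_", "_", "3", "punct", "_", "_"],
]
SAMPLE = "# text = The dog ate the bone.\n" + "\n".join("\t".join(row) for row in ROWS)


def parse_conllu(text):
    """Parse one CoNLL-U sentence into token dicts, skipping comments,
    multiword-token ranges (e.g. '1-2'), and empty nodes (e.g. '1.1')."""
    tokens = []
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue
        values = line.split("\t")
        if "-" in values[0] or "." in values[0]:
            continue
        token = dict(zip(FIELDS, values))
        token["id"], token["head"] = int(token["id"]), int(token["head"])
        tokens.append(token)
    return tokens


tokens = parse_conllu(SAMPLE)
for tok in tokens:
    # HEAD is a 1-based token ID; 0 marks the root of the sentence.
    head = tokens[tok["head"] - 1]["form"] if tok["head"] else "ROOT"
    print(f'{tok["form"]:5} {tok["upos"]:5} --{tok["deprel"]}--> {head}')
```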

In summary, natural language processing can certainly be used for languages other than English. With an increasing number of language-specific data sets becoming available and advancements in technology and academia, we can expect that NLP will continue to make strides in multilingual applications.