Improve Your Website’s Crawlability for Better SEO: A Step-by-Step Guide

Picture this: you’ve created an extraordinary website filled with valuable content, but your target audience can’t seem to find it. You’re puzzled and frustrated, wondering why your efforts are not reaping the desired results. The secret ingredient that you’re missing could be as simple as improving your website’s crawlability. In this step-by-step guide, we will unravel the mystery behind crawlability and how it affects your SEO performance, transforming your website into a treasure trove that search engines can easily discover and index. Get ready to embark on a journey that will skyrocket your online visibility and drive success like never before!

There are several strategies you can use to improve your website’s crawlability and enhance its SEO performance. First, ensure your site has a clear and well-structured hierarchy, so search engines can easily navigate it. Use appropriate header tags, meta descriptions, and keywords to optimize your content, but avoid keyword stuffing. Regularly update and add new content to your site, paying attention to internal linking and avoiding duplicate content. Submitting a sitemap to Google can help improve the crawling and indexing of your pages. Finally, monitor any server errors, broken links, or unsupported scripts that could affect your site’s crawlability. By following these practices, you can help boost the visibility of your website in search rankings and gain more organic traffic from interested users.

Crawling and Indexing Basics for SEO

When it comes to optimizing a website for search engines, two key concepts come into play: crawling and indexing. These two factors make up the essential foundation of SEO. By better understanding how they work, you can improve your website’s crawlability, indexability, and overall search engine rankings.

Imagine a giant library filled with millions of books. Now, imagine that Google is the librarian in charge of finding any book that someone may need. In order for Google to do this effectively, it needs to be able to ‘crawl’ through every book in the library (or in our case, web pages on the internet). Once a page is crawled and indexed, Google can add it to its database so that anyone searching for relevant information can find it quickly.

Both crawling and indexing are crucial because if your website cannot be crawled by Googlebot (Google’s automated web crawler), your pages won’t make it into the index, making it nearly impossible for them to show up in search results.

While there are other important aspects to SEO like content marketing and link building, those strategies wouldn’t be effective without first ensuring that your site is being crawled and indexed properly.

So let’s dive into each concept separately starting with crawling.

- Understanding the concepts of crawling and indexing is crucial for optimizing your website’s search engine rankings. Without proper crawlability and indexability, your website may not be visible in search results. Therefore, focusing on these two factors should be the foundation of any SEO strategy before moving on to other aspects such as content marketing and link building.

What is Crawling?

Simply put, crawling is the process by which search engines scan websites on the internet to find new or updated content.

Think of it as sending an army of robots or spiders out onto the web to crawl over every nook and cranny of your website, looking for changes since their last visit. The more easily they can do this, the sooner Google can find fresh content and index those pages.

For example, when you update a blog post or upload new content onto your website, these changes need to be crawled by search engines before they can be indexed and considered for ranking in search results.

If a website has poor crawlability, it means that the web crawler cannot access and scan the website correctly, hindering the process of indexing. As a result, new content won’t appear in search results.

Crawlability is important because if Googlebot can’t crawl your site effectively, it may skip over fresh content, which then won’t be indexed or rank well in SERPs.

Continue reading to learn more about “What is Indexing?” and how you can improve your website’s overall crawling and indexing performance.

What is Indexing?

Indexing is the second part of the crawling and indexing process by which search engines like Google analyze websites to provide users with accurate search results. Once the search engine crawls a webpage, it saves its information on its servers. During indexing, the search engine organizes all this crawled data into an index that can be quickly searched when a user inputs a query. The information is then used by the search algorithms with other ranking factors, such as content quality and relevancy, to present users with relevant results.

To understand better how indexing works, consider an analogy of a library’s archive system. When you visit a library, you see rows of books arranged systematically, organized according to their respective catalog numbers for easy access. In the same way, when search engines crawl and index your website, they save each page and record them in their database based on certain factors like titles, headers, meta descriptions, content body, and user engagement. All these factors help establish relevance and context for each set of information found on your pages.

A well-indexed website has higher chances of ranking high up in the search engine results pages (SERPs). It means that the indexed web pages are easily accessible to users searching for information or products related to what your site offers. Thus understanding how search engines index your website is crucial for creating relevant content designed to satisfy user intent and improving overall SEO efforts.

Some people think that effective SEO optimizations can be achieved by merely having plenty of backlinks aimed at their website. However, having lots of backlinks does not necessarily mean improved indexing or higher rankings – it’s the linking structure or the link architecture that counts. Linking structures help search engines understand your website hierarchy and determine which pages are more important than others.

Now that we’ve covered what indexing is, let’s focus on tips for improving your website’s crawlability and indexability.

Improving Crawlability

Improving crawlability is the first step towards better search rankings. The crawlers need to access your website before they can index it. Hence, the easier it is for search engines to crawl and access specific pages on your site, the higher the chances are of getting indexed and ranked for relevant keywords.

Site Structure and Internal Links

One aspect of improving crawlability is optimizing your site structure and internal linking strategy. A clear site architecture that organizes pages into categories or sections makes it easier for crawlers to navigate through your site while also establishing relevance and context between related pages. Internal links help signal which pages are more important than others and help users find their way around the site.

For example, consider a hotel website where each page offers information about a different amenity or facet of the experience in each room type. By having internal links on each page that point to other valuable amenities, you can help Google work out your website hierarchy and how its pages should be prioritized.

Think of your website as a virtual car dealership where all vehicles are organized into predetermined categories like make, model, and year of manufacture. Providing this kind of clarity in your website structure helps avoid confusing search engine robots as they traverse its content.

Speeding up Page Load Time

Search engines want to promote websites with fast load times to provide users with a smooth browsing experience. A slow-performing website can put off visitors, resulting in higher bounce rates, which can in turn hurt your rankings.

Slow loading speeds can harm both user experience and SEO efforts; hence constant monitoring and optimization should be part of your technical SEO strategy. Improvements may include image compression, server response time optimization, and minimizing HTTP requests that significantly affect load times.

Some webmasters may argue that page load speeds aren’t as crucial since most users are browsing on fast internet connections. However, this is not always the case; slow internet connections or high user traffic can cause long loading times and derail a user’s experience leading to a loss of potential customers.

By focusing on essential SEO elements such as crawling, indexing, and site architecture, your website can rank higher in search engines and get additional traffic directed towards it.

Site Structure and Internal Links

When it comes to improving your website’s crawlability, site structure and internal links play a vital role. A well-structured website with an organized hierarchy makes it easier for search engines to crawl and understand the content on each page. It also ensures that all pages on your site are accessible by users and search engine bots.

Consider building your website’s structure like a pyramid, where the homepage is at the top and the subsequent pages feed off of it. From there, you can arrange pages into categories or silos to create a clear path for both users and search engines to navigate. You want to make sure every important page is only a few clicks away from the homepage, making it easier for crawlers to reach them.
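To make the "few clicks from the homepage" idea concrete, here is a minimal sketch that measures click depth with a breadth-first search. The `site` dictionary and its URLs are hypothetical, and the cutoff of two clicks is only an illustrative assumption, not an official limit.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search from the homepage; returns {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site: each page maps to the pages it links to.
site = {
    "/": ["/rooms", "/dining"],
    "/rooms": ["/rooms/suite"],
    "/rooms/suite": ["/rooms/suite/gallery"],
    "/dining": [],
}

depths = click_depths(site, "/")
# Pages deeper than the (assumed) two-click budget are candidates for better linking.
deep_pages = sorted(page for page, depth in depths.items() if depth > 2)
print(deep_pages)
```

Pages that never appear in `depths` are not reachable from the homepage at all, which is usually an even stronger signal that they need an internal link.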

In addition, internal linking within your website allows you to spread authority across different pages. When one page ranks high in search results, you can use that authority to boost other related pages by linking them together. By doing so, you can establish a hierarchy of information that guides search engine bots through your website while simultaneously improving user experience.

However, too many internal links can have the opposite effect and negatively impact crawling efficiency. Over-linking may cause a confusing link structure which can hurt website rankings. Instead, be mindful of what pages need internal links, and make sure they make sense in the context of your site’s organization.

To ensure optimal crawlability on your website, prioritize organizing it with a clear hierarchy and focus on linking related pages together.

Content Optimization for Better SEO

Your site must have quality content that flows cohesively between webpages. It should be free from errors like duplicate content and 404 errors, and any redirects should be clear and intentional. Keep these factors in mind when creating content for your site, as they increase the number of indexing opportunities for search engine crawlers and thereby improve your organic traffic.

Analogous to building a strong foundation for your home or apartment, building a website with quality content starts at the ground level. A firm foundation will allow you to build upon the solid ground with new pages and fresh information that becomes interspersed into your structure. Focusing on structured data and providing valuable user information will only solidify this foundation and improve your search engine rankings.

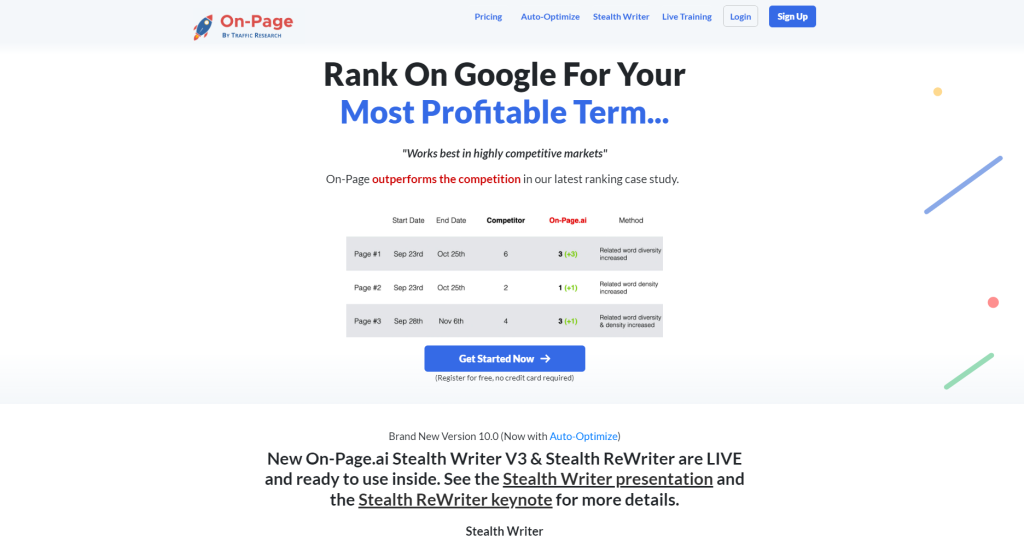

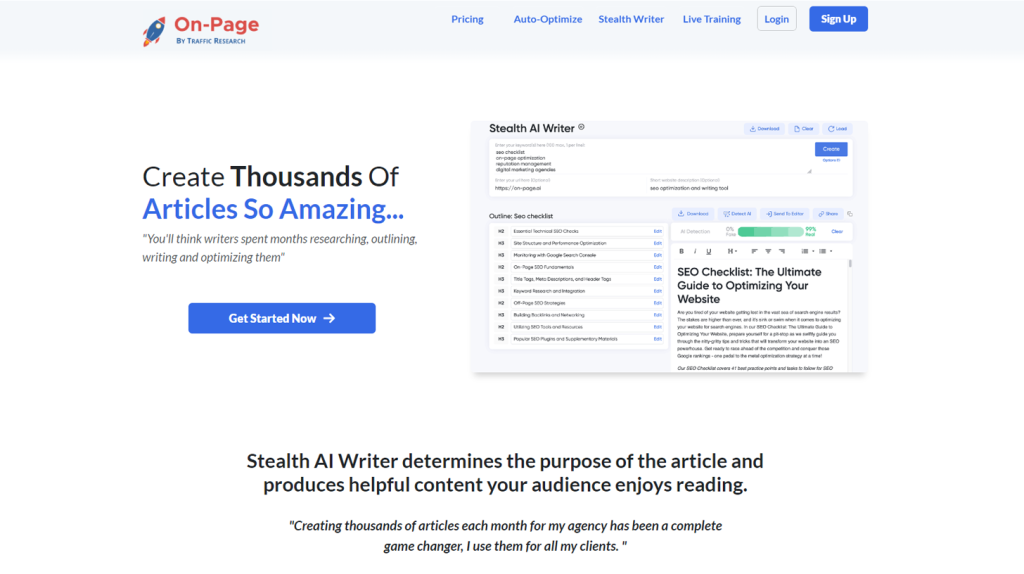

On-Page.ai’s AI-powered tool ensures that vital elements are covered in every piece of content you create. From headlines to body text to images, it analyzes the page’s relevance and provides recommendations to optimize it further. This feature helps with keyword placement in tags like H1, meta descriptions, alt tags in images, and other crucial elements to establish better relevancy that improves search engine rankings.

On the other hand, if your site has duplicate content at two different URLs, crawlers may not know which version to index. Broken pages (404 errors) are a related problem: they waste your crawl budget because bots request a page that doesn’t exist and leave with nothing to index. Linking out to broken URLs also hurts user experience by taking visitors nowhere. These are all things to avoid.

Ensuring good content quality means consistency over time through keyword research and staying up-to-date on trends relevant to what users seek. On-Page.ai offers content optimizations and tools that ensure you’re creating high-quality content and avoiding issues like duplicate content.

Avoid Duplicate Content and Looped Redirects

Duplicate content is a serious concern for websites because it can lead to poor user experience, lost traffic, and lower rankings on search engines. When a website has multiple URLs containing the same or substantially similar content, it is considered duplicate content. Search engines may not know which version to index or consider more authoritative. Therefore, website owners must ensure that there is only one URL per page.

One way to avoid duplicate content is by using canonical tags. Canonical tags tell search engines which URL should be indexed out of several identical duplicates. This technique helps prevent the dilution of link equity across different versions of the same page. It also consolidates ranking signals into a single URL, boosting SEO value.
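As a rough illustration, a canonical tag is a `<link rel="canonical" href="...">` element in the page’s head. The sketch below uses Python’s standard `html.parser` to read it back out of a page, which can be handy when auditing duplicates. The sample HTML and the `example.com` URL are made up for the example.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")

def find_canonical(html):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

# Hypothetical page markup.
page = (
    '<html><head>'
    '<link rel="canonical" href="https://example.com/shoes">'
    '</head><body></body></html>'
)
print(find_canonical(page))
```

Running this across every URL variant of a page should return the same canonical URL each time; if it doesn’t, the duplicates are sending mixed signals.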

Another problem that can cause duplicate content issues and lower crawlability is looped redirects. Looping occurs when two or more URLs redirect to each other in an endless cycle. While web crawlers are smart enough to detect looping redirections and stop the process after several attempts, this wastes crawl budget and reduces the effectiveness of indexing pages.

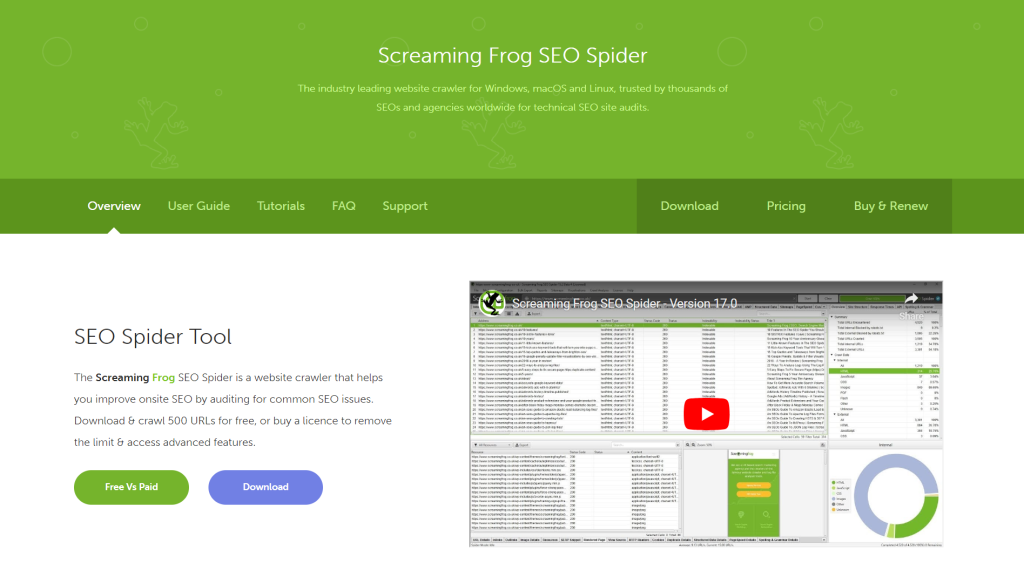

By checking web server logs and using online tools like Screaming Frog, website owners can detect looping redirects and resolve them with 301 or 302 redirects (depending on whether they’re permanent or temporary). Website owners should also update any backlinks pointing to redirected URLs so that search engine users land on the correct page.
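If you can export your redirects as a simple source-to-target mapping, a loop check is easy to sketch. The function below is a simplified assumption of how such a check might work, not how Screaming Frog or any crawler actually does it; the `redirects` dictionary and the `max_hops` limit of 10 are hypothetical.

```python
def follow_redirects(redirects, start, max_hops=10):
    """Follow a redirect map from `start`; return (path, status)."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"  # we came back to a URL already visited
        if len(path) - 1 >= max_hops:
            return path, "too many hops"  # long chain, give up
        seen.add(current)
    return path, "ok"

# Hypothetical redirect rules: source URL -> target URL.
redirects = {"/old": "/new", "/a": "/b", "/b": "/a"}

print(follow_redirects(redirects, "/old"))
print(follow_redirects(redirects, "/a"))
```

Any URL that comes back with a status other than "ok" is worth fixing so that it redirects straight to its final destination.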

Duplicate content can also arise from thin or low-quality pages that contain little original text or unique value for users. Not only can these pages harm crawling and indexing efficiency, but they can also result in penalties from search engines such as Google. By pruning weak pages or consolidating their content into stronger ones, website owners can improve crawlability by limiting internal competition for ranking signals.

Loops in navigation menus, breadcrumbs, paginations, and faceted filters on eCommerce sites can also lead to duplicate content issues. It is recommended to use rel="nofollow" attributes for links that lead to pages with similar or identical content, and to avoid infinite categories and subcategories.

Avoiding duplicate content and looping redirects on a website is like maintaining proper road signs on a highway. Without clear signs, drivers might get lost, take the wrong exit, or even cause accidents. Similarly, without canonical tags and proper URL redirects, web crawlers might index wrong or outdated pages from your site, confuse search results for users, and reduce your ranking authority.

Enhancing Website Discoverability

Website discoverability refers to how easily potential customers can find your site online. It’s not enough to create great content if people can’t find it. Using SEO techniques like those discussed in this article can help boost your website discoverability.

- One technique for improving website visibility is using descriptive meta titles and descriptions for each page on your site. These are the snippets of text that appear in search results underneath the page URL. By crafting unique titles and descriptions for each page that accurately reflect its contents, you can improve users’ understanding of what your website has to offer.

- Another way to enhance website discoverability is by having a well-structured internal linking system. Internal links provide a roadmap of your site’s hierarchy and make it easy for crawlers and users to navigate through your content. They also help distribute link equity across all pages of the site, which boosts SEO value overall.

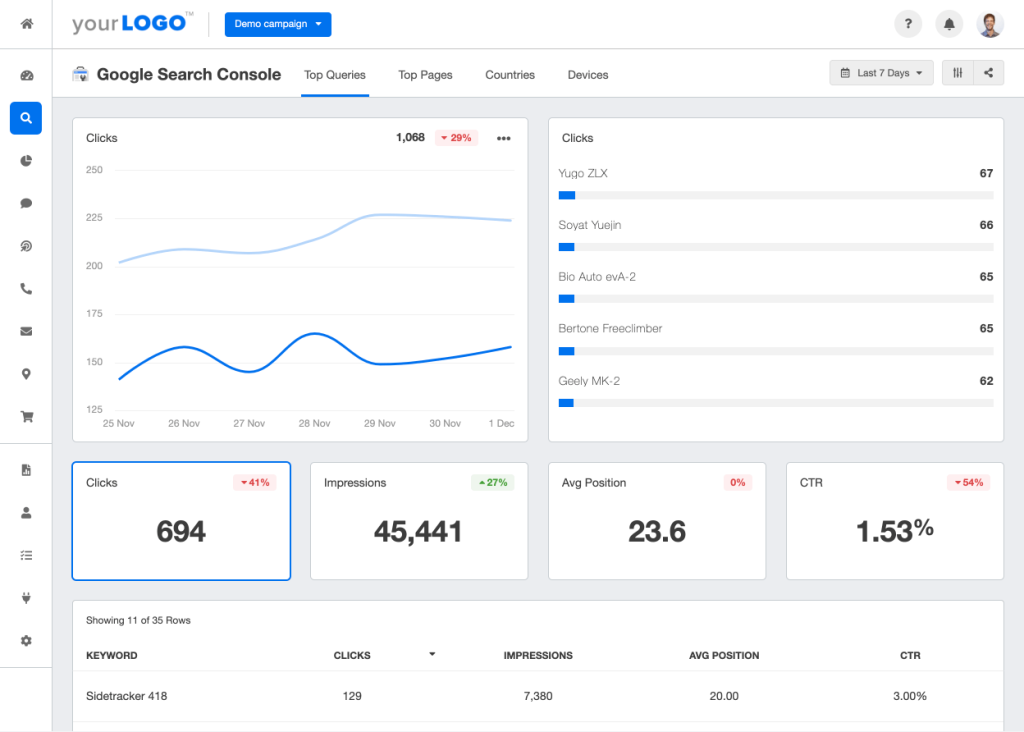

- Additionally, submitting a sitemap file to Google Search Console can help increase crawlability and indexing speed. A sitemap is a listing of all the URLs on your site that you want Google (and other search engines) to consider when crawling and indexing your site.

- Finally, reducing page load time is another essential factor affecting website discoverability. Slow-loading sites have higher bounce rates, meaning visitors leave before engaging with your content. This can lead to a decline in user satisfaction, ranking authority, and ultimately, revenue.

In fact, according to Google’s research, if page load times increase from 1 second to 3 seconds, the probability of bounce increases by 32%. If page load times increase from 1 second to 6 seconds, the probability of bounce increases by 106%. Therefore, optimizing website speed should be a top priority for website owners who want their pages to rank well on search engines.

Sitemap Submission and Page Load Speed

Submitting your sitemap to Google is an essential step in improving your website’s crawlability and indexability. A sitemap is a file that lists all the pages on your website, allowing search engines like Google to discover and crawl them more efficiently. It is especially helpful for sites with deep page hierarchies or weak internal linking, since it helps ensure that all your pages are accessible to search engine crawlers.
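For a sense of what a sitemap file contains, here is a minimal sketch that builds one with Python’s standard library. It covers only the required `<loc>` element of the sitemaps.org format; the `example.com` URLs are placeholders, and a real sitemap would usually be generated by your CMS or an SEO plugin.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

# Placeholder URLs for illustration.
xml_text = build_sitemap([
    "https://example.com/",
    "https://example.com/rooms",
])
print(xml_text)
```

The resulting text would be saved as something like `sitemap.xml` at the root of the site and then submitted through Google Search Console.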

Page load speed is another vital factor in SEO and crawlability. Slow loading times can harm your website’s ranking on Google, as well as affecting user experience. According to Google, the ideal page load time is under three seconds, but many websites struggle to meet this target. Slow loading times can be caused by multiple factors, such as large images, unnecessary plugins, too many ads, or poor server performance.

- To improve your website’s page load speed, you could optimize your images by compressing their size. You could also minimize HTTP requests by removing unnecessary plugins or widgets. Another way is to enable browser caching so that returning visitors can load your site faster. Additionally, ensure that your website is hosted on a reliable server with enough resources to handle traffic spikes without slowing down.

- By making these improvements, you’ll not only enhance your website’s crawlability and indexability but also improve user experience. Fast-loading websites typically have lower bounce rates and higher engagement rates since users are more likely to stay on a site that loads quickly. Moreover, Google prioritizes user experience when ranking websites, so optimizing for load time directly affects your website’s search engine visibility.

- Some argue that page load speed isn’t as crucial for SEO as other factors like content quality and backlinks. However, while these elements are undoubtedly important components of SEO, page load speed can significantly impact your website’s ranking performance. Google’s algorithms consider page load time as a crucial factor in determining a website’s overall quality, presenting it as an important metric in their search rankings.

Think of your website like a physical store. If the store is hard to navigate or takes ages to load, customers are likely to leave and never come back. Similarly, if your website is slow and difficult to use, users will bounce off and go somewhere else. In contrast, a well-designed store with fast customer service encourages visitors to stick around and browse.

Improving your website’s crawlability and indexability requires effort, dedication, and the right tools. On-Page.ai can help you optimize your website for SEO by providing detailed recommendations that cater directly to Google’s crawling and indexing requirements. Additionally, On-Page.ai’s Stealth Writer can create high-quality content based on any keywords you desire, while the Stealth ReWriter makes sure your existing content is SEO-friendly. Try On-Page.ai for all your SEO needs and you can drastically improve your website’s ranking performance, crawlability, and user experience.

Answers to Common Questions

How do search engine bots crawl and index websites?

Search engine bots crawl and index websites by following links from one web page to another. They typically start from pages they already know about, such as your homepage, and then follow links to other pages on the website, as well as external links to other websites.

It’s essential to ensure your website is easy for search engine bots to crawl to improve its SEO ranking. Google states that it uses several crawling techniques such as sitemaps, robots.txt files, and URLs found during previous crawls to discover content.
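To see how a robots.txt file shapes what a crawler may fetch, here is a small sketch using Python’s built-in `urllib.robotparser`. The rules and the `example.com` URLs are invented for the example, and Python’s parser is only an approximation: it applies the first matching rule, which is not necessarily how Googlebot resolves conflicts.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents.
rules = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# Ask whether a crawler identifying as "Googlebot" may fetch each URL.
can_home = parser.can_fetch("Googlebot", "https://example.com/")
can_admin = parser.can_fetch("Googlebot", "https://example.com/admin/login")
print(can_home, can_admin)
```

A quick check like this can catch a stray `Disallow` rule that accidentally blocks pages you want crawled.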

According to a study conducted by Ahrefs in 2017, the average lifespan of a web page is two years. This means that if your website isn’t crawled frequently and efficiently, it could lead to a lower ranking in search engine results pages (SERPs).

To improve your website’s crawlability, you can:

1. Use a comprehensive website structure: Make sure your website has an organized structure with clear hierarchy and links between pages.

2. Optimize your URL structure: Use descriptive and relevant keywords in your URLs but avoid using too many parameters.

3. Submit XML sitemaps: Submitting your sitemap ensures that search engines know about all the pages on your site.

4. Monitor crawl errors: Regularly check for any crawl errors on your site and fix them promptly.
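As a quick illustration of point 2, the sketch below flags URLs that carry a lot of query parameters. The limit of two parameters is an arbitrary assumption chosen for the example, not a threshold published by any search engine, and the URLs are placeholders.

```python
from urllib.parse import urlsplit, parse_qsl

def too_many_params(url, limit=2):
    """Return True if the URL has more than `limit` query parameters."""
    query = urlsplit(url).query
    return len(parse_qsl(query, keep_blank_values=True)) > limit

# Placeholder URLs for illustration.
clean_url = "https://example.com/rooms/deluxe-suite"
messy_url = "https://example.com/p?id=7&sort=asc&ref=nav&session=abc"

print(too_many_params(clean_url))
print(too_many_params(messy_url))
```

URLs that get flagged are good candidates for a cleaner, more descriptive path or for a canonical tag pointing at the preferred version.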

In conclusion, understanding how search engine bots crawl and index websites is crucial to improving your website’s SEO ranking. Effective strategies such as having a comprehensive website structure, optimizing URLs, and submitting XML sitemaps improve crawling efficiency, while monitoring errors helps uphold optimal performance long-term.

How do internal linking and sitemaps impact a website’s crawlability?

Internal linking and sitemaps play a vital role in a website’s crawlability. Internal linking involves linking pages within your website to each other using hyperlinks. Search engines use these links to navigate through your website, understand the content on each page, and determine its relevance to specific search queries.
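Here is a minimal sketch of how a crawler might pull internal links out of a page, using only Python’s standard library. It treats a link as internal when it resolves to the same host as the page itself, which is a simplifying assumption; the sample HTML and hostnames are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

class LinkCollector(HTMLParser):
    """Collects absolute URLs of <a href> links that stay on the same host."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.internal = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        absolute = urljoin(self.base_url, href)  # resolve relative links
        if urlsplit(absolute).netloc == urlsplit(self.base_url).netloc:
            self.internal.append(absolute)

def internal_links(html, base_url):
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.internal

# Hypothetical page content.
html = (
    '<a href="/rooms">Rooms</a> '
    '<a href="https://other.example/x">Partner</a> '
    '<a href="spa">Spa</a>'
)
links = internal_links(html, "https://example.com/amenities/")
print(links)
```

Running this over every page gives you the raw link graph, which you can then inspect for pages that receive few or no internal links.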

Sitemaps are files that provide information about all the pages on your website, including their hierarchy and content. They act as guides for search engine bots as they crawl through your site and help them access pages that might be hard to find through traditional navigation methods.

According to a study by SEMrush, websites with strong internal linking have 40% more organic traffic compared to those with weak or non-existent internal linking. Similarly, websites with well-optimized sitemaps have a higher chance of getting indexed quickly by search engines.

In conclusion, internal linking and sitemaps are crucial elements in improving a website’s crawlability and overall SEO performance. By optimizing both, you can help search engines understand the structure of your website better and improve its ability to rank on relevant searches.

What role does website speed play in crawlability and SEO?

Website speed plays a critical role in crawlability and SEO. When search engines crawl websites, they want to deliver the best possible user experience to their users. A website’s speed is an integral part of that experience. Slow-loading sites can frustrate users and cause them to abandon the site, leading to lost traffic, engagement, and revenue.

In fact, Google has long recognized the importance of page speed in its algorithm as it affects a user’s experience on your website. In 2021, Google started including Core Web Vitals as ranking factors for SEO performance. Core Web Vitals consist of three metrics covering loading speed, interactivity, and visual stability. In particular, loading speed, measured by Largest Contentful Paint (LCP), is an essential measure of how fast a page loads.
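To show how an LCP reading is usually interpreted, the sketch below buckets a measurement using Google’s published thresholds: 2.5 seconds or less is rated good, and anything above 4 seconds is rated poor. The function name and sample values are made up for illustration; the measurement itself would come from a tool such as PageSpeed Insights.

```python
def rate_lcp(seconds):
    """Bucket a Largest Contentful Paint time using Google's thresholds."""
    if seconds <= 2.5:
        return "good"
    if seconds <= 4.0:
        return "needs improvement"
    return "poor"

# Sample measurements in seconds (illustrative values only).
for lcp in (1.8, 3.2, 5.0):
    print(lcp, rate_lcp(lcp))
```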

A recent study by Akamai showed that even a minor delay of just one second in website load time could lead to a 7% reduction in conversions, a 16% reduction in customer satisfaction, and an 11% drop in page views. Furthermore, according to Google, more than half of mobile users will leave a website if it takes longer than three seconds to load.

Therefore, improving your website speed should be high on your priority list. You can improve website speed through techniques like optimizing images, minimizing CSS and JavaScript files, and reducing server response times with faster hosting or a Content Delivery Network (CDN).

Overall, faster load speeds support better crawlability and ultimately better SEO performance. Any improvement in page speed brings you one step closer to the top of Google’s SERPs!

What factors affect a website’s crawlability and how can they be optimized for better SEO?

There are several factors that can affect a website’s crawlability and subsequently, its search engine optimization (SEO). Here are some of the most important ones:

1. Site structure and navigation: A clear site structure with organized navigation is crucial for optimal crawlability. This helps search engines understand what your site is about and how content is related. According to a study by Search Engine Journal, websites with a clear site structure see up to a 58% increase in organic traffic.

2. URL structure: URLs should be concise and descriptive, including relevant keywords if possible. Avoid dynamic parameters and excessive use of subdomains as these can confuse search engine crawlers.

3. Site speed: Website load time is an important factor for both user experience and SEO. Slow-loading sites have been shown to have higher bounce rates and lower search engine rankings. Google recommends a maximum load time of 3 seconds for websites.

4. Mobile responsiveness: With more than half of all internet traffic coming from mobile devices, having a mobile-friendly website is crucial for SEO. Google also announced plans to move all websites to mobile-first indexing, with a target date of March 2021.

5. Quality content: High-quality content that is regularly updated is essential for attracting both users and search engines. According to Hubspot, companies that blog receive 97% more backlinks to their website, which can significantly improve their search engine rankings.

By optimizing these factors and ensuring your website is easy to navigate and engaging, you can boost its crawlability and SEO performance, which will lead to increased visibility in search results pages and ultimately drive more traffic to your site.

What are some common crawl errors and how can they be fixed?

Common crawl errors can severely impact a website’s search engine rankings and traffic. Here are some of the most frequent ones and how to fix them:

1. 404 Errors: A 404 error occurs when a page cannot be found on a website. These errors can result from broken links or pages that have been removed or renamed. Fixing 404 errors involves either redirecting the missing page to a new URL, fixing the broken link or updating the website sitemap.

2. Redirect Errors: Redirect errors occur when a redirect from one URL to another does not resolve cleanly, for example because it points to a dead URL, forms a long chain, or loops. Redirects can be useful for preserving existing backlinks or moving content to a new location, but if used incorrectly they can hurt your site’s SEO by misdirecting bots and users, resulting in high latency and shorter visits. Fixing redirect errors requires verifying that all redirects point to live URLs, using the appropriate redirect type, deleting unnecessary redirects, and regularly checking for redirect chains.

3. Broken Links and Inconsistent Navigation: Broken links harm website functionality by disrupting navigation and ease of access. Sitemaps with outdated links and pages with orphaned content are common causes of inconsistent navigation, where the navigation structure does not mirror the site architecture. These errors can undermine web accessibility, which may frustrate visitors, raise bounce rates, and ultimately hurt your SERP rankings. Repair broken links by setting out a crawl strategy, checking regularly for broken links, screening lower-traffic URLs, and making sure nothing is missing from the website structure.

4. Duplicate Meta Descriptions and Titles: Duplicate meta descriptions or title tags make it harder for search engines to determine which content is most valuable for particular keywords, and may result in lower visibility and less real estate on results pages. Fix them by conducting regular SEO audits, writing unique metadata for each page based on its content in line with on-page best practices, and creating only original metadata for newly added pages.
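Duplicate titles are one of the easier items on this list to check automatically. The sketch below groups pages by title and reports any title used more than once. It assumes you already have a mapping of URLs to titles, for example from a crawl export; the `pages` data is invented for the example.

```python
from collections import defaultdict

def duplicate_titles(pages):
    """Group URLs by normalized title; return only titles used more than once."""
    by_title = defaultdict(list)
    for url, title in pages.items():
        by_title[title.strip().lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}

# Hypothetical crawl export: URL -> <title> text.
pages = {
    "/rooms": "Rooms | Example Hotel",
    "/rooms/suite": "Rooms | Example Hotel",
    "/dining": "Dining | Example Hotel",
}

dupes = duplicate_titles(pages)
print(dupes)
```

The same approach works for meta descriptions: swap the titles for description text and look for groups with more than one URL.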

Improving website crawlability is a continuous process that every website owner or digital marketer should prioritize. Fixing these common errors and continuously monitoring for others can be the difference between generating traffic to your site and being invisible to your audience.