Imagine trying to analyze the pages of a massive library, only to find that numerous books are hidden away in locked rooms, while others have random pages missing. This is precisely what Google’s crawlers experience when encountering websites with poor crawlability and indexability. Don’t let your site become an impenetrable fortress to search engines – uncover the key to unlocking your website’s full potential as we unveil top tips for boosting your site’s crawlability and indexability, all but guaranteeing higher organic rankings and unprecedented traffic growth.

There are several proven methods to improve the crawlability and indexability of your website, such as optimizing page loading speed, strengthening internal link structure, submitting your sitemap to Google, updating your robots.txt file, regularly adding fresh content, and ensuring proper formatting of your web pages. By focusing on these areas, you can help search engine bots crawl and index your site more efficiently, leading to improved SEO performance and increased organic traffic.

Importance of Crawlability and Indexability

For your website to be discoverable, it must be easily found by search engines like Google. To achieve this, your website’s crawlability and indexability must be optimized. Crawlability, as the name suggests, is the ability of search engine bots to scan and read your website’s content quickly and accurately. On the other hand, indexability refers to how Google interprets and adds your site’s pages to its database or index.

Think of it this way: imagine a library with millions of books on various subjects. The librarians group books by genre, so someone looking for a particular book can find it quickly. If the library had no organization, wouldn’t it take an awfully long time to find a book? The same principle applies to search engine bots. If your website’s structure isn’t well organized, with simple navigation and a clear hierarchy from headers to footers, it becomes difficult for web spiders to crawl your site and understand its content.

Additionally, other factors impact crawlability, such as page loading speed, site design, coding quality, and site format. Slow-loading pages can lead web spiders to leave a website before crawling all of its pages. Poor site design makes it hard for bots to navigate and understand a site’s content, which significantly affects indexing. Lastly, invalid or broken markup can confuse spiders about what a page contains, so the page may be indexed poorly and lose out to less relevant results.

Google has grown more sophisticated over the years and now evaluates pages on their relevance rather than on simple keyword matches, including keywords hidden in a page’s code by developers with little regard for search engine optimization (SEO) best practices.

However, even with Google’s greater emphasis on relevance and user experience over keyword cramming in pages or meta descriptions, well-structured sites always have an edge over poorly organized ones.

This makes crawlability and indexability two of the most significant aspects of SEO.

Impact on Site Rankings

Your website’s ranking on Google is determined by multiple factors, including the quality of backlinks, domain authority, social media presence, user experience, and quality content, but it all begins with crawlability and indexability. A well-crawled site leads to higher search engine rankings and more organic traffic. Regularly publishing new content and fixing crawl errors helps achieve better crawlability and indexability.

Imagine your website is a restaurant. The menu (your content) must be easy for your customers (Google’s bots) to find before they can buy what you are selling. If the place is disorganized and unappealing, you’ll lose customers, just as users lose interest in clicking through your pages when they can’t understand your content or quickly find what they need.

Crawl errors such as server errors can lower search engine rankings: when Google’s bots keep running into them, they reduce crawling activity on the site. Unindexed pages hurt a website’s organic reach because they cannot appear in searches for any relevant keyword.

That said, keep in mind that crawlability and indexability are not the only factors in search engine visibility, but they significantly affect overall SEO quality.

- According to a study conducted by Forrester Research, over 71% of internet users begin their web sessions with a search query on a search engine. Increasing your site’s crawlability and indexability helps your content rank higher in search results, leading to more traffic.

- Page speed plays an essential role in crawlability. Research conducted by Backlinko found that the average loading time for a webpage on Google’s first page of results was under 3 seconds, making quicker load times advantageous for both search engines and user experience.

- A study published by Moz discovered that websites with solid internal linking structures had 44% more unique pages indexed than those with weak linking systems, emphasizing the importance of interconnecting your site’s content for better crawlability and indexability.

Benefits for Users and Search Engines

Improving your website’s crawlability and indexability brings numerous benefits not only for search engines but also for users. For users, the work that makes a site easy to crawl, such as faster loading times and clearer navigation, also improves their experience on your site. A good user experience leads to more engagement and higher conversions, which are essential metrics that signal the success of your online presence.

From the search engine perspective, better crawlability and indexability bring higher visibility on search engine results pages (SERPs). More importantly, it makes it easier for search engines such as Google to accurately rank and display websites based on their intended audience or demographics – ultimately serving users with more relevant results.

When a website is difficult to crawl or improperly indexed, it can lead to major issues with its ranking. One possible issue is that a search engine may fail to recognize the main content of the page. Another arises when important pages are buried too deep in a site’s hierarchy, making it difficult for web spiders to reach or process them. Both problems can result in lower rankings.
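To make "buried too deep" concrete, the sketch below measures click depth: the minimum number of links a crawler must follow from the homepage to reach each page. It is only an illustration. The `site_links` map is a hypothetical site, and the cutoff of two clicks is an arbitrary assumption rather than a rule from any search engine.

```python
from collections import deque


def click_depths(links, start):
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each key links to the pages in its list.
site_links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": ["/products/widgets"],
    "/products/widgets": ["/products/widgets/blue"],
    "/orphan": [],
}

depths = click_depths(site_links, "/")

# Pages more than two clicks from the homepage (assumed threshold).
deep_pages = sorted(page for page, depth in depths.items() if depth > 2)

# Pages that no chain of internal links reaches at all.
unreachable = sorted(set(site_links) - set(depths))
```

Pages that show up in `deep_pages` or `unreachable` are candidates for extra internal links from higher-level pages.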

In addition, poor navigation structure can cause users to quickly leave the bottom-tier pages of your website if they find it difficult to get back up. This leads to high bounce rates and lower user retention rates, so ensuring a good internal linking system is highly beneficial for both parties involved.

Think about a large bookstore with shelves upon shelves of books. If customers can’t find what they’re looking for easily, they may leave the store without making a purchase. Similarly with web crawlers, if your content isn’t organized properly or intelligently linked through your website’s pages, Google might have trouble indexing your website leading to decreased SERP visibility.

Improving crawlability and indexability means helping search engines better understand the nature of your website and the intent of your audience, and showing users exactly what they want, when they need it.

Tips to Enhance Crawlability

Fortunately, there are several ways to enhance your crawlability and improve the discoverability of your website. Below are some tips that can assist in producing better indexing results.

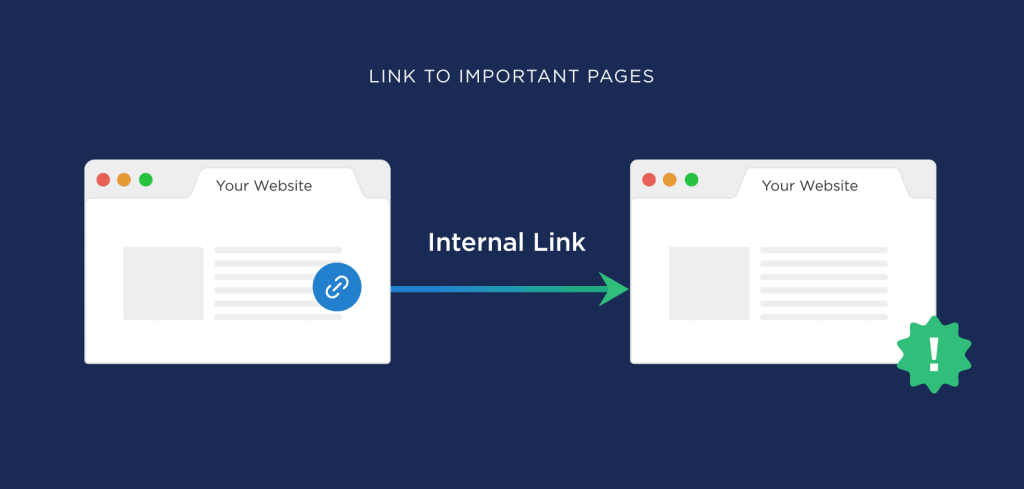

First off is the internal linking system. There are different link types including outbound links, inbound links, and—most important for our purposes—internal links. These internal links demonstrate a site’s structure. They connect pages together on the same domain and act as a signal to crawlers that easy navigation functionality has been considered when designing the website.

Keep in mind that both too many and too few internal links can cause problems. Overdoing internal linking between unrelated pages can hurt rankings, while having too few links gives crawlers insufficient navigation signals, resulting in limited search engine visibility.

The homepage is often a visitor’s first landing page, so how well it is designed and linked internally shapes how the rest of the site is evaluated. Not all content on a site is created equal, so prioritize related keyword terms and label all sections appropriately. If you wouldn’t make a certain piece of content part of your homepage’s internal structure, question why it’s included in the first place.

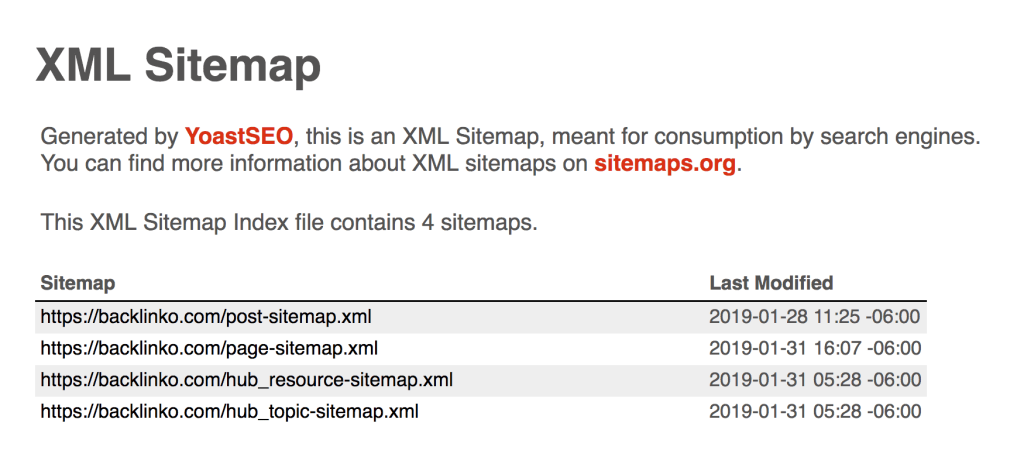

Another specific step is creating an XML sitemap for your website. A sitemap gives search engines like Google data about your site’s structure and content, allowing them to crawl it more easily. Make sure your robots.txt file is configured properly so that search engines can reach the pages your sitemap lists; the file can also point crawlers to the sitemap itself with a Sitemap line.

Whether your site is big or small, make sure crawlers won’t hit dead ends. Incorrect URLs that return 404 errors can hurt SERP rankings over time and diminish your audience’s trust in the website.
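A dead-end check can be sketched in a few lines. The example below is a simplified illustration, not a full crawler: `page_links` and `statuses` are hypothetical crawl data, and `status_of` is a stand-in for whatever actually fetches a URL, such as an HTTP client or a crawler export.

```python
def find_broken_links(links, status_of):
    """Return (source, target, status) for every link whose target returns an error."""
    broken = []
    for source, targets in links.items():
        for target in targets:
            status = status_of(target)
            if status >= 400:  # 4xx and 5xx responses are dead ends for crawlers
                broken.append((source, target, status))
    return broken


# Hypothetical crawl data: which pages link where, and the HTTP status each
# URL returned. Real statuses would come from live requests.
page_links = {
    "/": ["/blog", "/old-pricing"],
    "/blog": ["/", "/blog/post-1"],
}
statuses = {"/": 200, "/blog": 200, "/blog/post-1": 200, "/old-pricing": 404}

# Unknown URLs are treated as 404 in this sketch.
broken = find_broken_links(page_links, lambda url: statuses.get(url, 404))
```

Each entry in `broken` names the page that contains the bad link, which is the page you need to edit or redirect.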

Internal Linking and Site Structure

Internal linking is one of the most crucial factors for improving website crawlability. When your website has a strong internal linking system, it helps search engine bots understand the hierarchy and relevance of your web pages. Internal links act as a roadmap for both users and search engines to navigate through your content, making it easier for them to discover new pages.
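To see the internal links a crawler would find on a page, you can parse its HTML and separate links on your own domain from links that leave it. The following is a minimal sketch using only Python’s standard library; the `LinkCollector` class, the sample markup, and the example.com URLs are made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the links on a page, split into internal and external."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.internal = []
        self.external = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the URL of the page being parsed.
        absolute = urljoin(self.base_url, href)
        same_host = urlparse(absolute).netloc == urlparse(self.base_url).netloc
        (self.internal if same_host else self.external).append(absolute)


# Hypothetical page markup.
sample_html = (
    '<a href="/products">Products</a> '
    '<a href="post-1">A post</a> '
    '<a href="https://other.example/x">Elsewhere</a>'
)

collector = LinkCollector("https://www.example.com/blog/")
collector.feed(sample_html)
internal_links = collector.internal
external_links = collector.external
```

Running this over every page gives you the raw material for a link map of the site, which shows which pages are well connected and which are barely linked at all.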

To understand the importance of internal linking, consider an analogy of a grocery store layout. Just like how products are arranged in sections and aisles for easy navigation, your website should be organized into different categories and subcategories using internal links. The more relevant internal links you have on your site, the easier it is for search engine bots to crawl through your content and index important pages.

Proper site structure is also important for enhancing crawlability. Having a well-organized site structure makes it easy for Google to crawl through your website’s content and find relevant information quickly. If you have a disorganized site structure, search engine bots might miss important content which can negatively impact your site rankings.

Imagine you’re looking for ingredients in someone else’s kitchen. It would be much easier to navigate if there were labels on drawers and cabinets, or organized sections for each type of ingredient – spices, grains, baking goods, and more. Similarly, having clear sections on your website and clearly labeling them with internal links allows search engines to easily navigate through your content.

Many people debate whether footer links are helpful for SEO or not. While footer links might not have as much weight as header links or top navigation menu anchors, they still contribute positively to the overall user experience by providing quick access to important pages.

Now that we’ve established why internal linking and proper site structure matter so much for website crawlability, let’s dive into the strategies and best practices for implementing them effectively.

Strengthening Indexability

Indexability is another crucial factor when it comes to SEO. The better your website’s indexability, the more likely it is to appear in SERPs. One of the easiest ways to improve your website’s indexability is to create a sitemap.

A sitemap acts as a blueprint of your website, helping search engine bots to crawl through its content easily. You can think of it like an index in a book. It gives you an overview of all the pages on your website and their hierarchical structure. When you submit your sitemap to search engines, it helps them discover new or updated pages on your site quickly and efficiently.

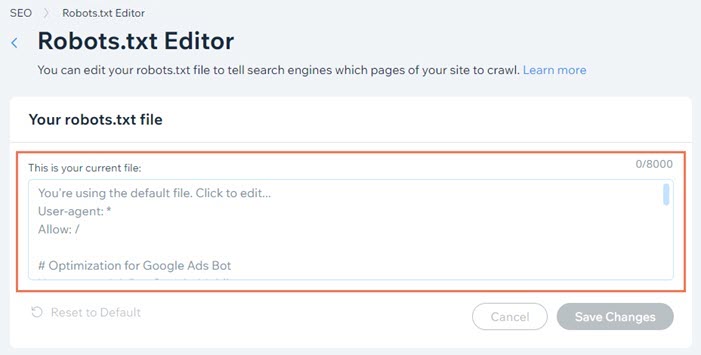

Another important factor for strengthening indexability is updating your robots.txt file. This file tells search engine bots which pages they should crawl and which ones they should avoid. Without a proper robots.txt file, search engines could crawl pages that are meant to be hidden from users, such as private pages or admin sections.

To understand why updates are essential to your robots.txt file, consider an analogy where you’re setting up a security system for your house. Just like how you would install alarms on doors or windows to restrict access, you need to configure your robots.txt file to limit search engines’ access to specific parts of your site.

Some webmasters mistakenly believe that excluding pages from crawlers generates positive ranking signals. However, blocking important content can hurt page rankings if Google cannot find and understand it. Therefore, it is crucial not to use the robots.txt file as a way of hiding content from Google.

Now that we’ve explored the importance of sitemaps and robots.txt files in improving indexability, let’s explore some additional strategies and types of tools that can help monitor and improve crawlability.

Sitemaps and Robots.txt Updates

One of the most critical aspects of optimizing crawlability and indexability is ensuring that search engine crawlers can access all the pages on your website. This is where sitemaps and robots.txt files come in handy.

A sitemap is a file that provides an outline of your website’s structure to search engines. It contains a list of all the URLs on your website along with metadata that describes each page’s content. By submitting your sitemap to Google, you help it learn about multiple pages simultaneously, making it beneficial for indexability, especially for deep websites, new pages, or those with poor internal linking.
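As an illustration of what such a file contains, the sketch below builds a small XML sitemap with Python’s standard library. The page list and the example.com URLs are placeholders, and a real site would usually let its CMS or an SEO tool generate the file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a sitemap XML string from dicts with 'loc' and optional 'lastmod'."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        if "lastmod" in page:
            ET.SubElement(url, "lastmod").text = page["lastmod"]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; lastmod is the optional metadata field used here.
sitemap_xml = build_sitemap([
    {"loc": "https://www.example.com/", "lastmod": "2024-01-15"},
    {"loc": "https://www.example.com/products/widget"},
])
```

The resulting string can be saved as `sitemap.xml` at the site root and submitted in Google Search Console.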

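Robots.txt, on the other hand, is a text file located in the root directory of your site that tells search engine crawlers which parts of your site not to crawl. By customizing your robots.txt file, you can keep bots away from pages that don’t belong in search results, such as admin pages or login portals, saving their resources for more important pages. Note that robots.txt controls crawling rather than indexing: a blocked URL can still end up in the index if other pages link to it, so a page that must stay out of search results needs a noindex directive on a crawlable page instead.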
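Before deploying a robots.txt change, it helps to test which URLs the rules actually block. The sketch below does this with Python’s built-in `urllib.robotparser`; the rules and URLs are invented for the example, and Google’s own parser may treat some edge cases differently from this module.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block the admin area and the login page,
# and advertise the sitemap location.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

can_crawl_product = parser.can_fetch("*", "https://www.example.com/products/widget")
can_crawl_admin = parser.can_fetch("*", "https://www.example.com/admin/settings")

# Sitemap URLs declared in the file (site_maps() requires Python 3.8+).
declared_sitemaps = parser.site_maps()
```

Here the product page stays crawlable while the admin page is blocked, which is the outcome the paragraph above describes.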

For example, imagine you’re running an e-commerce store with thousands of product pages. If you were to accidentally block these pages in your robots.txt file or forget to include them in your sitemap, search engine crawlers would have no way of knowing they exist – preventing them from being indexed and ultimately leading to lower search rankings.

Submitting a sitemap to Google Search Console doesn’t guarantee every URL will be indexed, but it does make it easier for bots to find and evaluate them. Moreover, blocking certain sections using robots.txt and including others in the sitemap ensures optimized crawl paths and lessens duplicated work on less valuable material.

Let’s take a closer look at how these two files work together in improving crawlability and indexability.

Firstly, before creating a sitemap or editing a robots.txt file, you must understand how your website is structured. You can use a content analysis tool to scan and examine these files. Consider sorting your pages into categories with clear headings like “Products,” “Blog,” or “About Us.” This way, it’s easier to prioritize the most important parts of your website and create an accurate sitemap.

Some people might argue that allowing every page on your site to be crawled and indexed harms crawl efficiency and spreads link value across less valuable pages. While this may be true in certain cases, there are other ways to direct crawlers, such as canonical tags or nofollow links. Webmasters should therefore still list every page in the sitemap and restrict crawling with robots.txt only when necessary.

Now that we’ve covered the basics of sitemaps and robots.txt files, let’s explore some tools and strategies you can use to analyze your website’s crawlability and indexability.

Tools and Strategies for Monitoring and Improvement

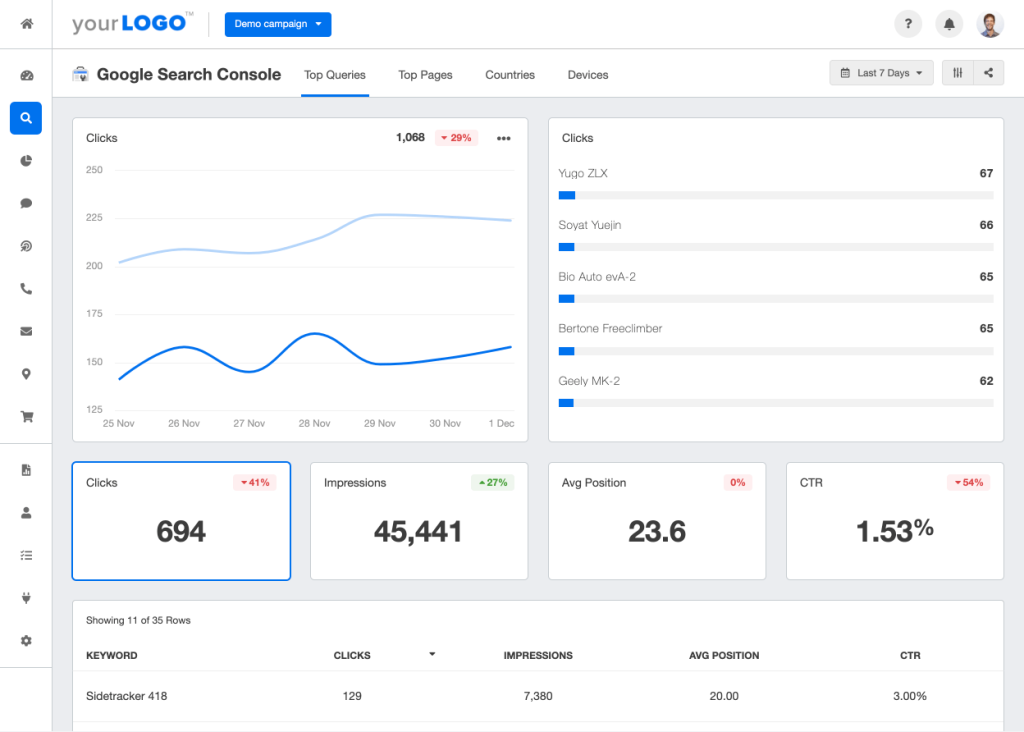

One useful tool for improving crawlability is Google Search Console. This free service lets you monitor your website’s indexing status, identify crawl errors, submit sitemaps, test robots.txt files, and much more. By analyzing the reports provided by Google Search Console, you can make strategic updates that bolster your site’s visibility on search engine results pages.

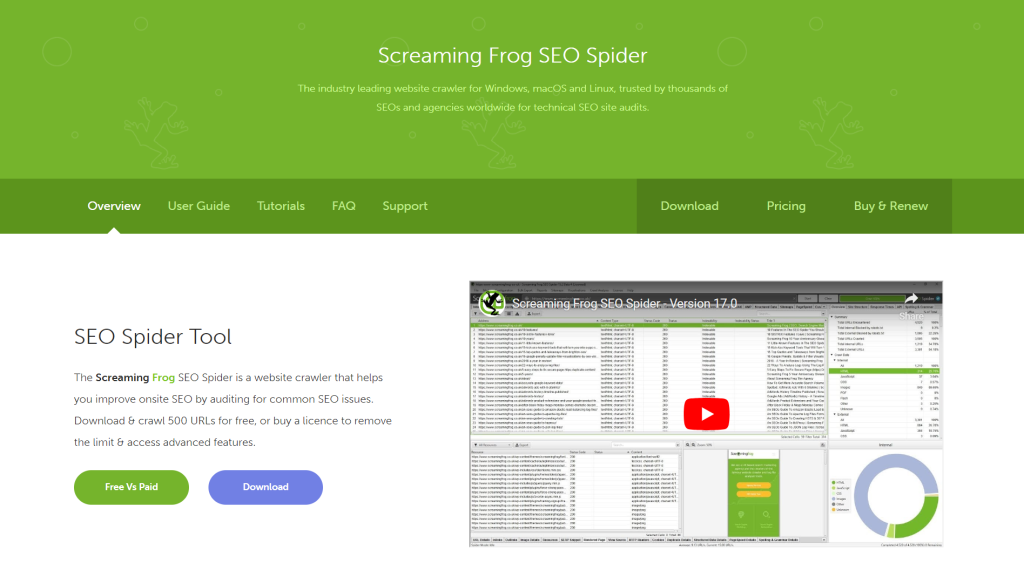

Another helpful tool is Screaming Frog SEO Spider. It allows you to crawl up to 500 URLs for free, find broken links, create XML sitemaps, audit redirect chains, and more.

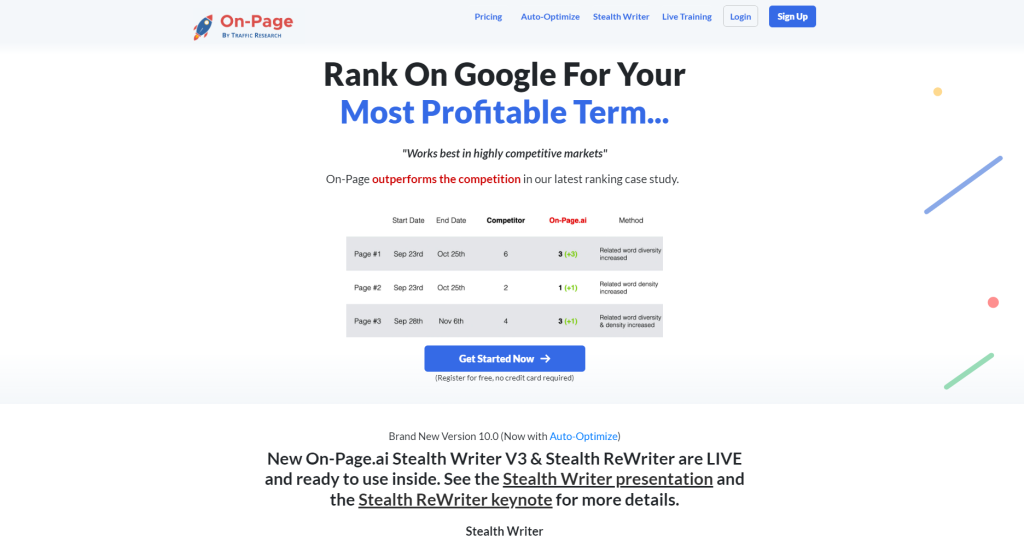

Finally, a more recently developed tool is On-page.ai. Its On-page scans let you crawl sites just like Google, identifying main content, sidebars, footers, headers, and advertisements to provide accurate recommendations. This helps users increase their page’s relevance and has been shown to increase web rankings.

Both approaches help improve crawlability: using Screaming Frog SEO Spider to analyze your website’s internal link structure and fix the broken links its report identifies, or using On-page.ai to optimize header and body text based on its page recommendations.

Conversely, some people might argue that all these tools aren’t necessary for small websites with fewer pages that can be easily managed independently without specialized tools. While it is true that small websites need less management than bigger websites, proper optimization can result in an increase in organic traffic and a lower bounce rate.

Think of these monitoring and improvement tools as inspection equipment for car mechanics. While not necessary for everyone, they are critical for finding issues with larger machines where it is impossible to keep track of all the parts manually.

Whether your website is large or small, implementing sitemaps and robots.txt updates alongside regular analysis of crawlability metrics helps both users and search engines find relevant new content, so make sure to use them properly for optimal performance. Check out how On-Page.ai’s optimization features can help with your SEO campaign.

Responses to Common Questions with Detailed Explanations

How can page load speed affect a site’s crawlability and what are some strategies for improvement?

Page load speed can have a significant impact on a site’s crawlability. Slow-loading pages can lead to decreased crawl frequency, as search engine crawlers often prioritize faster-loading sites in their indexing process.

Additionally, slow page load speeds can hurt user experience and ultimately result in decreased traffic and lower search engine rankings. According to Akamai, even a delay of one second in page load time can cause a 7% decrease in conversions.

To improve page load speed and enhance crawlability, there are several strategies that site owners can employ. One effective approach is to optimize images and other media elements for faster loading times. This can be accomplished by compressing image files before uploading them, using responsive design to ensure optimal display on all devices, and leveraging browser caching for frequently accessed resources.

Other strategies for improving page load speed and crawlability include reducing the use of JavaScript, improving server response times, and implementing technologies like Accelerated Mobile Pages (AMP) which prioritize speed and mobile optimization.

By focusing on these key areas of website optimization, site owners can improve their site’s overall performance and increase its visibility in search engines.

How are meta tags important for search engine indexing, and what guidelines should be followed when creating them?

Meta tags are an essential element of a webpage that can significantly impact search engine indexing. These tags provide information about the content of a page to search engines, which helps them determine how to index and rank the page in their search results.

When creating meta tags for your website, it’s important to follow some guidelines. Firstly, make sure you include relevant keywords and phrases that accurately describe your site’s content. Keyword stuffing is not recommended and may hurt your website’s rankings instead of improving them.

It is critical to keep the title tag concise and under 60 characters so that it appears in its entirety on the search engine result page. Similarly, the meta description tag should be brief and engaging to attract users. Make sure it doesn’t exceed 155 characters as search engines will truncate beyond this length.
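These length guidelines are easy to check automatically. The sketch below uses the 60- and 155-character limits mentioned above; the function name and messages are illustrative, and since search engines actually truncate by pixel width, character counts are only an approximation.

```python
TITLE_LIMIT = 60
DESCRIPTION_LIMIT = 155


def check_meta_lengths(title, description):
    """Return a list of problems with a page's title and meta description."""
    problems = []
    if not title.strip():
        problems.append("title is missing")
    elif len(title) > TITLE_LIMIT:
        problems.append(f"title is {len(title)} characters (limit {TITLE_LIMIT})")
    if not description.strip():
        problems.append("description is missing")
    elif len(description) > DESCRIPTION_LIMIT:
        problems.append(
            f"description is {len(description)} characters (limit {DESCRIPTION_LIMIT})"
        )
    return problems


# Hypothetical page metadata.
issues = check_meta_lengths(
    "Top Tips for Boosting Crawlability and Indexability",
    "Learn how to help search engines crawl and index your site.",
)
```

An empty list means both tags fit within the limits; anything else tells you which tag to shorten or fill in.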

An analysis conducted by Backlinko found that pages with a higher number of total meta tags often rank higher in Google search results than those with fewer meta tags.

In conclusion, having well-crafted meta tags can improve your website’s crawlability and indexability, leading to better visibility in search engine results. By following best practices when creating them, you can increase your chances of ranking higher and attracting more traffic to your site.

Are there any common mistakes that negatively impact a site’s crawlability or indexability?

Yes, there are several common mistakes that can negatively impact a site’s crawlability or indexability. These mistakes can lead to lower search engine rankings and less visibility for your website. Here are some of the most common mistakes:

- Blocked pages: If search engine bots can’t access your pages because of robots.txt rules or password protection, the content of those pages won’t be indexed. According to a study by Semrush, 4.45% of indexed pages on the web are blocked from crawlers.

- Duplicate content: Having duplicate content on your site can confuse search engines and prevent them from properly indexing your pages. A study by Raven Tools found that 29% of websites have duplicate content issues.

- Broken links: Broken links can harm your site’s usability and make it harder for search engines to crawl your pages. A study by Semrush found that an average of 7% of internal website links are broken.

- Slow page load times: Slow page speeds can lead to higher bounce rates and lower search engine rankings. According to Google, the average time it takes for a mobile page to load fully is over 15 seconds.

By avoiding these common mistakes, you can improve your site’s crawlability and indexability, ultimately leading to better search engine rankings and increased traffic.
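Of the mistakes above, duplicate content is one you can screen for with very little code. The sketch below groups pages whose text is identical once case and whitespace are normalized. It only catches exact copies, not near-duplicates, and the `pages` data is hypothetical.

```python
import hashlib
from collections import defaultdict


def content_fingerprint(text):
    """Hash page text after lowercasing it and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Return groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted page text, keyed by URL.
pages = {
    "/widgets": "Our widgets are built to last.",
    "/widgets?ref=home": "Our  widgets are built to LAST.",
    "/about": "We have made widgets since 1999.",
}

duplicate_groups = find_duplicates(pages)
```

Each group is a set of URLs serving the same text, which are typical candidates for a canonical tag or a redirect.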

What factors influence a website’s crawlability and how can they be optimized?

Website crawlability refers to a search engine’s ability to successfully navigate and index a website’s pages. Several factors influence crawlability, including:

- Site Architecture: The structure of a website should be logical, organized, and easy to navigate for both users and search engines. A clear hierarchy of content allows search engines to understand the site’s main topics and crawl pages more efficiently.

- Meta Tags: Properly written meta tags provide valuable information about a webpage’s relevance and help search engines understand what the page is about.

- Internal Linking: Including internal links within your website helps search engines understand the relationship between webpages on the site. Additionally, using relevant anchor text in these links can also improve the ranking power of certain keywords.

- Mobile Compatibility: In today’s mobile-first world, it’s essential for websites to be fully responsive and adaptable across a range of device sizes. By providing a user-friendly experience on all devices, websites make themselves more accessible to potential visitors and offer Google an optimized crawling experience.

There are several tools available for website owners and marketers to implement these optimizations. Utilizing XML sitemaps, Google Analytics, and other technical SEO resources can help pinpoint areas in need of improvement. Moreover, regularly monitoring key metrics such as loading speeds and bounce rates can help ensure continued optimization.

In this digital era where online visibility matters more than ever before, investing in crawlability optimization is imperative for ensuring success in virtually any industry or sector!

What is the role of sitemaps in improving crawlability, and how do they interact with robots.txt files?

Sitemaps are an essential tool for improving a website’s crawlability, as they provide search engine bots with a detailed map of the site’s structure and content. By listing all the pages on a website, including those that may not be easily discoverable through traditional navigation, sitemaps help ensure that search engines can find and index all important pages on a site.

While sitemaps provide search engines with valuable information about a site’s content, robots.txt files tell search engine bots which parts of a site should not be crawled. These files can be used to keep crawlers out of certain pages or directories, such as those containing duplicate content. They are not a security mechanism, so sensitive information needs real access controls such as password protection.

It is important to note that while sitemaps can improve crawlability, they do not override any instructions given in the robots.txt file. In other words, if a page or directory is blocked in the robots.txt file, its content will not be crawled even if it is included in the sitemap.
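Because robots.txt takes precedence, it is worth checking that the two files do not contradict each other. The following sketch lists sitemap URLs that the robots.txt rules block, using only Python’s standard library. The file contents are invented for the example, and the standard-library parser may not match Google’s handling of every rule.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def blocked_sitemap_urls(sitemap_xml, robots_txt, user_agent="*"):
    """Return sitemap URLs that the robots.txt rules disallow for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml)
    urls = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical files: the sitemap lists a page that robots.txt blocks.
SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/private/report</loc></url>
</urlset>
"""
ROBOTS = """\
User-agent: *
Disallow: /private/
"""

conflicts = blocked_sitemap_urls(SITEMAP, ROBOTS)
```

Any URL in `conflicts` should either be removed from the sitemap or unblocked in robots.txt, depending on whether you want it in search results.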

According to Google, websites with XML sitemaps are more likely to have their pages crawled and indexed quickly compared to those without sitemaps. Therefore, including a well-structured sitemap in your website’s SEO strategy is crucial for optimizing your crawlability and indexability.

In conclusion, while robots.txt files help keep unwanted pages from being crawled, sitemaps allow search engine bots to navigate through all important pages on your site. It is important to keep in mind that these two elements work together toward improving your website’s performance in search results.